🔬 Research Summary by Francesco Fabbri, a 4th year Ph.D. student at UPF, Barcelona, working on Algorithmic Bias in Recommender Systems. His work focuses on analyzing and mitigating unintended and potentially harmful algorithmic effects embedded in online social platforms.

[Original paper by Francesco Fabbri, Yanhao Wang, Francesco Bonchi, Carlos Castillo, Michael Mathioudakis]

Overview: Recommender systems play a key role on Online Social Platforms (OSP), for enhancing the connectivity between users and available contents. This paper focuses on mitigating the “radicalization pathway” affecting the recommendation output of “what-to-watch-next” algorithms. We define the problem of reducing the prevalence of radicalization pathways by selecting a small number of recommendations to change, in order to minimize the segregation among all radicalized videos.

Introduction

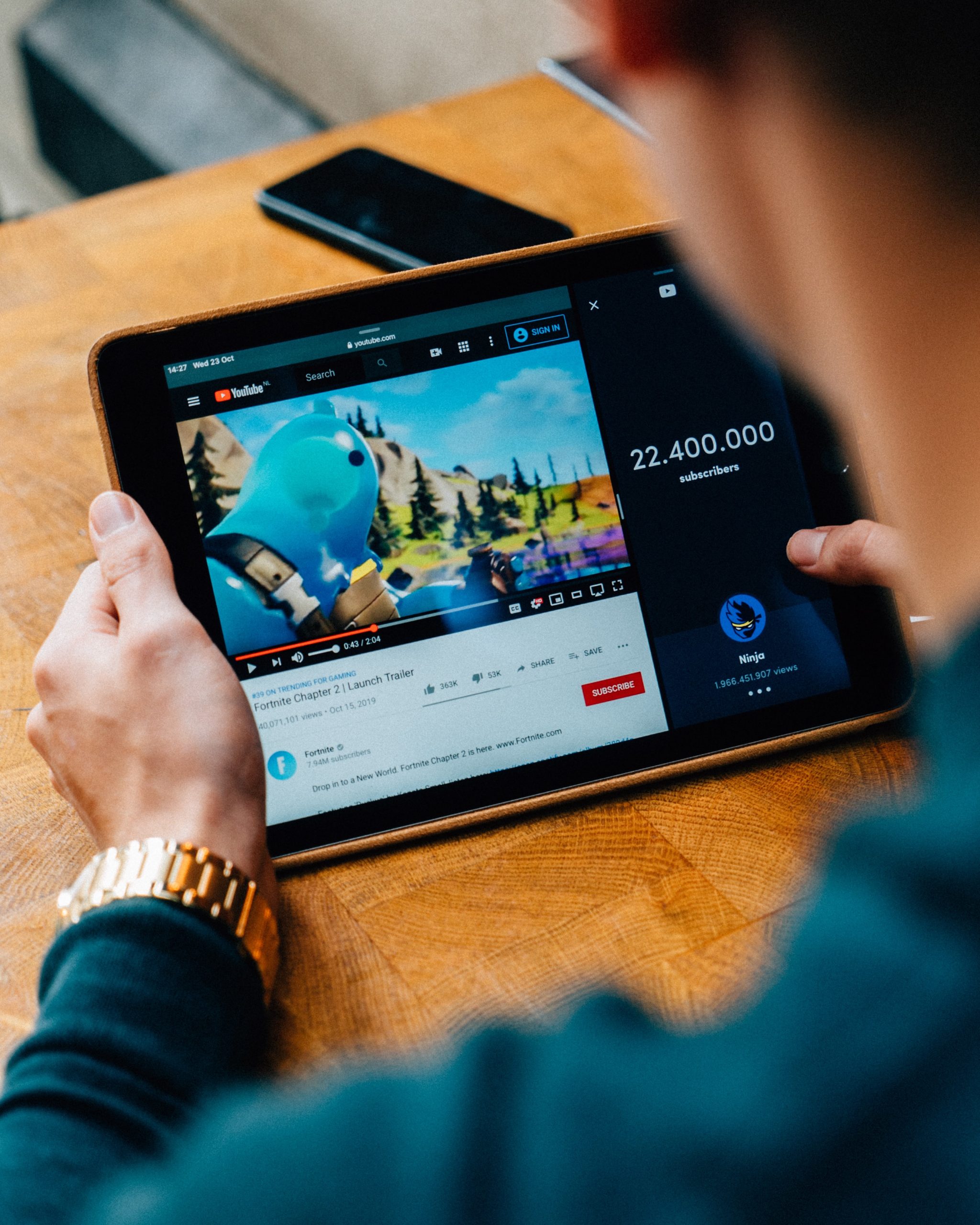

“What-to-watch-next” (W2W) recommenders are key features of video sharing platforms, as they sustain user engagement, thus increasing content views and driving advertisement and monetization. However, recent studies have raised serious concerns about the potential role played by W2W recommenders, specifically in driving users towards undesired or polarizing content. Specifically, radicalized communities on social networks and content sharing platforms have been recognized as keys in the consumption of news and in building opinions around politics and related subjects. Recent work highlights the role of recommender systems, which may steer users towards radicalized content, eventually building “radicalization pathways” (i.e., a user might be further driven towards radicalized content even when this was not her initial intent). In this paper, we study the problem of reducing the prevalence of radicalization pathways in W2W recommenders while maintaining the relevance of recommendations.

Key Insights

From W2W to Recommendation Graph

Formally, we model a W2W recommender system as a directed labeled network where nodes correspond to videos (or other types of content) and directed edges represent recommendation links from one node to another. In this scenario, each video is accompanied by the same number 𝑑 of recommendation links. Moreover, each video is already classified as either “harmful” (e.g., radicalized) or “neutral” (e.g., non-radicalized). The browsing activity of a user through the W2W recommendations is modeled as a random walk on the graph: after watching a video, the user moves to one of the next 𝑑 recommended videos with a probability that depends on the position of the recommended video in the ranking submitted to the user. In this setting, for each harmful node 𝑣, we measure the expected number of consecutive radicalized videos visited through the browsing before reaching any non-radicalized video. We call this measure the “segregation” score of node 𝑣: intuitively, it quantifies how easy it is to get “stuck” in radicalization pathways starting from a given node. Our goal is to reduce the segregation of the recommendation graph while guaranteeing that the quality of recommendations is maintained, where the quality is measured by the normalized discounted cumulative gain (nDCG) of each node.

Graph Rewiring to reduce Segregation

An important challenge is that the underlying recommendation graph has intrinsically some level of homophily because, given that the W2W seeks to recommend relevant videos, it is likely to link harmful nodes to other harmful nodes. We formulate the problem of reducing the segregation of the graph as selecting 𝑘 modifications in the lists of recommended videos for some nodes, using rewiring operations on edges. Each rewiring operation changes the destination of an existing recommendation, i.e. remove an old edge in the graph, creating a new one with the same source node. Proposing a solution with k-rewirings, we minimize the maximum of segregation scores among all harmful nodes, while maintaining recommendation quality measured by nDCG above a given threshold for all nodes. Our proposed algorithm is based on the absorbing random walk theory, thanks to which we can efficiently compute the segregation score of each node and update it after every rewiring operation. Specifically, our method finds a set of 𝑘 rewiring operations by greedily choosing the optimal rewiring for the special case of 𝑘 = 1 – i.e., the 1-Rewiring problem, then updates the segregation score of each node.

From W2W to News Recommendation

We present a solution effective in two different scenarios: one in the context of video sharing and the other in the context of news feeds. In first scenario, we are able to reproduce a pipeline similar to the one proposed by popular Video Sharing Platforms (e.g. YouTube) in which videos-to-videos recommendations are generated and our algorithm aims at reducing segregation of videos labeled as radicalized. Moreover, in the second case, we design a news recommender system which contains “reliable” and “unreliable” sources of information, in which our algorithm is employed to reduce the chances to capture the user in news characterized by low-quality information. We compare our proposed algorithm against several baselines, including an algorithm for suggesting new edges to reduce radicalization in Web graphs.

Between the lines

In our work we studied the problem of reducing the risk of radicalization pathways in what-to-watch-next recommenders via edge rewiring on the recommendation graph. This work is just a first step in the context of radicalization mitigation in Online Social Platforms. We aim to raise critical observations about the sequential interactions involving harmful contents and user personalization in online social networking platforms and hint the need to design algorithms which keeps in consideration existing biases involved either in the data or into the algorithm design. In our first problem formulation binary labels on the nodes are a strong assumption and relative scores in [0,1] should be considered as a follow-up. Moreover, in the design of our algorithm the recommendation graph is part of the input. This implies that the recommendations are precomputed and static. A scenario where recommendations are generated dynamically in an online fashion is needed.

Our work presents a metric quantifying segregation of contents, but many other phenomena can be modeled (e.g. reachability, discoverability) starting from the proposed definition. The choice depends on the different domains and aspects to focus on (e.g. popularity bias, algorithmic discrimination).