🔬 Research Summary by Teresa Scantamburlo, an Assistant Professor at Ca’ Foscari University of Venice (Italy). She works at the intersection of computer science and applied ethics, focusing on AI governance and human oversight.

[Original paper by Teresa Scantamburlo, Joachim Baumann, and Christoph Heitz]

Overview: Growing evidence shows that machine learning (ML) does not necessarily make human decisions fairer. Researchers have addressed the problem by focusing on the (un)fairness of ML models, and this approach raises a question: Is algorithmic fairness just a property of the model? In this paper, the authors argue that algorithmic fairness concerns the relationship between the prediction task and the decision task, and they develop a framework to clarify the roles of the “prediction-modeler” and the “decision-maker.”

Introduction

Many of today’s algorithmic decision systems rely on machine learning predictions. In the ML language, a prediction does not necessarily refer to a future event (e.g., whether a customer will return to my e-commerce store). It simply refers to an unknown fact at the time of a decision (e.g., whether a credit applicant is trustworthy). So, one may ask: What is the role of prediction in modern algorithmic decision-making? How does it impact the fairness of a decision? The authors argue that distinguishing between prediction and decision is key to tackling algorithmic unfairness. While fairness is often linked to the features of a prediction model, the authors contend that what is truly “fair” or “unfair” pertains to the broader decision system rather than the prediction model. As they put it, “Fairness is about the real-world consequences on human lives resulting from a decision, not merely a prediction.” This motivated a framework that distinguishes between the role of the ‘prediction-modeler’ and the role of the ‘decision-maker’ and specifies the information required from each for implementing fairness in a prediction-aided decision system. Based on a theoretical analysis of the interactions between the ‘prediction-modeler’ and the ‘decision-maker,’ the authors identify the information gaps that must be addressed to implement fairness measures in the decision system.

Key Insights

The (un)fairness of prediction-based decision-making

In this paper, the authors consider the typical scenario of an algorithmic decision-making system, where a life-changing decision is made based on the prediction of an ML model, such as deciding whether to grant a loan based on the applicant’s predicted repayment. In such a context, the authors distinguish between two functions:

- The predictive function that an ML model usually performs. The model takes individual data as input and produces an output, whether a score, a probability, or a point estimate, that predicts a target variable specific to a person. This target variable is typically unknown to the decision-maker at the time of the decision.

- The decision-making function, which is informed by the prediction but, in nearly all cases, is also influenced by additional parameters. For example, in a bank’s loan decision, the repayment probability, the interest rate, and the bank’s business strategy may all be decisive parameters.
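The two functions above can be kept apart in code. The following is a minimal sketch under stated assumptions, not the authors' formalization: the `Applicant` fields, the toy scoring rule in `predict_repayment_probability`, and the expected-return rule in `decide_loan` are all hypothetical and chosen only to show that the same prediction can lead to different decisions once other parameters (here, the interest rate) enter.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    # Hypothetical features, for illustration only.
    income: float
    debt: float


def predict_repayment_probability(applicant: Applicant) -> float:
    """Prediction function: a toy stand-in for an ML model.

    Returns an estimate of the (unknown) repayment outcome as a probability.
    """
    ratio = applicant.debt / max(applicant.income, 1.0)
    return max(0.0, min(1.0, 1.0 - ratio))


def decide_loan(p_repay: float, interest_rate: float,
                min_expected_return: float = 0.0) -> bool:
    """Decision function: uses the prediction plus business parameters.

    Assumed rule: grant the loan if the expected return per unit lent
    (interest earned if repaid, principal lost otherwise) meets a minimum.
    """
    expected_return = p_repay * interest_rate - (1.0 - p_repay)
    return expected_return >= min_expected_return


applicant = Applicant(income=50_000, debt=10_000)
p = predict_repayment_probability(applicant)

# Same prediction, two different decisions depending on the interest rate.
print(p, decide_loan(p, interest_rate=0.10), decide_loan(p, interest_rate=0.30))
```

In this sketch the prediction stays fixed while the decision flips, which is one way to see why fairness criteria applied only to the prediction model may miss what actually happens to the applicant.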

The distinction between prediction- and decision-making

Usually, in the ML literature, the distinction between the two tasks is blurred. The authors observe that even without an explicit ideological position, “formal characterizations tend to apply fairness criteria to the prediction model (e.g., the classifier), assuming that the decision consists of the prediction outcome.” However, a decision process can be more complex than a prediction model. From a theoretical point of view, the two concepts are benchmarked against different criteria. A prediction is usually assessed in terms of its predictive power (e.g., accuracy) with respect to a correct answer or a measurement, also known as “ground truth.” By contrast, the authors add that it is “nonsensical to ask whether a decision is accurate or not since, broadly speaking, there is no such thing as ‘ground truth’ in a decision process.” A decision may be deemed “right” or “wrong,” yet its evaluation can vary based on numerous factors. Assessing a decision’s quality should take into account its intended purpose and its impact on the decision-maker and the surrounding environment.
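The difference between the two benchmarks can be illustrated with a small sketch. It assumes a hypothetical lending setting in which predictions are scored by accuracy against observed outcomes, while decisions are scored by a utility that depends on parameters chosen by the decision-maker. The names `accuracy`, `total_utility`, `gain_repaid`, and `loss_default` are illustrative and do not come from the paper, and the utility captures only the decision-maker's side, not the impact on the people affected.

```python
from typing import Sequence


def accuracy(predicted: Sequence[int], observed: Sequence[int]) -> float:
    """Share of predictions that match the observed outcome (the ground truth)."""
    correct = sum(p == o for p, o in zip(predicted, observed))
    return correct / len(observed)


def total_utility(decisions: Sequence[bool], observed: Sequence[int],
                  gain_repaid: float, loss_default: float) -> float:
    """Utility of a set of loan decisions for the decision-maker.

    There is no ground truth for the decisions themselves; their value
    depends on the assumed gain and loss parameters.
    """
    total = 0.0
    for granted, repaid in zip(decisions, observed):
        if granted:
            total += gain_repaid if repaid else -loss_default
    return total


observed = [1, 1, 0, 1]          # 1 = repaid, 0 = defaulted
predicted = [1, 1, 1, 1]         # the model predicts repayment for everyone
decisions = [bool(p) for p in predicted]  # simplest case: decision = prediction

print(accuracy(predicted, observed))
print(total_utility(decisions, observed, gain_repaid=100, loss_default=1000))
print(total_utility(decisions, observed, gain_repaid=500, loss_default=1000))
```

The accuracy is the same in both runs, but the same decisions look poor under one set of business parameters and acceptable under another, which is the sense in which a decision's evaluation varies with context.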

Note that the distinction between the two functions is not only motivated by a theoretical analysis. In practice, these tasks may correspond to different roles, which are “split organizationally and covered by different people, different departments, or even different companies.”

The roles of the prediction-modeler and the decision-maker

The two roles, the prediction-modeler and the decision-maker, involve distinct tasks and often conflicting objectives. On the one hand, the prediction-modeler prioritizes prediction performance metrics such as accuracy. This approach can be problematic when machine learning models are deployed for consequential decision-making. Standard ML algorithms, which are optimized solely for accuracy, may fall short in complex settings and overlook factors that are pertinent to a decision. Moreover, “the limited domain-specific knowledge possessed by prediction-modelers can pose challenges in consequential decision-making.”

On the other hand, the decision-maker strives to maximize the benefits derived from decision-making, which are often aligned with business-related goals. These observations underscore that the goals of a prediction-modeler may diverge from the ultimate objectives of the decision system.

The interaction between the two roles

The framework addresses this inherent tension by delineating the roles of the prediction-modeler and the decision-maker. This separation facilitates distinct performance measures and assigns responsibilities to each actor, considering their domain-specific competencies.

From the perspective of responsibility, the decision-maker is responsible for the decisions and hence for their consequences. However, as discussed below, the prediction-modelers also have their own area of responsibility. They are responsible for creating the basis for a good decision, which consists of (a) delivering a meaningful and robust prediction and (b) delivering all the information the decision-maker needs to ensure fairness and meet other relevant ethical requirements.

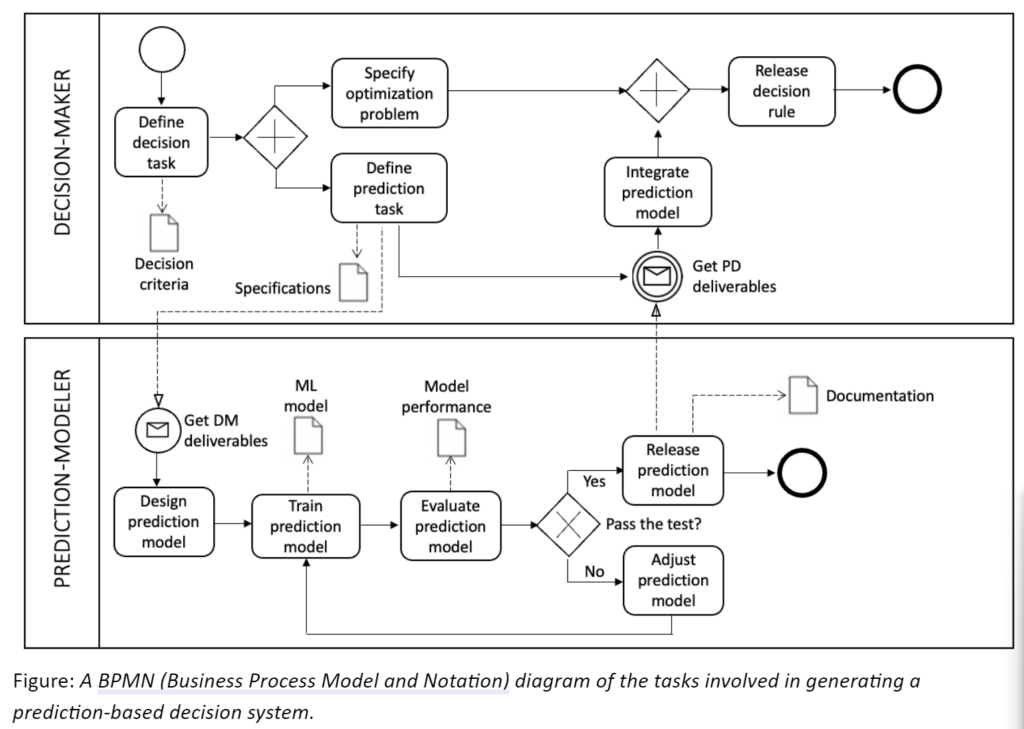

In concrete terms, the analysis of the two roles translates into a scheme of interaction that suggests an information flow that has practical consequences for the fairness of the decision system (see the figure). The information flow can be characterized by a minimum set of deliverables that the prediction-modeler and the decision-maker must provide to each other, such as the sensitive attribute and the calibration function.
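One way to picture such an exchange is as a pair of explicit data structures. The sketch below is an assumption-laden illustration, not the paper's scheme: it assumes the decision-maker names the sensitive attribute, the prediction-modeler returns a scoring function together with one calibration function per group, and the decision-maker then checks acceptance rates per group as one possible fairness measure. All class, field, and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class DecisionMakerRequest:
    # Assumed deliverable from the decision-maker: which attribute
    # fairness should be assessed on.
    sensitive_attribute: str


@dataclass
class ModelerDeliverables:
    # Assumed deliverables from the prediction-modeler: a raw score
    # and, per group, a function mapping scores to probabilities.
    score: Callable[[dict], float]
    calibration: Dict[str, Callable[[float], float]]


def calibrated_probability(person: dict, request: DecisionMakerRequest,
                           deliverables: ModelerDeliverables) -> float:
    """Map the raw score to a probability using the person's group calibration."""
    group = person[request.sensitive_attribute]
    return deliverables.calibration[group](deliverables.score(person))


def acceptance_rate_by_group(people: List[dict], request: DecisionMakerRequest,
                             deliverables: ModelerDeliverables,
                             threshold: float) -> Dict[str, float]:
    """Decision-level check: share of positive decisions in each group."""
    granted: Dict[str, List[bool]] = {}
    for person in people:
        group = person[request.sensitive_attribute]
        p = calibrated_probability(person, request, deliverables)
        granted.setdefault(group, []).append(p >= threshold)
    return {g: sum(v) / len(v) for g, v in granted.items()}


request = DecisionMakerRequest(sensitive_attribute="group")
deliverables = ModelerDeliverables(
    score=lambda person: person["x"],
    # Toy calibration: raw scores overstate the probability for group "b".
    calibration={"a": lambda s: s, "b": lambda s: 0.5 * s},
)
people = [
    {"group": "a", "x": 0.6}, {"group": "a", "x": 0.7},
    {"group": "b", "x": 0.8}, {"group": "b", "x": 1.0},
]
print(acceptance_rate_by_group(people, request, deliverables, threshold=0.5))
```

Without the sensitive attribute and the calibration information, the decision-maker in this sketch could compute neither the group-wise probabilities nor the group-wise acceptance rates, which is the kind of information gap the framework is meant to surface.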

Between the lines

The framework enhances the understanding of, and reasoning about, fairness in prediction-based decision-making. It offers a starting point for setting up scenarios that analyze algorithmic fairness from the point of view of two key actors. The strong interdependence between the two roles underlines the importance of creating meaningful interactions and communication channels. The framework could be further extended to assist AI providers in designing interactions among AI actors that can affect the equity and transparency of AI-based decision systems.

The contribution offered in this work goes in two main directions. First, it shifts the focus from abstract algorithmic fairness to context-dependent decision-making, recognizing diverse actors with unique objectives and independent actions. Second, it provides a conceptual framework to structure prediction-based decision problems concerning fairness issues (and potentially other ethical requirements) in real-world scenarios.