🔬 Column by Julia Anderson, a writer and conversational UX designer exploring how technology can make us better humans.

Part of the ongoing Like Talking to a Person series

Voice technology poses unique privacy challenges that make it difficult to earn consumer trust. Lack of user trust is a massive hurdle to the growth and ethical use of conversational AI. As Big Tech companies and governments attempt to define data privacy rules, conversational AI must continuously become more transparent, compliant and accountable.

Data fuel machine learning. In conversational AI, the data include what we say to our devices and each other, even in the privacy of our homes. Data dignity advocates have called for more control over our personal data, and regulations have grown alongside new technologies.

Eavesdropping at home

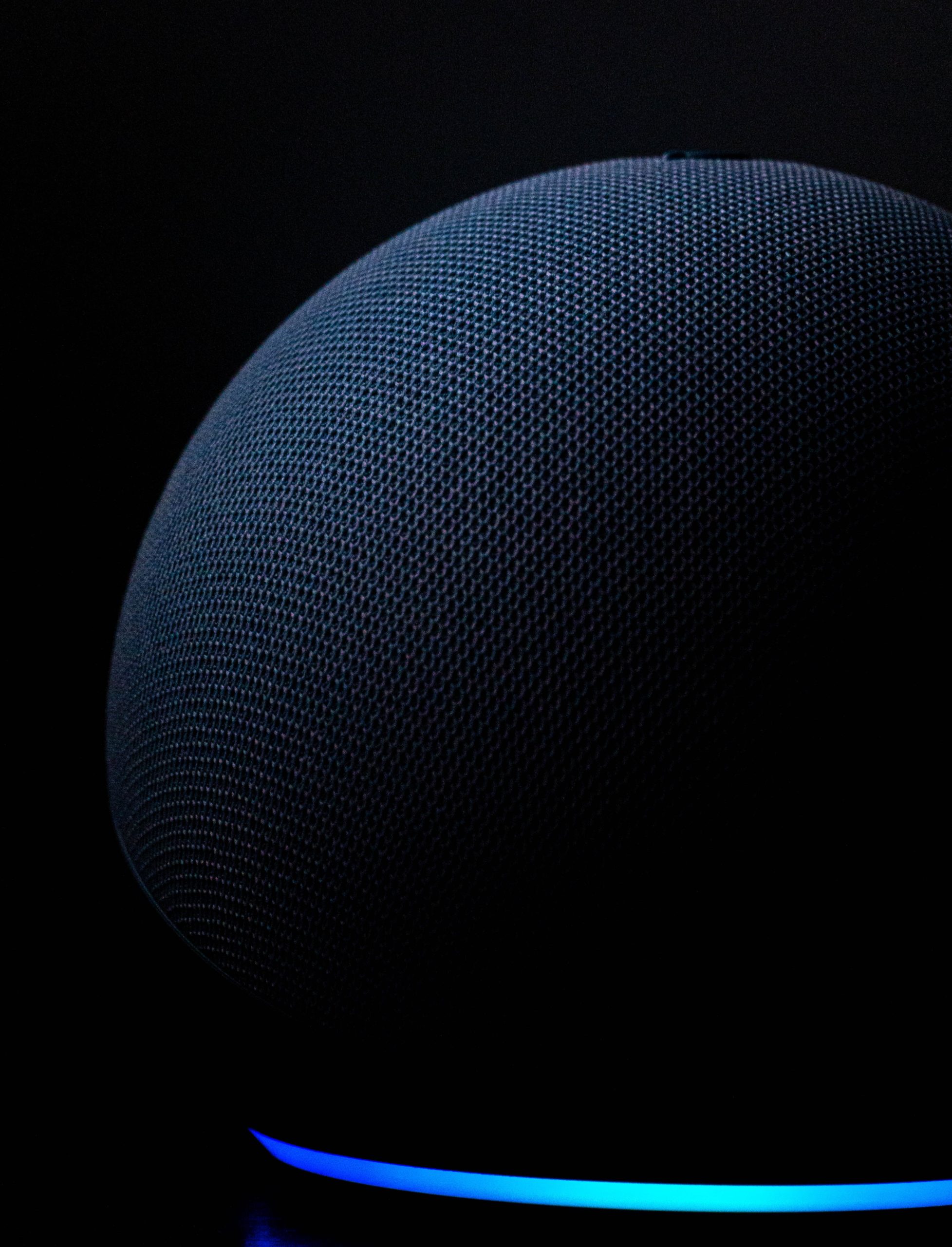

A common concern about voice assistants is the fear that someone, beyond your device, is listening to what you say. This alone makes people wary of putting machines with microphones around their houses. Beyond sensitive conversations being overheard, the voices of unintended people, such as children or guests, may be captured.

While voice assistants are one of the most advanced forms of conversational AI technologies, they become more intelligent when trained on better data. Such training requires humans to review user voice recordings from devices. These recordings are transcribed, annotated and returned to the system to train the AI to better respond to various situations.

While AI systems constantly improve based on the data they are trained on, the way companies acquire that data determines whether their products are trustworthy. Amazon and Google claim their smart speakers only review an “extremely small number of interactions” to improve the customer experience. An Echo device does not record all your conversations, but rather “wakes up” to talk to you only after it hears certain words (e.g., “Alexa”). Companies like Google also provide options to delete voice history or mute devices to give users more control over their interactions.
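The wake-word behavior described above can be pictured with a minimal sketch. It is only an illustration, not how any real smart speaker is implemented: actual devices detect the wake word in audio, while this example assumes speech has already been turned into text. The function name `capture_after_wake_word` and its parameters are hypothetical.

```python
import string


def capture_after_wake_word(utterances, wake_word="alexa"):
    """Keep only requests that begin with the wake word; discard the rest.

    Assumption: each utterance is already transcribed text. Real devices
    work on audio, so this is a simplified illustration of the idea.
    """
    captured = []
    for utterance in utterances:
        words = utterance.lower().split()
        if not words:
            continue  # nothing was said
        # Strip punctuation so "Alexa," still matches "alexa".
        first_word = words[0].strip(string.punctuation)
        if first_word == wake_word:
            # Only the request that follows the wake word is kept.
            captured.append(" ".join(words[1:]))
        # Anything else is dropped and never stored.
    return captured
```

In this sketch, a private remark that does not start with the wake word never reaches the `captured` list, which is the behavior the companies describe.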

Technology moves faster than regulation

Despite some safeguards, people deserve other opportunities to control how conversational AI collects personal data. Third-party apps or skills may not adhere to the same data collection guidelines as the device manufacturer, nor are they required to. The existing data privacy rights outlined in Europe’s General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA) are hardly universal. In fact, these rules are not specific to voice interfaces and AI, which may use data differently than other technologies.

The world of voice assistants has grown from the platforms of a few Big Tech companies to several independently developed and owned assistants. Introducing third-party providers, through voice apps or integrations, also complicates how data are gathered and used. This is the consumer equivalent of being tracked on websites by cookies, which are small files that remember information about you every time you visit a site.

Nowadays, many companies ask for explicit consent to collect data when you land on their website. This push towards opt-in data collection protects your personal information before it is shared with many entities, sometimes for advertising purposes. However, this kind of explicit consent is not common practice in conversational AI.

Preserving your voice

The nuances of voice technology make it difficult to create a one-size-fits-all approach to privacy. One way to assuage the fear of being recorded is through on-device processing. Rather than send your voice to the cloud, where humans may later review certain requests, your device would handle everything directly. Apple is taking this step towards transparency with Siri’s on-device processing, which also speeds up performance.

When it comes to data retention, the European Data Protection Board published guidelines reminding companies to ask themselves: is it necessary to store all voice recordings? Perhaps remembering payment information is beneficial to companies and consumers, but what about recent web searches? Users often wonder what purpose all their data serves. Transparency around how people can delete personal data is crucial for building trust despite these doubts.

Increasing transparency may require other novel measures. The Open Voice Network explores what that could sound like. For example, people could enter “training mode,” where the AI asks for permission to use data for training. These data could be deleted post-processing. Other suggestions include having voice assistants proactively ask questions such as “Do you want to hear XYZ company’s privacy policy?” or adapting privacy policies for text-to-speech playback.
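To make the “training mode” idea concrete, here is a small sketch of opt-in collection with deletion after processing. It is a hypothetical illustration, not a design published by the Open Voice Network or any vendor; the class `TrainingSession` and its methods are invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class TrainingSession:
    """Hypothetical opt-in 'training mode': nothing is kept without consent."""

    consented: bool = False
    recordings: list = field(default_factory=list)

    def request_consent(self, user_reply: str) -> bool:
        # The assistant asks for permission; only a clear "yes" opts in.
        self.consented = user_reply.strip().lower() in {"yes", "y"}
        return self.consented

    def record(self, utterance: str) -> bool:
        # Without consent, the utterance is never stored.
        if not self.consented:
            return False
        self.recordings.append(utterance)
        return True

    def process_and_delete(self) -> int:
        # Placeholder for the training step (assumed, not specified in
        # the article); afterwards the stored data are deleted.
        processed = len(self.recordings)
        self.recordings.clear()
        return processed
```

The point of the design is that consent comes first and storage is temporary: `record` refuses to keep anything until the user opts in, and `process_and_delete` leaves nothing behind.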

These practices are key when any sort of biometric data are used, like in voice authentication. An ideal approach is active enrollment, a type of opt-in, using a quick explanation of how voice matching would benefit the product experience. When implemented correctly, this type of technology could even verify health records or secure online portals. However, using audio recordings of someone else’s voice may introduce another type of privacy invasion.

Vocal accountability

Every bit of data gathered is a puzzle piece about one’s life. While the commands we give our voice assistants may be innocuous, what the AI infers about us may be a misrepresentation. In this case, what we don’t know could harm future experiences with these products.

Keeping AI transparent, asking for user consent and providing data controls are all steps in the right direction. If we treat our voices as personally as we do our passwords, then companies should continue keeping our identities safe.