✍️ Original article by Ravit Dotan, a researcher in AI ethics, impact investing, philosophy of science, feminist philosophy, and their intersections.

In this article, the first in the series, I describe the framework I am creating to help organizations develop AI more responsibly. In a nutshell, I recommend that organizations design policies and actions to increase their knowledge of AI ethics, integrate this knowledge into their workflows, and create oversight structures to keep themselves accountable.

My framework builds on research from responsible innovation (e.g., Stilgoe, Owen, & Macnaghten; Lubberink et al.), AI ethics (e.g., NIST’s framework), philosophy (e.g., Longino), and feedback from practitioners and experts in AI ethics. It is also compatible with leading frameworks for risk assessment and AI ethics principles, such as the European Commission’s Ethics Guidelines for Trustworthy AI, UNESCO’s Recommendation on the Ethics of Artificial Intelligence, the OECD’s AI Principles, NIST’s Guide for Conducting Risk Assessments, and the EthicalOS Toolkit.

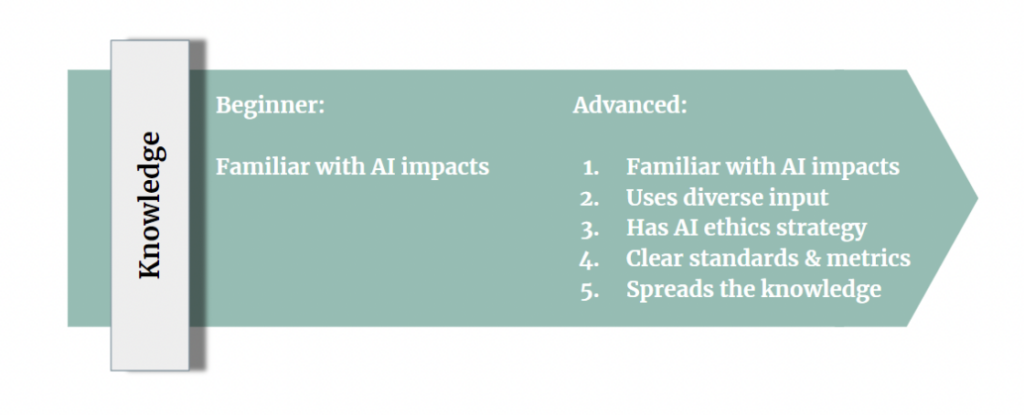

In what follows, I discuss each pillar of my framework: knowledge, workflow, and oversight. For each, I pose questions that organizations developing AI should ask themselves as they design their policies, and I suggest concrete actions they can take to advance their AI ethics maturity from beginner to advanced. The next article in this series will describe a case study, and the third will offer guidance to those investing in data and AI companies.

1. Knowledge

You can’t solve problems without understanding them. Therefore, it is crucial that organizations that develop AI work to understand how their AI may impact people, society, and the environment, for better or worse.

When beginning their journey in AI ethics, companies should work to understand the impacts of their AI systems. Organizations that are advanced in AI ethics should continuously grow their knowledge, have a strategy for implementing AI ethics, set standards and metrics informed by diverse input, and spread that knowledge across the organization. What this means in practice depends on the company’s stage of development: our expectations of a pre-seed company differ from our expectations of an enterprise.

1.1 Familiarity with AI impacts

To become familiar with your AI system’s potential impacts, anticipate what could go wrong and critically evaluate your assumptions and activities. Early-stage companies may need to assess their risks themselves, and they can and should do so even at the pre-seed stage. Helpful tools for evaluating AI risks include Kosa’s Responsible AI Self-Assessment, the EthicalOS Toolkit, NIST’s Guide for Conducting Risk Assessments, the OECD Framework for the Classification of AI Systems, Digital Dubai’s AI System Ethics Self-Assessment Tool, Canada’s Algorithmic Impact Assessment Tool, and the Alan Turing Institute’s Understanding Artificial Intelligence Ethics and Safety guide. More established companies can be expected to bring in experts to evaluate their AI’s potential impacts, since experts can guide them and offer an informed external perspective.

1.2 Consulting diverse experts and stakeholders about the potential impacts of your AI system

Without the participation of diverse stakeholders, you are likely to have substantive blind spots when you think about the impacts of your AI. Pre-seed companies can include AI ethics in their customer discovery processes and purposefully seek out conversations with diverse stakeholders. Well-established companies may deploy surveys, convene focus groups, facilitate research, and build feedback channels into their platforms to engage with diverse stakeholders.

Whichever communication channels you set up, make sure to address the following questions:

- How can your organization gain access to diverse perspectives from all stakeholders?

- How can your organization cultivate the literacy that different stakeholders need to be able to engage with AI ethics?

- Is your organization proactively seeking ethics feedback about the AI systems it develops and uses?

- How do you use stakeholder feedback to improve your AI system?

1.3 Having a strategy for implementing AI ethics

Organizations should know where AI ethics fits in their overall strategy. For example, which risk areas matter most to them? Which departments’ workflows should include AI ethics components?

1.4 Having clear AI ethics standards and metrics

For example, in pre-seed companies, formulating standards may mean clearly articulating red lines to guide decisions on which capabilities not to pursue. In well-established companies, having clear standards and metrics may mean articulating AI ethics principles and deciding on technical interpretations and minimum thresholds for each.

You can find some guidance on articulating AI ethics principles here.
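To make the idea of a "technical interpretation and minimum threshold" more concrete, here is a minimal Python sketch. It assumes, purely for illustration, that an organization interprets a fairness principle as the ratio between the lowest and highest rates of positive predictions across groups, and sets a minimum of 0.8, echoing the "four-fifths" rule of thumb used in US employment contexts. The `Standard` class, the helper functions, the metric, the threshold, and the toy data are all hypothetical; your own principles may call for entirely different metrics.

```python
from dataclasses import dataclass


@dataclass
class Standard:
    """A principle paired with a technical interpretation (a metric) and a minimum threshold."""
    principle: str
    metric: str
    minimum: float


def selection_rates(predictions, groups):
    """Share of positive predictions (1s) within each group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    # If no group receives any positive predictions, treat the groups as equal
    return min(rates.values()) / highest if highest > 0 else 1.0


def meets_standard(standard, value):
    """True if the measured value reaches the standard's minimum threshold."""
    return value >= standard.minimum


# Hypothetical standard; the 0.8 cut-off echoes the "four-fifths" rule of thumb
fairness = Standard(principle="fairness", metric="disparate_impact_ratio", minimum=0.8)

preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
ratio = disparate_impact_ratio(preds, groups)
print(f"{fairness.metric} = {ratio:.2f}, meets standard: {meets_standard(fairness, ratio)}")
# prints: disparate_impact_ratio = 0.33, meets standard: False
```

Writing the standard down as data, rather than leaving it implicit, means the same threshold can be checked in tests, dashboards, or reviews, and changed deliberately when the organization revisits its principles.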

1.5 Effectively spreading knowledge about AI ethics across the organization

For example, in pre-seed companies that consist only of the founders, spreading AI ethics knowledge may mean intentional conversations about the topic between the founders. In well-established companies, spreading knowledge may mean including AI ethics in onboarding and ongoing training. Such activities help employees build up their skills and create spaces to have meaningful conversations that will push your organization forward.

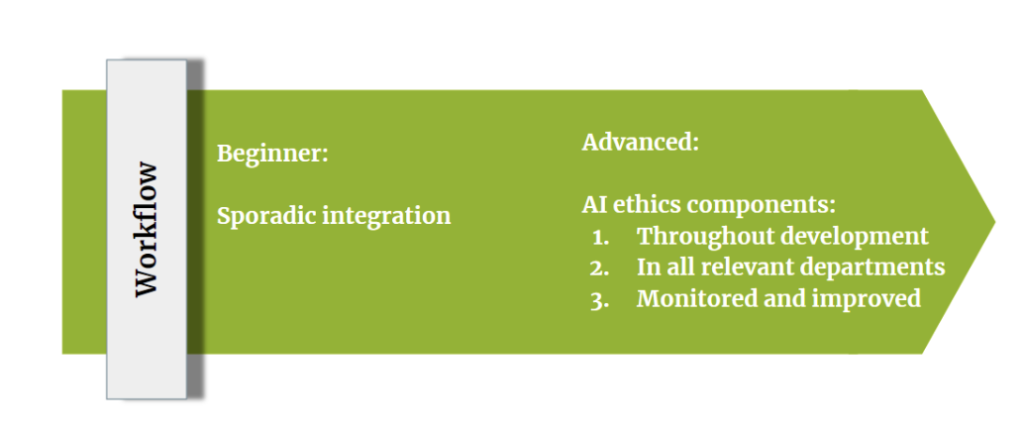

2. Workflow

If your goal is to make your AI more responsible, you must act on your knowledge by incorporating responsible AI practices into all your workflows, including R&D, sales, procurement, HR, and so on. Companies that are beginners in AI ethics integrate it into their workflow sporadically. Companies that are advanced in AI ethics do so systematically and holistically.

As with knowledge, what this means depends on the company’s stage of development. Even pre-seed companies can and should include AI ethics in their workflows. For example, if your workflow consists of following a to-do list in a shared doc, think about where AI ethics belongs on that list. The rule of thumb is that the company’s workflows, whatever they are, should have systematic and holistic ethical AI facets. In this section, I suggest some questions to keep in mind as you think about how to include AI ethics in your workflows.

2.1 Integrate AI ethics components throughout the development life cycle, from ideation to production

Questions that can help you decide how to embed responsible AI practices in your R&D include:

- What is your process for detecting and resolving unsatisfactory responsible AI performance in each development phase?

- Which responsible AI issues would prevent moving the feature from one phase to the next, e.g., from ideation to development? Which issues would require taking the feature offline?

- How do you create incentives to meet your responsible AI goals in your R&D work? For example, will your R&D department have responsible AI OKRs and KPIs?
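One way to operationalize the questions above is a phase gate that checks open responsible AI issues before a feature moves forward. The minimal Python sketch below assumes a hypothetical list of development phases and three hypothetical severity levels (advisory, blocks promotion, requires rollback); the names `Severity`, `PHASES`, and `gate_decision` are illustrative only, and real organizations would define their own phases, severities, and escalation paths.

```python
from enum import Enum


class Severity(Enum):
    """Hypothetical severity levels for a responsible AI issue."""
    ADVISORY = 1           # track it, but it does not block anything
    BLOCKS_PROMOTION = 2   # the feature cannot move to the next phase
    REQUIRES_ROLLBACK = 3  # the feature must be taken offline if it is live


# Hypothetical development phases, from ideation to production
PHASES = ["ideation", "development", "testing", "production"]


def gate_decision(phase, open_issues):
    """Decide what happens to a feature, given its open responsible AI issues.

    open_issues is a list of (description, Severity) tuples.
    """
    # Find the most severe open issue (None if there are no issues)
    worst = max((sev for _, sev in open_issues), key=lambda s: s.value, default=None)
    if worst is Severity.REQUIRES_ROLLBACK and phase == "production":
        return "take offline"
    if worst in (Severity.BLOCKS_PROMOTION, Severity.REQUIRES_ROLLBACK):
        return f"hold in {phase}"
    next_index = PHASES.index(phase) + 1
    if next_index == len(PHASES):
        return "stay in production"
    return f"promote to {PHASES[next_index]}"


issues = [("bias metric below the agreed minimum", Severity.BLOCKS_PROMOTION)]
print(gate_decision("development", issues))  # hold in development
print(gate_decision("development", []))      # promote to testing
```

Making the severity levels explicit turns the answers to "which issues block promotion?" and "which require taking a feature offline?" into documented decisions rather than ad hoc judgment calls.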

Once you have your responsible AI standards, measures, and policies, you can look for the technical tools to help you meet your goals. You may create some of the tools yourself. Alternatively, you may use tools that are already out there. Prominent tools include IBM’s AI Fairness 360, an open-source toolkit to reduce bias in AI, Gebru et al.’s Datasheets for Datasets, Mitchell et al.’s Model Cards for Model Reporting, and Holland et al.’s Dataset Nutrition Label, all of which help increase transparency. Last, you may opt to use a vendor to help you. You can find many responsible AI vendors in the Ethical AI Database.

2.2 Integrate AI ethics components into all departments relevant to your AI ethics activities

Any department that can enhance or hinder your AI ethics activities is relevant. This includes HR, sales, marketing, customer relations, operations, and more.

For example, questions to ask yourself in setting up sales and procurement policies include the following:

- How do you determine whether a third-party AI system meets your responsible AI standards, both before you buy it and on an ongoing basis?

- How do you determine whether a buyer will likely meet your responsible AI standards?

- Which responsible AI issues block a purchase/sale or end an ongoing engagement?

- What is the process for detecting and resolving violations of your responsible AI standards after the system is sold or bought? For example, who is responsible for detecting and resolving problems: you or the third party?

- How do you create incentives to meet your responsible AI goals in buying and selling AI systems? For example, will your sales/procurement department have responsible AI KPIs?

2.3 Monitor and improve

You need to routinely monitor how effectively AI ethics is integrated into your workflows, because changing circumstances can reduce that effectiveness. How do you measure and monitor the efficacy of your responsible AI policies in R&D and other departments?

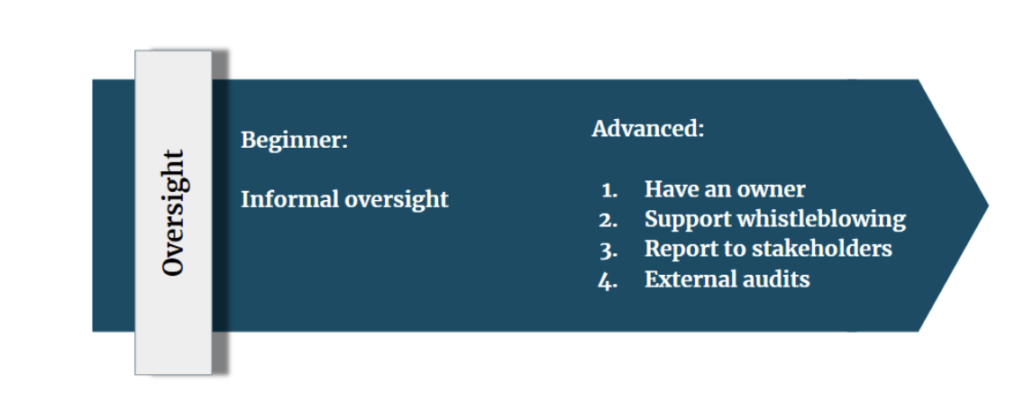

3. Oversight

Oversight is critical in keeping ourselves accountable. Beginners use informal oversight structures. Advanced organizations use multiple oversight structures that provide different kinds of accountability. As in the other pillars, our expectations for AI ethics oversight depend on the company’s stage of development, and even early-stage companies can and should set up oversight structures for AI ethics.

3.1 Put someone in charge of AI ethics activities

Ensure the responsible AI owner is well-positioned to enforce policies and influence the organization. In addition, think about how to instill a culture of responsibility. Even when an individual is in charge, everyone in the organization is responsible for aligning AI with the company’s values.

3.2 Set up reporting and whistleblowing procedures for responsible AI concerns

Employees are the first line of defense in detecting AI ethics issues. Make sure they have a designated space to report concerns without fear of judgment or retaliation, and that you have effective procedures for reviewing and addressing those concerns. For example, seed-stage companies can set aside dedicated time to discuss AI ethics as a group, while established companies can have formal whistleblowing procedures.

3.3 Report on your AI ethics progress to external and internal stakeholders

Make sure that your reports are accessible to diverse stakeholder groups and that people can use them to track your progress over time. It is helpful to report on your technical performance (e.g., your datasets’ composition), your workflow policies, and your culture. Early-stage companies can report their AI ethics progress to their board and investors. Established companies can also include AI ethics in their periodic public reports.

3.4 Conduct external audits for your responsible AI performance and practices

As Charles Raab poetically puts it, “an organization or profession that simply marks its homework cannot make valid claims to be trustworthy” (see Ayling and Chapman for more discussion of the importance and state of external audits in AI ethics). Just like your reports, audits can help examine technical performance, the efficacy of workflow policies, and your culture.

4. Summary and the next installment

We want AI to build humanity up rather than tear it down. In this article, I sketched steps organizations can take to develop AI more responsibly. My framework is organized around three pillars (knowledge, workflow, and oversight) and builds on research from responsible innovation, AI ethics, and philosophy. In the next article, I will describe a case study; in the third, I will provide guidance for investors and buyers of AI systems. Feedback is very welcome in this form.