🔬 Research Summary by Keyu Zhu and Nabeel Gillani

Keyu Zhu is a PhD student in the H. Milton Stewart School of Industrial and Systems Engineering at Georgia Institute of Technology. His current research interest lies in real-world applications of differential privacy.

Nabeel Gillani is an Assistant Professor of Design and Data Analysis at Northeastern University, with a joint appointment in the College of Arts, Media and Design and the D’Amore-McKim School of Business. He directs the Plural Connections Group, whose mission is to use tools from computation and design to foster pluralism: an inclusive response to diversity in society.

[Original paper by Keyu Zhu, Nabeel Gillani, and Pascal Van Hentenryck]

Overview: Over the past decade, differential privacy, the de facto standard for privacy protection, has been increasingly adopted by statistical agencies (e.g., the US Census Bureau) and corporations (e.g., Apple and Google), particularly when releasing sensitive information. This paper studies an under-explored yet important application that relies on demographic information, namely the redrawing of elementary school attendance boundaries (“redistricting”) to promote racial and ethnic diversity, and evaluates how, and to what extent, using privacy-protected demographic information might affect racial and socioeconomic integration efforts across US public schools.

Introduction

Imagine yourself as an extraterrestrial researcher about to visit Earth in the Earth-616 universe. Your mission: to create more diversity by redrawing school attendance boundaries. However, to protect the planet’s privacy, the Sorcerer Supreme, Doctor Strange, has devised a security protocol called “differential privacy,” which prevents you from directly observing data about the inhabitants of Earth-616 for fear that you would learn too much about Earthlings. Instead, under differential privacy, you and Doctor Strange will travel to another universe, randomly selected from among millions, where you observe similar yet slightly perturbed versions of the Earthlings of Earth-616 and use those observations to develop school attendance boundaries optimized to foster more racially and ethnically diverse schools. Doctor Strange will then teleport back to Earth-616 and report your findings to local educational agencies.

Our research investigates the impacts of this multiverse-traversal protocol on your redistricting endeavors, especially how redrawing boundaries based on “noisy,” randomly perturbed data might affect the redistricting policies that local educational agencies eventually adopt to foster more diverse schools. Our main finding: as Doctor Strange strengthens privacy protection, the choice of destination across the multiverse becomes progressively more random, and when the alternative boundaries derived during the trip are applied to school districts in the Earth-616 universe, they turn out to be less effective at desegregating schools.

Impacts of Differential Privacy on Diversity-Promoting School Attendance Boundaries

Optimizing School Attendance Boundaries to Promote Diversity

Racial segregation remains prevalent in US K-12 education. According to the U.S. Government Accountability Office (GAO), more than a third of students (about 18.5 million) attended a predominantly same-race/ethnicity school during the 2020-21 school year. The same agency also reports that school districts formed in the past decade tend to be more racially segregated than other districts: over 70% of their students are white, which further worsens the situation.

It is therefore both meaningful and necessary to rethink the dominant student assignment policy across US school districts (school attendance boundaries, or neighborhood catchment areas defined for schools) in order to create a more diverse and integrated environment for the next generation.

As an initial step, Gillani et al. (2023) take a constraint programming approach to simulating alternative attendance boundaries optimized for racial and ethnic desegregation across multiple US school districts. This study builds upon their algorithms.

Challenges from Differential Privacy

The U.S. Census Bureau has recently adopted a disclosure avoidance system (DAS) based on differential privacy, a mathematical framework for quantifying and limiting privacy loss, to release its 2020 Census data products (Abowd, 2018). This change may substantially affect many downstream decisions that depend on demographic data, including political redistricting (Kenny et al., 2021) and funds allocation (Steed et al., 2022).

In the foreseeable future, other datasets used by local educational agencies (such as the American Community Survey), and perhaps the agencies themselves, may adopt similar privacy-preserving approaches to safeguard the privacy of residents and students. This raises the question of how differential privacy would affect one of the critical policy decisions that school districts often make based on these datasets: how to draw school attendance boundaries that balance the many, often competing, objectives districts contend with, such as ensuring that students do not attend schools that are too far away while also ensuring that schools are demographically diverse and offer students from all backgrounds the chance to learn from and with one another.

Before diving into our findings, here is a brief walkthrough of how differential privacy works. At its core, it limits how much a data analyst can learn about any individual’s sensitive information from released data: the output should look nearly the same whether or not any one person’s data is included, and a privacy parameter ε controls how close “nearly the same” must be. A common way to achieve this is to add carefully calibrated random noise to the data, producing a perturbed version of the original dataset.
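To make “calibrated noise” concrete, here is a minimal sketch of the Laplace mechanism, one standard way to satisfy ε-differential privacy for counts. It is an illustration only, not necessarily the mechanism used in our study (the Census Bureau’s 2020 system, for instance, uses a more elaborate design). It assumes each student contributes to exactly one count, so adding or removing one student changes a single count by at most 1 (sensitivity 1). Noise is drawn with scale sensitivity / ε, so halving ε doubles the typical noise. The function names and block IDs are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from Laplace(0, scale).

    The difference of two independent exponential draws with mean `scale`
    is Laplace-distributed with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def privatize_counts(counts: dict, epsilon: float,
                     sensitivity: float = 1.0, seed=None) -> dict:
    """Hypothetical helper: Laplace mechanism on per-block student counts.

    Each count gets noise with scale sensitivity / epsilon, so a smaller
    epsilon (stronger privacy) means larger noise. Rounding and clipping
    at zero are post-processing and do not weaken the privacy guarantee.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = random.Random(seed)
    scale = sensitivity / epsilon
    return {block: max(0, round(count + laplace_noise(scale, rng)))
            for block, count in counts.items()}


# Illustrative counts for three hypothetical Census blocks.
true_counts = {"block_1": 40, "block_2": 3, "block_3": 17}
print(privatize_counts(true_counts, epsilon=1.0, seed=7))
```

Small blocks are affected most: noise of a few students barely changes a count of 40 but can erase or double a count of 3, which is one reason boundary optimizers fed noisy counts can reach different decisions.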

Privacy-Diversity Trade-off

We conduct empirical studies of 968 elementary schools across 67 school districts in Georgia, USA, and evaluate how Census blocks are assigned to elementary schools within each district under the following three scenarios:

- non-private school assignment derived from the model of Gillani et al. (2023),

- private school assignment based on privacy-protected demographics of students,

- current school assignment.
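The toy sketch below shows how the three scenarios relate, under loudly stated assumptions: a hypothetical six-block, two-school district with made-up counts and distances; exhaustive search standing in for the constraint programming model of Gillani et al. (2023); the two-group dissimilarity index as the only objective; a single maximum-distance constraint; and Laplace noise as the privacy mechanism. None of these numbers come from our study. The key point is that the private assignment is optimized on noisy counts but, like the other two, is evaluated on the true counts.

```python
import itertools
import random

# Hypothetical toy district: per-block student counts (group_a, group_b).
COUNTS = {
    "b1": (30, 2), "b2": (25, 5), "b3": (4, 28),
    "b4": (3, 20), "b5": (15, 15), "b6": (10, 12),
}
SCHOOLS = ("north", "south")
# Hypothetical home-to-school distances in km, ordered as in SCHOOLS.
DISTANCES = {
    "b1": (1.0, 3.0), "b2": (1.5, 2.5), "b3": (3.0, 1.0),
    "b4": (2.8, 1.2), "b5": (2.0, 2.0), "b6": (2.2, 1.8),
}
CURRENT = {"b1": "north", "b2": "north", "b3": "south",
           "b4": "south", "b5": "north", "b6": "south"}
MAX_KM = 2.5  # hypothetical travel-distance constraint


def dissimilarity(assignment, counts):
    """Two-group dissimilarity index across schools (0 = fully integrated)."""
    total_a = sum(a for a, _ in counts.values())
    total_b = sum(b for _, b in counts.values())
    if total_a == 0 or total_b == 0:
        return 0.0
    d = 0.0
    for school in SCHOOLS:
        a = sum(counts[k][0] for k, s in assignment.items() if s == school)
        b = sum(counts[k][1] for k, s in assignment.items() if s == school)
        d += abs(a / total_a - b / total_b)
    return d / 2


def feasible(assignment):
    """Every block lies within MAX_KM of its school; every school gets a block."""
    near = all(DISTANCES[k][SCHOOLS.index(s)] <= MAX_KM
               for k, s in assignment.items())
    return near and set(assignment.values()) == set(SCHOOLS)


def optimize(counts):
    """Exhaustive search standing in for a constraint programming solver."""
    blocks = sorted(counts)
    candidates = (dict(zip(blocks, combo))
                  for combo in itertools.product(SCHOOLS, repeat=len(blocks)))
    return min((c for c in candidates if feasible(c)),
               key=lambda c: dissimilarity(c, counts))


def privatize(counts, epsilon, seed):
    """Laplace mechanism: add Laplace(0, 1/epsilon) noise to every count.

    Assumes sensitivity 1 (one student changes one count by 1). Rounding and
    clipping at zero are post-processing and do not weaken the guarantee.
    """
    rng = random.Random(seed)

    def lap():
        # Difference of two Exp(rate=epsilon) draws is Laplace(0, 1/epsilon).
        return rng.expovariate(epsilon) - rng.expovariate(epsilon)

    return {k: (max(0, round(a + lap())), max(0, round(b + lap())))
            for k, (a, b) in counts.items()}


non_private = optimize(COUNTS)
private = optimize(privatize(COUNTS, epsilon=0.5, seed=1))
for name, plan in [("current", CURRENT), ("non-private", non_private),
                   ("private", private)]:
    # Every plan is scored on the TRUE counts, as in the comparison above.
    print(f"{name:12s} D = {dissimilarity(plan, COUNTS):.3f}")
```

Because the feasibility check here depends only on distances, the private plan is always feasible on the true data, so by construction it can never beat the non-private plan on the true counts; it can only match or fall short of it, which is the in-between position the results describe.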

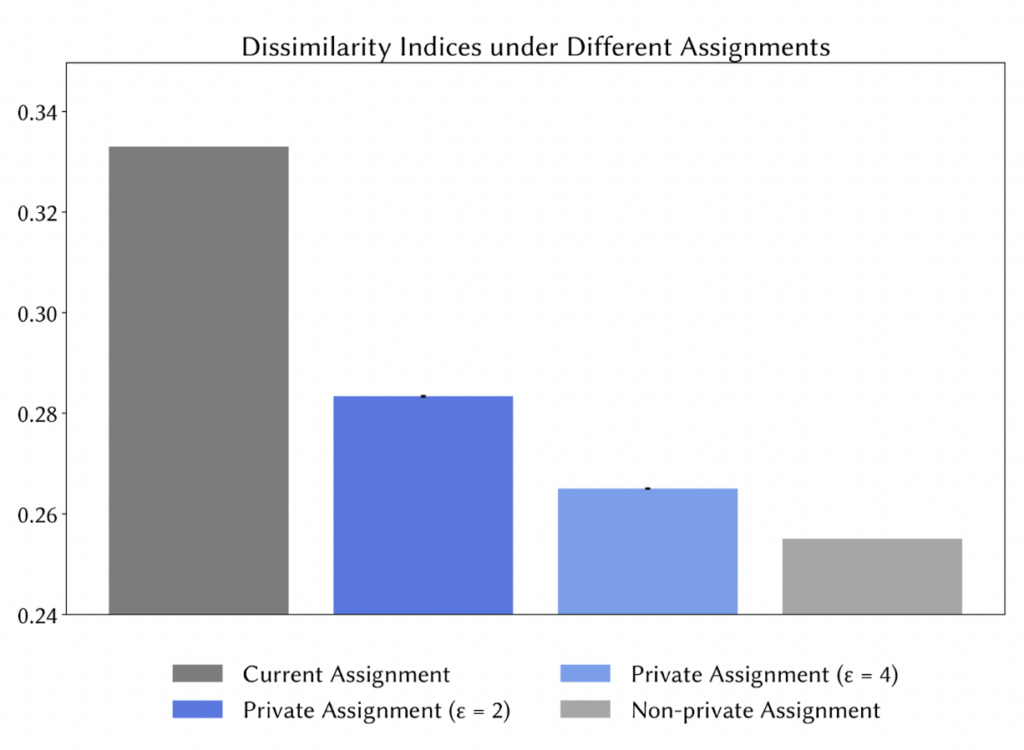

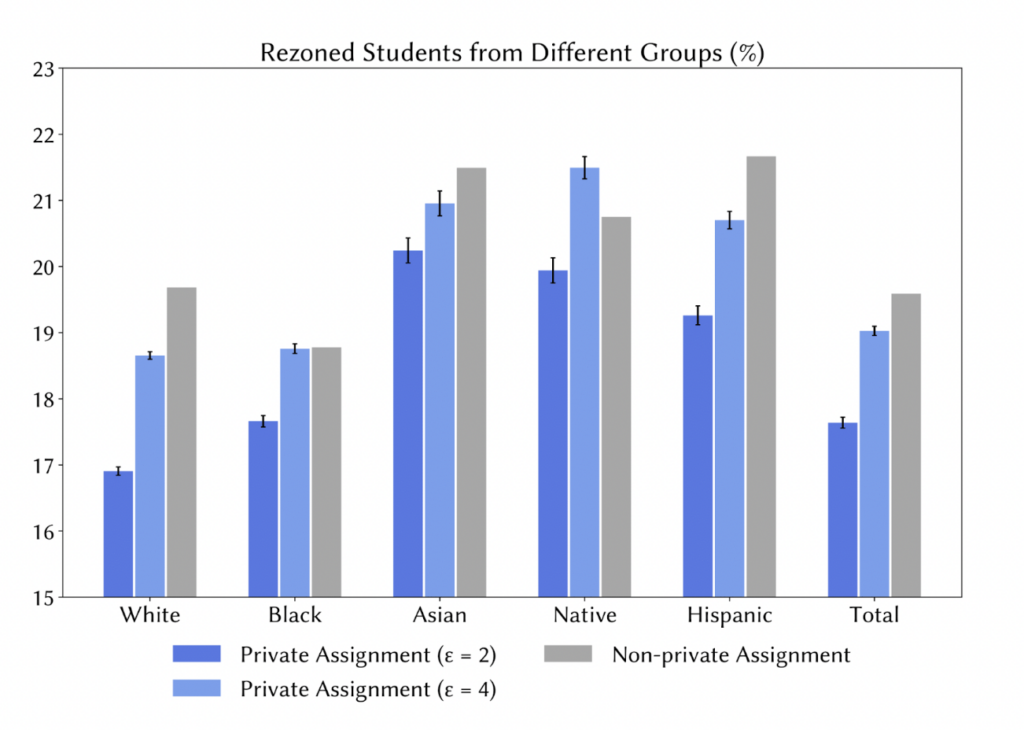

Our overarching finding is that, across several outcome measures, private school assignments perform in between the non-private and current assignments.

The lower the dissimilarity index, the less racially segregated the schools. A lower value of ε represents a stronger privacy guarantee.

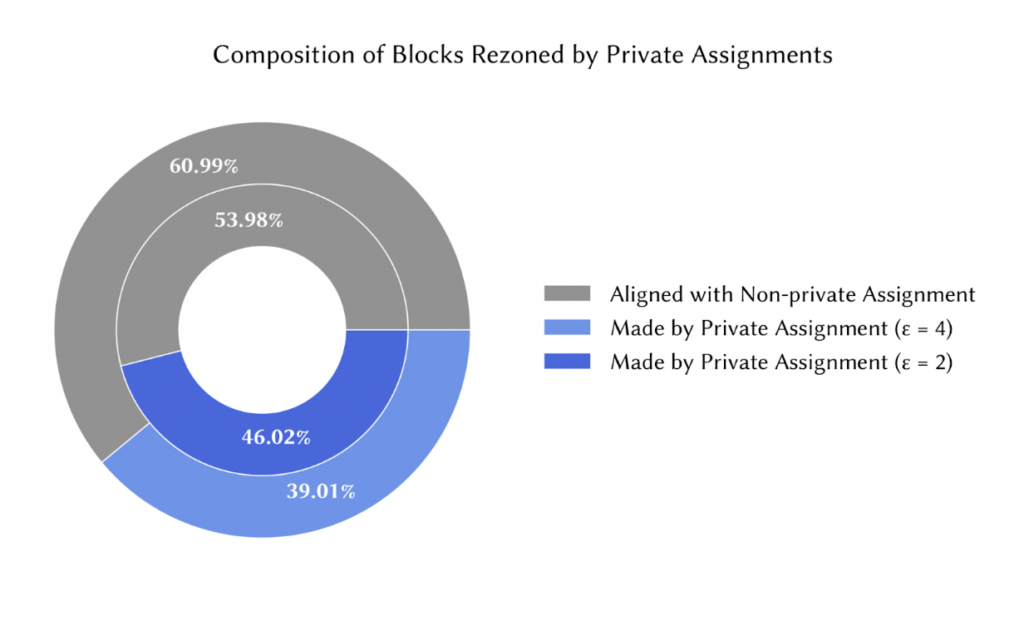

As the figure above illustrates, strong privacy protection weakens the desegregating effects of rezoning. This can be partially explained by the fact that, when perturbed by more random noise, the private school assignment rezones fewer Census blocks and students in expectation.
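The dissimilarity index behind these comparisons has a standard two-group form, D = ½ Σ_s |a_s / A − b_s / B|, where a_s and b_s are the numbers of students from two groups at school s and A and B are the district-wide totals. It can be read as the share of either group that would have to switch schools for both groups to be spread evenly. The sketch below assumes this two-group form; the exact grouping used in our study may differ, and the function name is hypothetical.

```python
def dissimilarity_index(group_a, group_b):
    """Two-group dissimilarity index across schools.

    group_a[i] and group_b[i] are the counts of each group at school i.
    Returns a value in [0, 1]: 0 means both groups are spread across schools
    in identical proportions; 1 means the groups never share a school.
    """
    if len(group_a) != len(group_b):
        raise ValueError("both lists need one entry per school")
    total_a, total_b = sum(group_a), sum(group_b)
    if total_a == 0 or total_b == 0:
        raise ValueError("each group needs at least one student")
    return 0.5 * sum(abs(a / total_a - b / total_b)
                     for a, b in zip(group_a, group_b))
```

For example, `dissimilarity_index([100, 0], [0, 100])` returns 1.0 (complete segregation) and `dissimilarity_index([50, 50], [50, 50])` returns 0.0.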

Moreover, as privacy protection gets stronger, the proportion of rezoning decisions made by the private assignment that align with those made by the non-private assignment drops (61% for ε = 4 vs. 54% for ε = 2).
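The summary does not spell out exactly how “aligned” is measured, so the sketch below assumes one plausible reading: among the Census blocks that the private assignment moves away from their current school, it computes the share that the non-private assignment moves to the same new school. The function name and its inputs are hypothetical.

```python
def rezoning_agreement(current, non_private, private):
    """Share of the private plan's rezoning decisions matched by the non-private plan.

    Each argument maps a Census block ID to a school ID. A block is "rezoned"
    by a plan when the plan assigns it to a different school than `current`.
    Returns None when the private plan rezones no blocks.
    """
    rezoned = [blk for blk, school in private.items() if school != current[blk]]
    if not rezoned:
        return None
    matches = sum(1 for blk in rezoned if non_private[blk] == private[blk])
    return matches / len(rezoned)


current = {"b1": "A", "b2": "A", "b3": "B", "b4": "B"}
non_private = {"b1": "A", "b2": "B", "b3": "A", "b4": "B"}
private = {"b1": "B", "b2": "B", "b3": "B", "b4": "B"}
print(rezoning_agreement(current, non_private, private))  # 0.5
```

Here the private plan rezones b1 and b2, and only b2 matches the non-private plan, giving 0.5. Other reasonable definitions (for example, agreement over all blocks either plan rezones) would give different numbers.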

Interestingly, we estimate that the impacts of differential privacy on commuting times from home to school are minimal across all racial and ethnic groups.

Last but not least, we find that district-level demographics and baseline segregation levels explain only a small share of the difference between the diversity gains of private and non-private school assignments. This suggests that the particulars of each district’s current boundaries and the geographic distribution of its population will likely determine how much the introduction of differential privacy affects redistricting efforts to foster more diverse and integrated schools.

Between the lines

Our results demonstrate that, as privacy protection strengthens, private school assignments reassign fewer students and yield less diverse attendance boundaries. This finding points to a privacy-diversity trade-off that local education policymakers may face in the coming years, particularly as computational methods play an increasing role in redrawing attendance boundaries.

To conclude this article, we elaborate on two limitations of our work. One, we focus on “average-case” analyses across multiple districts, which may obscure district-level nuances in how much differential privacy is likely to matter. In other words, the impacts of differential privacy on redistricting efforts in a given school district might differ from our findings and depend largely on that district’s characteristics.

Two, our simulations are not based on ground-truth data from an actual school district. Instead, we conduct them based on estimated student counts per Census block, derived from a combination of datasets from the US Department of Education and the US Census (the latter of which has already been released under a disclosure avoidance system).

These limitations underscore the need for researchers to work closely with school districts to deepen the understanding of how differential privacy affects the redrawing of school attendance boundaries. Our team has started to form such collaborations and looks forward to the new research questions and practical applications that await!

References

Abowd, J. M. (2018, July). The US Census Bureau adopts differential privacy. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (p. 2867).

Gillani, N., Beeferman, D., Vega-Pourheydarian, C., Overney, C., Van Hentenryck, P., & Roy, D. (2023). Redrawing Attendance Boundaries to Promote Racial and Ethnic Diversity in Elementary Schools. Educational Researcher.

Kenny, C. T., Kuriwaki, S., McCartan, C., Rosenman, E. T., Simko, T., & Imai, K. (2021). The use of differential privacy for census data and its impact on redistricting: The case of the 2020 US Census. Science Advances, 7(41), eabk3283.

Steed, R., Liu, T., Wu, Z. S., & Acquisti, A. (2022). Policy impacts of statistical uncertainty and privacy. Science, 377(6609), 928-931.