🔬 Research Summary by Maurice Jakesch, a Ph.D. candidate at Cornell University, where he investigates the societal impact of AI systems that change human communication.

[Original paper by Maurice Jakesch, Zana Buçinca, Saleema Amershi, and Alexandra Olteanu]

Overview: AI ethics guidelines argue that values such as fairness and transparency are key to the responsible development of AI. However, less is known about the values a broader and more representative public cares about in the AI systems they may be affected by. This paper surveys a US-representative sample and AI practitioners about their value priorities for responsible AI.

Introduction

Private companies, public sector organizations, and academic groups have published AI ethics guidelines. These guidelines converge on five central values: transparency, fairness, safety, accountability, and privacy. But these values may differ from what a broader and more representative population would consider important for the AI technologies they interact with.

Prior research has shown that value preferences and ethical intuitions depend on people’s backgrounds and personal experiences. Because AI technologies are often developed by relatively homogeneous and demographically skewed groups of practitioners, these practitioners may unknowingly encode their biases and assumptions into how they conceptualize and operationalize responsible AI.

This study develops an AI value survey to understand how groups differ in their value priorities for responsible AI and what values a representative public would emphasize.

Key Insights

The authors draw on empirical ethics and value elicitation research traditions to develop a survey. They ask participants about the importance of 12 responsible AI values in different deployment scenarios and field the survey with three groups:

- A US census-representative sample (N=743) to understand what values a broader public cares about in the AI systems they interact with.

- A sample of AI practitioners (N=175) to test what values those who develop AI systems would prioritize.

- A sample of crowdworkers (N=755) to explore whether they can diversify ethical judgment in the AI development process.

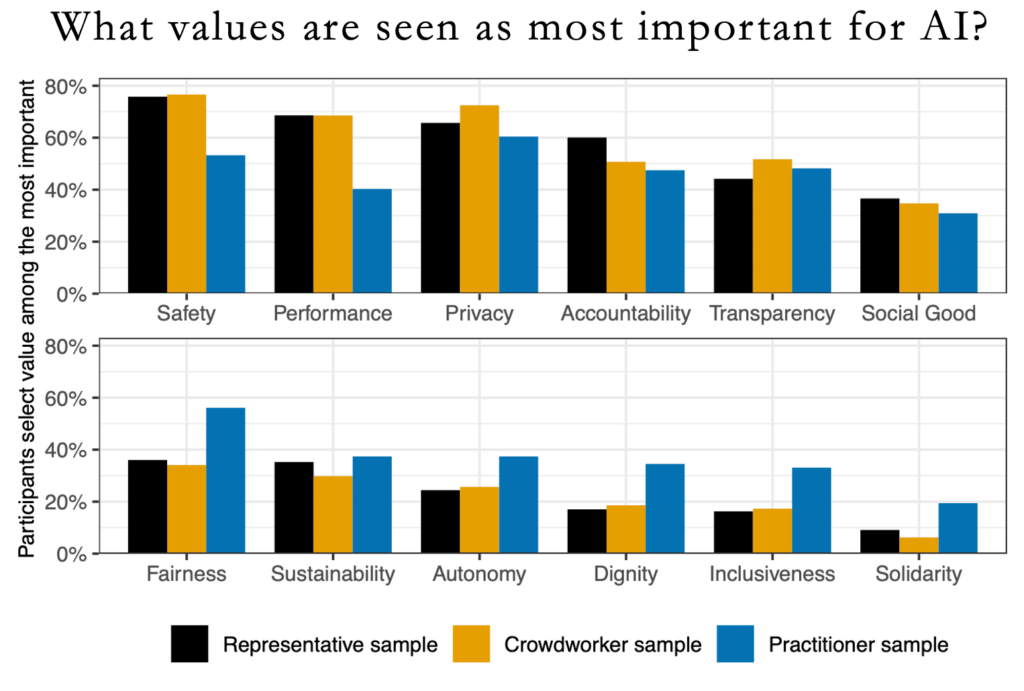

The findings show that different groups perceive and prioritize responsible AI values differently. On average, AI practitioners rated responsible AI values as less important than the other groups did. AI practitioners also prioritized fairness more often than participants from the US census-representative sample, who instead emphasized safety, privacy, and performance. The results highlight the need for AI practitioners to contextualize and probe their ethical intuitions and assumptions.

The authors also find differences in value priorities along demographic lines. For example, women and Black respondents rated responsible AI values as more important than other respondents did. The most contested value trade-off was between fairness and performance. Surprisingly, participants who reported past experiences of discrimination did not prioritize fairness more than others. By contrast, liberal-leaning participants prioritized fairness, while conservative-leaning participants tended to prioritize performance.

Between the lines

The results empirically corroborate a commonly raised concern: AI practitioners’ priorities for responsible AI are not representative of the value priorities of the wider US population. Different groups not only differ in how they judge specific behaviors and technical details but also disagree on the importance of the values at the core of responsible AI.

The disagreement in value priorities highlights the importance of paying attention to who defines what constitutes “ethical” or “responsible” AI. AI ethics guidelines may emphasize different values depending on who writes them and who is consulted. Representation matters, and consulting populations outside the West about their priorities for responsible AI would likely surface even starker disagreement about what responsible AI should be about.