By Bogdana Rakova (@bobirakova), Research Fellow at Partnership on AI, Data Scientist at Accenture.

The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems (A/IS) is a community of experts collaborating on comprehensive, crowd-sourced, and transdisciplinary efforts that aim to better align AI-enabled technology with society’s broader values. The collaborative work of these experts on Ethically Aligned Design [1] has given rise to 15 working groups, each dedicated to a specific aspect of the ethics of A/IS. One of these working groups, P7010, has published IEEE 7010-2020, the IEEE Recommended Practice for Assessing the Impact of Autonomous and Intelligent Systems on Human Well-Being. It puts forth “wellbeing metrics relating to human factors directly affected by intelligent and autonomous systems and establishes a baseline for the types of objective and subjective data these systems should analyze and include (in their programming and functioning) to proactively increase human wellbeing” [2]. The impact assessment proposed by the group could provide practical guidance and strategies that “enable programmers, engineers, technologists and business managers to better consider how the products and services they create can increase human well-being based on a wider spectrum of measures than economic growth and productivity alone” [3].

Why is the question of metrics relevant during and beyond the COVID-19 crisis response efforts?

Many scholars around the world have raised difficult questions about the implementation of contact tracing and other technologies that corporations and governments are using in their outbreak response efforts. As part of its response, the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems called for new metrics for success “in order for such systems to remain human-centric, serving humanity’s values and ethical principles” [4]. Similarly, in his recent call to action, French philosopher Bruno Latour calls for new metrics, which he describes as “protective measures” [5]. He refers to germs as super-globalizers and writes about what it would mean for us to become efficient globalisation interrupters through questioning. This could lead us to new forms of political expression, “just as effective, in our millions, as the infamous coronavirus as it goes about globalising the planet in its own way” [5]. The act of questioning, embodied and situated in the real world, could be facilitated by what he calls protective measures:

“not just against the virus, but against every element of the mode of production that we don’t want to see coming back. So, it is no longer a matter of a system of production picking up again or being curbed, but one of getting away from production as the overriding principle of our relationship to the world” [5].

The intersection of AI and community well-being

Questioning our relationship to the world calls for a better understanding of the role A/IS play in how we interact with it. Comprehensive metrics frameworks such as the one proposed by IEEE P7010 could yield new insights into the impacts of A/IS if they are applied in concrete contexts and if the lessons learned are shared in collaborative and generative ways. One invitation for researchers interested in such applied work is a forthcoming special issue of the International Journal of Community Well-Being, published by Springer. It aims to explore the emerging intersection of AI and community well-being by investigating the following questions:

- What well-being frameworks do we need in order to guide the development, deployment, and/or operation of AI systems for the benefit of humanity? How do they allow communities to participate? What could the history of communities around the world teach us about AI? What opportunities could AI help us benefit from, and how?

- How can AI protect community well-being from threats (climate change, economic inequality, gender inequality, interference with the democratic process, mental illness, etc.)?

- How is the use of AI itself a threat to community well-being? What can a community do to mitigate, manage, or negate the threat? Threats may include unemployment, income inequality, harms to psychological well-being (e.g., sense of purpose) and safety, human rights violations, etc.

Going Forward

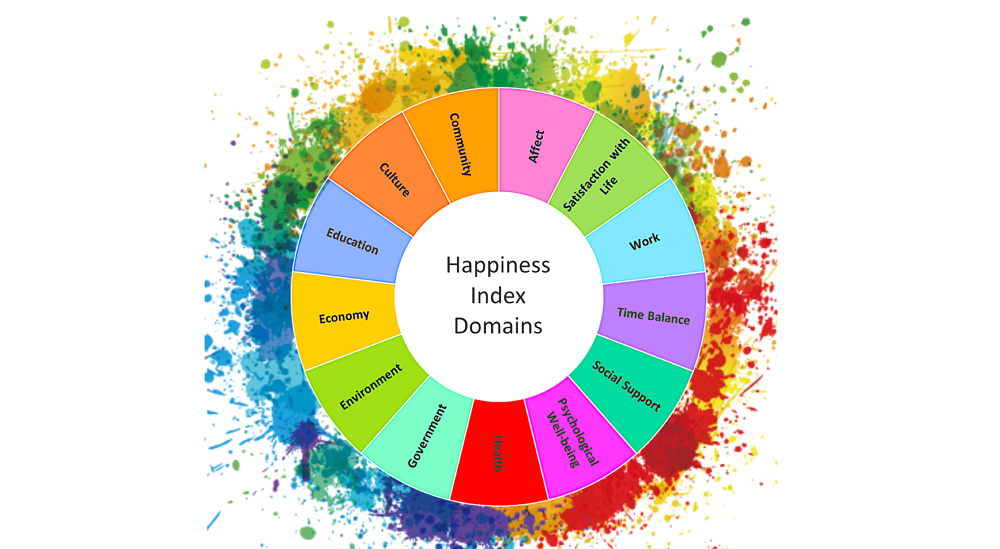

Moments of crisis remind us of what is most important to us. They create space for us to reconsider the personal as well as the societal choices we make in each of the domains of happiness summarized by the Happiness Alliance in the graphic above.

Ultimately, enabling and demanding diverse voices in every aspect of technology development could bring about tremendous positive impacts on the well-being of people and the planet. New forms of cooperation and collaboration, through the implementation of guidelines, best practices, metrics frameworks, and other efforts, could empower everyone to participate in the way technology is transforming our lived experience.

References:

- [1] The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. (2019). Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems, First Edition. IEEE.

- [2] Approved PAR 7010-2020 – IEEE Recommended Practice for Assessing the Impact of Autonomous and Intelligent Systems on Human Well-Being.

- [3] Musikanski, L., Havens, J., & Gunsch, G. (2018). IEEE P7010 Well-being Metrics Standard for Autonomous and Intelligent Systems: An Introduction. Piscataway, NJ: IEEE Standards Association.

- [4] The Executive Committee of The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. (2020). Statement Regarding the Ethical Implementation of Artificial Intelligence Systems (AIS) for Addressing the COVID-19 Pandemic.

- [5] Latour, B. (2020). What protective measures can you think of so we don’t go back to the pre-crisis production model? Unpublished manuscript.