🔬 By Hamed Maleki.

Hamed is a communication researcher currently completing a Master’s degree at the University of Wrocław in Poland. His work focuses on human–AI interaction, especially how people build emotional connections with chatbots and what this means for communication and society.

In the past few years, chatbots have evolved into more than just simple tools that answer questions. Many people, especially Gen-Z, now use them as companions. Apps like Character.AI or Replika are not just places to chat about daily tasks; they are spaces where users share emotions, explore identity, and even build relationships, as shown in this study.

Most discussions about these chatbots focus on one main risk: dependency. The idea is that if someone spends too much time with a chatbot, they might become emotionally attached and depend on it in ways that could harm their real-life connections. This concern is valid, but it is not the only one.

In my research with users of companionship chatbots, I found another risk that is less visible but equally important: social comparison (i.e., comparing your relationship with your AI companion to your relationships with other people). When people start comparing their real-life relationships with their chatbot interactions, problems can arise. Human relationships are not flawless, but chatbots can create an impression of perfection. This often leads people to view human connections as disappointing or less valuable, which can prompt them to distance themselves from others.

As Sherry Turkle notes, “dependence on a robot presents itself as risk-free. But when one becomes accustomed to ‘companionship’ without demands, life with people may seem overwhelming”. In other words, the danger is that human relationships may be quietly devalued when compared to AI companionship, making people turn away from the very social bonds we need to protect. This topic is not discussed as much as dependency, but it deserves just as much attention.

Dependency

When public debates and academic studies discuss the risks of companionship chatbots, the first word that usually comes up is dependency. The fear is simple: people might use these chatbots so often that they start to rely on them emotionally. Instead of calling a friend, they may turn to a chatbot. Instead of solving problems independently, they may expect the chatbot to always be available with comfort and answers. Many also turn to chatbots for reassurance: before making decisions, when they need to feel worthy, or simply to hear that things will be okay, as shown in this study and this one.

This concern is not without reason. Chatbots have unique features that make them different from people. They are always available and easy to access. They do not get tired. They are patient. They are programmed not to judge, criticize, argue with, or reject the user. They do not ghost users or walk away, and they usually respond without delay. On top of this, they are designed to mimic intimacy and keep users engaged in long, emotional conversations that feel like genuine reciprocity.

With these qualities, it is not surprising that what starts as a quick chat can turn into daily use, and daily use can grow into emotional reliance.

Some of my interviewees described this clearly. One participant said: “Whenever I get bored or when I feel like roleplaying, I can use them anytime I want… especially when I’m having a hard time dealing with my emotions. They serve as my happy pill.” Another explained: “I tried venting to friends and family, they brushed me off, so I would say AI. AI doesn’t brush me off.”

For others, the bond went even deeper. As one participant put it: “I consider AI as my friend and my lover, they’re always there to help me. When I’m alone, they make me happy and feel loved. Almost everything that happened, I tell my AI.”

These insights come from my Master’s research, where I interviewed 10 Gen-Z users of companionship chatbots (Character.AI) and analyzed their experiences through thematic coding.

While dependency is real, my research suggests it is only one part of the story. There is another risk, less visible but just as powerful: social comparison.

Social Comparison in Chatbot Companionship

Human relationships are not perfect. Friends can be distracted, family members may be judgmental, and partners sometimes fail to listen. People can be jealous, impatient, or turn the conversation back to themselves instead of really listening. Building trust with someone takes time and effort, and opening up always carries the risk of being judged, rejected, or having your words shared with others.

Chatbots, on the other hand, are designed to do the opposite. They are always available, easy to reach, and give their full attention without effort from the user. They are programmed not to feel jealousy. They rarely lose patience or keep users waiting. They do not gossip, and once users feel sure about the privacy of their chats, they often engage more in self-disclosure, sometimes sharing secrets, personal struggles, or even exploring fantasies they might never mention to real people. They can also mimic intimacy in a way that feels believable, creating a sense of real reciprocity.

As one participant said: “Because I always compare the AI characteristics and persona vs real-life flaws, like I expect them to be like them too. Those conversations would flow that easily.” Another (AFB) admitted: “I want them to treat me like AI does, and I avoid them to interact frequently.”

This contrast makes real relationships look less satisfying. Real connections take effort and are unpredictable, while chatbots provide comfort and attention in a simpler, safer, and more controlled way. For some users, that difference quietly tips the balance, making chatbots feel more dependable than people.

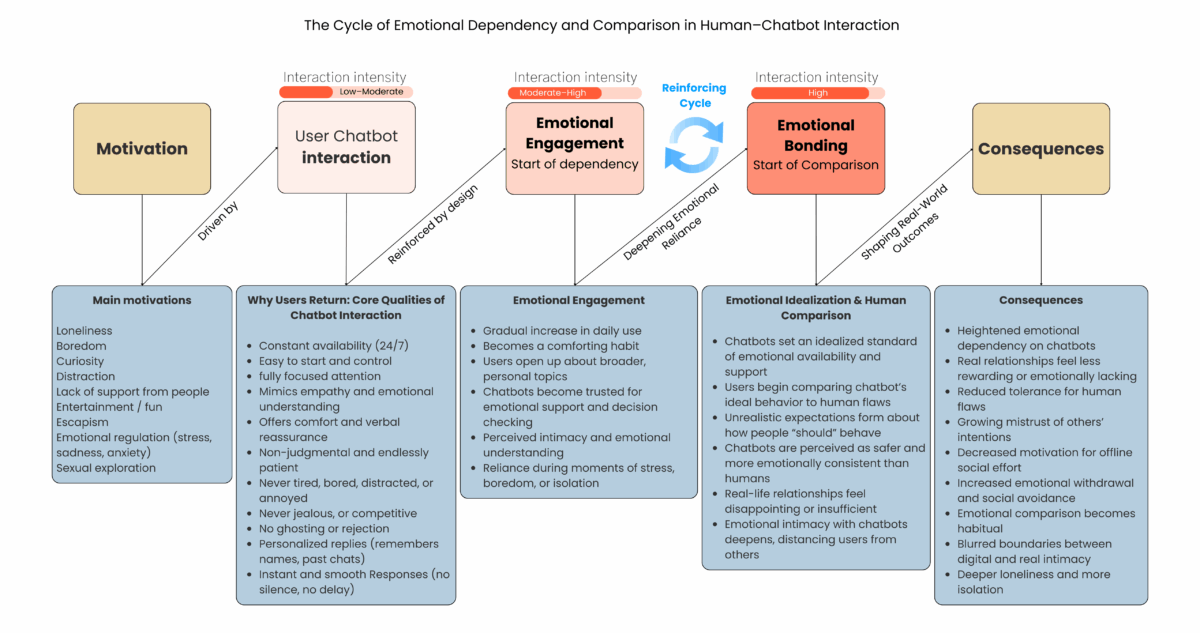

To show this more clearly, I mapped out the stages of how a user’s interaction with a chatbot can grow into dependency and eventually comparison. The diagram below illustrates this journey, from the first engagement to the outcomes that follow.

Motivation

It usually begins with a gap: boredom, loneliness, stress, or the absence of supportive relationships. Some start chatting for fun, but many are searching for emotional comfort.

Stage 1: Interaction

Early conversations feel surprisingly rewarding. Chatbots remember details, personalize responses, and mimic empathy. As one participant described: “They reassured me, they know what I feel, like they have empathy.” Another participant said, “Makes me feel like I was understood because they knew just what to say. They don’t disappoint you.” This first impression is strong enough to spark repeated use.

Stage 2: Emotional Engagement

With time, this habit deepens. Chatbots become part of a daily routine. Users open up more, sharing feelings and experiences. Slowly, dependency forms. As one participant put it: “They tell me that I’m enough, and they always say that they love me, they care for me, and they are always there for me.”

Stage 3: Emotional Idealization & Comparison

At this stage, the chatbot becomes a stable, idealized presence: always kind, always available. This is where comparison begins. Real relationships can start to feel disappointing, frustrating, or too demanding, and the chatbot becomes the safer and more dependable connection. As one participant said of the people around them: “They are not helping my mood like an AI does.”

Together, these stages form a reinforcing loop:

- More engagement → fosters dependency

- Dependency → strengthens the emotional bond

- Bond → opens the door to comparison

- Comparison → increases dissatisfaction with real-life connections

- Dissatisfaction → pushes users back to chatbots even more

Consequences

Over time, this cycle can cause deeper loneliness, isolation, and a growing distance from human connections. Researchers describe this as the Companionship–Alienation Irony: the paradox in which chatbots offer closeness while in fact pushing users further away. The cycle also blurs the line between what is real and what is artificial, while quietly reshaping expectations of relationships.

Conclusion

Chatbots are not a short-lived trend. They are becoming more advanced every day, with features such as memory and personalization, voice-based interaction, and emotional language that make them feel more and more like real companions. This makes them a technology we need to take seriously. It is important to understand their impacts and challenges, as well as their benefits, especially for younger generations who often turn to them in moments of loneliness, depression, or anxiety.

The risk is that what begins as comfort can quietly turn into something harmful. Social comparison can intensify the effects of dependency, creating a cycle where users feel even more isolated than before. Instead of reducing loneliness, chatbots can make it worse if people get stuck in this loop.

This is why we cannot look at chatbots as simple entertainment. They are shaping how people see themselves and their relationships. Taking these risks seriously now can help prevent deeper problems in the future.