Written by Connor Wright, research intern at FairlyAI, a software-as-a-service AI audit platform that provides AI quality assurance for automated decision-making systems with a focus on AI equality. He is also a researcher here at MAIEI.

The classic 1990s adage “On the internet, nobody knows you’re a dog” has evolved. Where it once meant that nobody could tell you were a different kind of being, it now asks whether the someone you’re interacting with actually exists. The rise of chatbots has called into question what the very core of a conversation ought to be, and has raised ethical issues alongside it. So, what actually is a chatbot? Who has taken this issue seriously? Can chatbots actually be a force for good? I’ll answer all three of these questions throughout this discussion.

To start with, a useful distinction can be drawn in the chatbot arena: between chatbots and social bots. A chatbot is a bot that engages in direct dialogue with a single human counterpart (such as a virtual assistant on a website). A social bot is not directly involved in dialogue with a human; instead, it is orientated towards disseminating content (such as automated profiles spreading fake news on Facebook and Twitter). What makes this distinction even more interesting is its role in the most prominent piece of chatbot legislation I could find: California’s Bolstering Online Transparency Act.

The Act (hereafter, the California Act), which took effect in July 2019, was designed to help combat chatbots’ capacity to deceive. Chatbots had been able to spread false information at alarming rates, and had often convinced people to make political or business decisions in the belief that they were talking to a human. The risks chatbots pose therefore centre on their ability to spread false information that can go on to harm society.

The California Act aims to mitigate this. It defines a bot as an online account where all, or substantially all, of the content is not the result of a person. The Act then targets bots that interact with people in order to deceive them about their artificial identity, for example to influence a purchase or a vote. Some may argue that social bots are excluded, since they don’t actually interact with humans but simply feed them content. However, they would be wrong: because social bots are intended to have their content acted upon by humans, they too fall within the Act’s remit. Above all, any automated account that seeks to deceive a person in California in this way will have to answer to the California Act. So how does the Act accomplish this?

The proposed solution is devilishly simple. Whenever a chatbot engages with a human, its first message has to contain a clear and conspicuous declaration that the chatbot is indeed a chatbot. For example, a message such as “Hey, how can I help you?” doesn’t cut it. Instead, the chatbot ought to say something along the lines of “Hi, I’m Connor, your automated virtual assistant. How may I help you?”. To prevent this requirement from being sidestepped, the FTC issued some guidelines on how it ought to be followed. For example, the font and colour of the disclosure text must be clear, and the customer should not have to scroll to find the disclosure. Given the problem this legislation addresses, are chatbots only ever designed to deceive?

Fortunately, there is a positive side to the emergence of chatbots. Their automated nature has freed businesses to spend valuable time on more pressing issues rather than on time-consuming admin tasks. Chatbots also let businesses provide 24/7 customer care: virtual assistants can answer brief customer queries at any time of day and in any time zone. This increases customer satisfaction, reduces hold times on the phone and eases the workload on customer service teams.

Furthermore, chatbots can now be trained to recognise common typos that customers make. Providing data sets of words and their associated typos lets a virtual assistant accommodate customers with differing language skills, and spares it from having to ask customers to repeat themselves, which causes frustration. The California Act’s disclosure requirement also lets customers know in advance that they may need to phrase their messages slightly differently, which further reduces such frustration. In this way, chatbots can be seen as augmentative and not just deceitful.
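To make the typo-handling idea more concrete, here is a minimal Python sketch. It assumes a small, hypothetical typo data set (`TYPO_MAP`) and vocabulary (`VOCABULARY`); these names and words are illustrative and not taken from any real product. Known typos are looked up directly, and anything else falls back to fuzzy matching with the standard library’s `difflib.get_close_matches`. That fallback is a simple swapped-in technique, not the trained models a production assistant would likely use.

```python
import difflib

# Hypothetical typo data set: each known typo maps to the word the customer meant.
TYPO_MAP = {
    "recieve": "receive",
    "adress": "address",
    "pasword": "password",
}

# Hypothetical vocabulary of words the assistant understands.
VOCABULARY = {"receive", "address", "password", "refund", "order", "delivery"}


def normalise_word(word: str) -> str:
    """Map a possibly misspelled word to a known word, or return it unchanged."""
    lowered = word.lower()
    if lowered in VOCABULARY:
        return lowered
    # First, check the explicit typo data set.
    if lowered in TYPO_MAP:
        return TYPO_MAP[lowered]
    # Otherwise, fall back to fuzzy matching for typos not in the data set.
    matches = difflib.get_close_matches(lowered, VOCABULARY, n=1, cutoff=0.8)
    return matches[0] if matches else word


def normalise_message(message: str) -> str:
    """Normalise every whitespace-separated word in a customer message."""
    return " ".join(normalise_word(w) for w in message.split())


if __name__ == "__main__":
    print(normalise_message("I did not recieve my ordr"))
```

Here “recieve” is corrected from the typo data set and “ordr” is matched to “order” by the fuzzy fallback, so the assistant can act on the message without asking the customer to repeat it. Words with no close match are passed through untouched rather than being forced onto the vocabulary.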

On the internet, nobody knows you’re a chatbot, unless you’re in California. While spreading false information through automated accounts and misleading people online are still rife, the California Act shows a positive way forward in helping the public navigate the issue. Chatbots have already demonstrated their ability to augment the human experience, especially in the business arena. So, while chatbots can be abused, it is also possible to combat this and truly harness the benefits chatbots can bring.

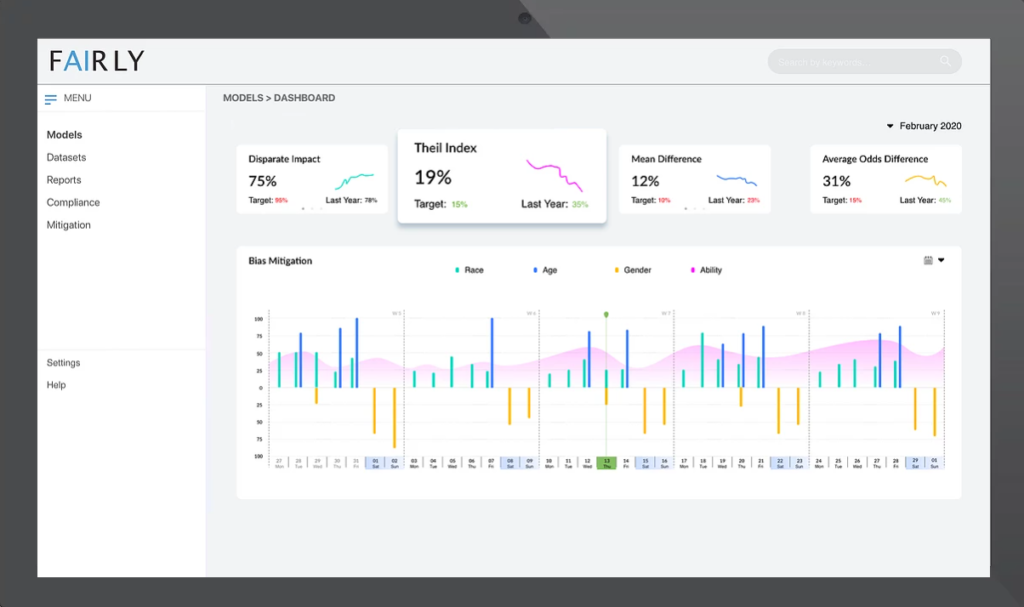

Fairly’s mission is to provide quality assurance for automated decision-making systems. Their flagship product focuses on providing an easy-to-use tool that researchers, startups and enterprises can use to complement their existing AI solutions, regardless of whether they were developed in-house or with third-party systems. Learn more at fairly.ai.