🔬 Research Summary by Rudy Arthur, a Senior Lecturer in Data Science at the University of Exeter.

[Original paper by Rudy Arthur]

Overview: What3Words (W3W) is a geocoding app that has been aggressively marketed in the UK and globally. Police, ambulances, and other emergency services encourage people to use W3W to report their locations. The findings of my work suggest that things can go wrong with W3W addresses far more often than the company claims, due to intrinsic flaws in its algorithm. The use of W3W as part of critical services should therefore be questioned.

Introduction

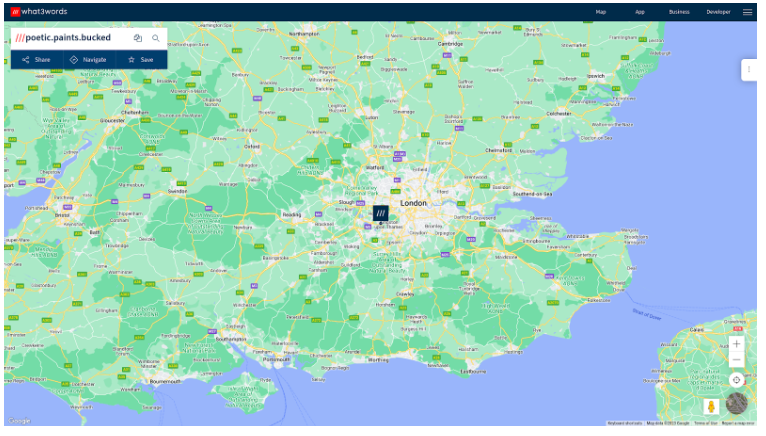

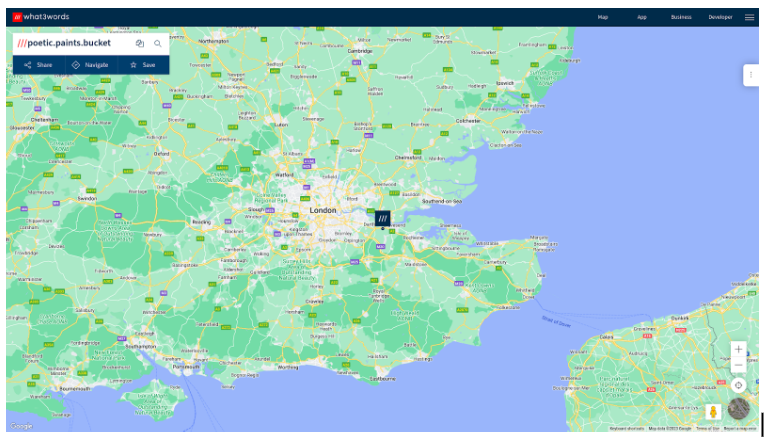

You’re trying your best not to panic as you describe the accident you just witnessed to the dispatcher, but they reassure you that the ambulance is only five minutes away from your location, which, according to the What3Words app, is poetic.paints.bucked.

Unfortunately, the ambulance is actually five minutes away from poetic.paints.bucket.

What3Words (W3W) is an app that aims to help people relay their location in an easy and accurate way. To quote the company:

We have divided the world into 3m squares and given each square a unique combination of three words. what3words addresses are easy to say and share, and as accurate as GPS coordinates.

51.520847, −0.19552100 ↔ /// filled.count.soap

What3Words

Key Insights

The scenario I have in mind is the following: you open up the What3Words app and press a button to get your location. It says you are in Birmingham, UK, at the location gear.fines.larger. You then transmit that location by saying it out loud or typing it [1]. The other person records your address and could then pass it on to another person or along a chain of several people until, finally, someone inputs the address into the W3W app to identify your location. At each one of these steps, there is a possibility for confusion, that is, getting one of the words wrong, which results in a wrong address.

Global Confusion

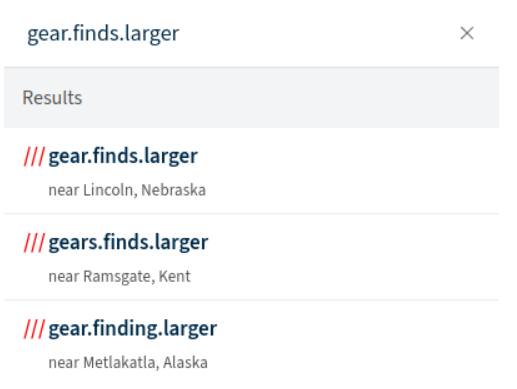

In the paper, I analyzed two types of confusion. The first is when the received location is very far from the transmitted location. This is actually what W3W is aiming for. If you call an ambulance in Birmingham, UK, and they receive an address in Australia, it will be obvious this is wrong. However, even if they know you’re not in Australia, they still need your location! If you type any address into the W3W search bar, it will offer three suggestions. An example might make this clearer. My location is gear.fines.larger, but the receiver hears gear.finds.larger. Typing this into the search bar gives:

The true address isn’t there! Hopefully, all of these suggestions are far enough away that it’s obvious I’m not at any of them (Kent is in the UK but reasonably far from Birmingham), and hopefully I’m still on the line so that they can ask me to give my address again.

To understand how often this can happen, I asked what proportion of addresses have more than three other addresses with which they could be confused. A conservative estimate, considering only a subset of the ways an address could be confused, is that around 25% of addresses have four or more ‘confusions’ and are vulnerable to this issue.
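To make the counting concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the vocabulary is a tiny made-up set (the real W3W word list is not public), and "confusable" is taken to mean "within one letter insertion, deletion, or substitution", which is only one possible rule and not necessarily the one used in the paper.

```python
from itertools import product

# Hypothetical toy vocabulary, for illustration only.
VOCAB = {"gear", "fines", "finds", "fine", "larger", "large", "bucket", "bucked"}


def within_one_edit(a: str, b: str) -> bool:
    """True if a and b are equal or differ by one insertion, deletion, or substitution."""
    if a == b:
        return True
    if abs(len(a) - len(b)) > 1:
        return False
    if len(a) == len(b):
        return sum(x != y for x, y in zip(a, b)) == 1
    short, long_ = (a, b) if len(a) < len(b) else (b, a)
    # Deleting exactly one character from the longer word must give the shorter one.
    return any(long_[:i] + long_[i + 1:] == short for i in range(len(long_)))


def confusions(address: str) -> set[str]:
    """All OTHER valid addresses whose words are each within one edit of the original."""
    words = address.split(".")
    options = [[v for v in VOCAB if within_one_edit(w, v)] for w in words]
    return {".".join(combo) for combo in product(*options)} - {address}


found = confusions("gear.fines.larger")
print(len(found), sorted(found))
```

With this toy vocabulary, "fines" has two confusable neighbours and "larger" has one, so the address has 1 × 3 × 2 − 1 = 5 confusions. That is already more than the three suggestions the search bar offers, which is the situation described above.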

Local Confusion

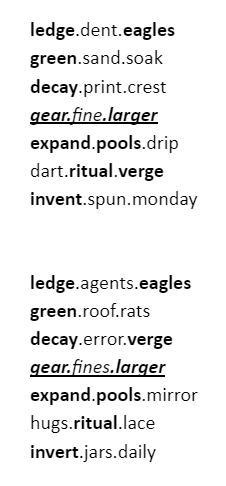

The second type of confusion is the one W3W wants to avoid: two similar addresses close together. For example, the locations gear.fines.larger and gear.fine.larger are around 1 mile apart. These could easily be confused, and the confusion would not be obvious at all. The question is, how often does it happen that two similar addresses are close together? The answer is: much more often than it should. The reason is a little technical and lies in the algorithm W3W uses to randomize the addresses. The randomization algorithm is a linear congruence, and while the outputs look random, there is a lot of non-random structure. In particular, there can be long ‘runs’ of similar addresses that don’t get mixed properly.

To understand, let’s look at the W3W addresses immediately next to gear.fines.larger and gear.fine.larger. These are:

W3W uses around 40,000 unique words. If the addresses were truly random, we wouldn’t have so many words in common between nearby areas in the same city. The fact that many nearby addresses share one or two words greatly increases the chances of a nearby confusing pair. For those interested in the details, a simple mathematical argument is given in the paper to show why the randomization algorithm results in this behavior.
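The flavour of the problem can be seen in a toy model. The Python sketch below is not W3W's algorithm: the vocabulary size, the multiplier `A`, and the increment `C` are hypothetical values chosen to make the effect easy to see, and cells are laid out on a line rather than a 2D grid. It maps consecutive cell indices through a linear congruence, splits the result into three word indices, and compares how many words neighbouring cells share against a genuinely random shuffle.

```python
import random

W = 10          # toy vocabulary size (W3W uses around 40,000 words)
N = W ** 3      # number of possible three-word addresses
A, C = 201, 7   # hypothetical multiplier and increment, picked for illustration


def to_words(m: int) -> tuple[int, int, int]:
    """Split an integer in [0, N) into three word indices (base-W digits)."""
    return (m % W, (m // W) % W, m // (W * W))


def lcg_address(i: int) -> tuple[int, int, int]:
    """Address of cell i under the linear congruence m = (A*i + C) mod N."""
    return to_words((A * i + C) % N)


def mean_shared_between_neighbours(addresses) -> float:
    """Average number of word positions shared by consecutive addresses."""
    pairs = list(zip(addresses, addresses[1:]))
    shared = sum(sum(x == y for x, y in zip(p, q)) for p, q in pairs)
    return shared / len(pairs)


lcg = [lcg_address(i) for i in range(N)]

# Baseline: a properly shuffled assignment of the same N addresses.
perm = list(range(N))
random.Random(0).shuffle(perm)
shuffled = [to_words(m) for m in perm]

lcg_mean = mean_shared_between_neighbours(lcg)
shuffled_mean = mean_shared_between_neighbours(shuffled)
print(f"LCG: {lcg_mean:.2f} shared words per neighbouring pair")
print(f"Shuffled: {shuffled_mean:.2f} shared words per neighbouring pair")
```

In this toy setup, neighbouring cells always differ by the same constant `A` before the split into words, so the middle word only changes when the first one "carries over". Neighbours therefore share a word about 0.9 times per pair, against an expectation of about 0.3 for the shuffled baseline. The real system is larger and two-dimensional, but the paper's argument is of the same kind: the outputs of a linear congruence look random while retaining regular structure.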

Between the lines

I understand the appeal of What3Words. It’s more fun to say three random words than something like a Grid Reference or a postcode. However, that is not a good enough reason to use W3W for important applications like emergency services. The problems discussed are not just due to a few confusing addresses. There are systematic problems at the heart of the app. There are many ways these issues could be solved (for example, a checksum); however, the commercial and closed-source nature of the application means that we have to rely on W3W for the fix, which they do not seem inclined to provide.
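As an illustration of what a checksum could look like, here is a minimal Python sketch. It is purely hypothetical: the word list, the idea of appending a fourth "check word", and the function names are my assumptions for this example, not something W3W offers or has proposed. The check word is chosen from the sum of the three word indices, so mishearing any single word produces an address that fails validation instead of silently pointing somewhere else.

```python
# Hypothetical word list; a word's position in the list is its index.
WORDS = ["gear", "fines", "finds", "fine", "larger", "large", "bucket", "bucked"]
INDEX = {w: i for i, w in enumerate(WORDS)}


def check_word(address: str) -> str:
    """Check word derived from the sum of the word indices, modulo the list size."""
    total = sum(INDEX[w] for w in address.split("."))
    return WORDS[total % len(WORDS)]


def with_checksum(address: str) -> str:
    """Append the check word as a fourth word."""
    return f"{address}.{check_word(address)}"


def is_valid(extended: str) -> bool:
    """True if the last word is the correct check word for the words before it."""
    *words, check = extended.split(".")
    if check not in INDEX or any(w not in INDEX for w in words):
        return False
    return check_word(".".join(words)) == check


sent = with_checksum("gear.fines.larger")
heard = sent.replace("fines", "finds")   # one word misheard in transmission
print(sent, is_valid(sent))
print(heard, is_valid(heard))
```

Because two different words always have different indices, replacing any one word changes the sum modulo the list size, so every single-word substitution is caught. A plain sum would not catch two words being swapped, and the check word itself can be misheard, so a practical scheme would need more care; the point is only that cheap error detection is possible.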

Software, especially software used in matters of life and death, should be subject to the same rigorous testing and evaluation standards as any other product, from car tires to climbing rope. Even for less serious uses, like delivering mail, relying on a private company to provide addresses (as has apparently been done in Mongolia) is a dubious proposition. For emergency applications, my concern is that the popularisation of W3W could discourage the adoption of more reliable alternative systems like AML (which automatically sends the user’s location to emergency services without any user input required) or Grid Reference (which is printed on almost all UK maps). Until the problems with the algorithm have been fixed and W3W has been shown to work reliably under stressful conditions, it should only be used.with.caution.

Notes

[1] There is a way to directly share a link using the app, though there is little benefit in using W3W in this case compared to, say, Google Maps. The point of W3W is that addresses are “easy to say and share.”