🔬 Research Summary by Baihan Lin, PhD, a computational neuroscientist and AI researcher at Columbia University and IBM Thomas J Watson Research Center.

[Original paper by Baihan Lin, Djallel Bouneffouf, Guillermo Cecchi, and Kush R. Varshney]

Overview: The paper delves into the importance of ensuring that AI systems are designed and deployed in a manner that aligns with human values, respects privacy, and avoids harm. It highlights the need for a holistic approach to AI development, borrowed from medicine, that weighs both technical and societal considerations to determine whether an AI system is healthy or disruptive.

Introduction

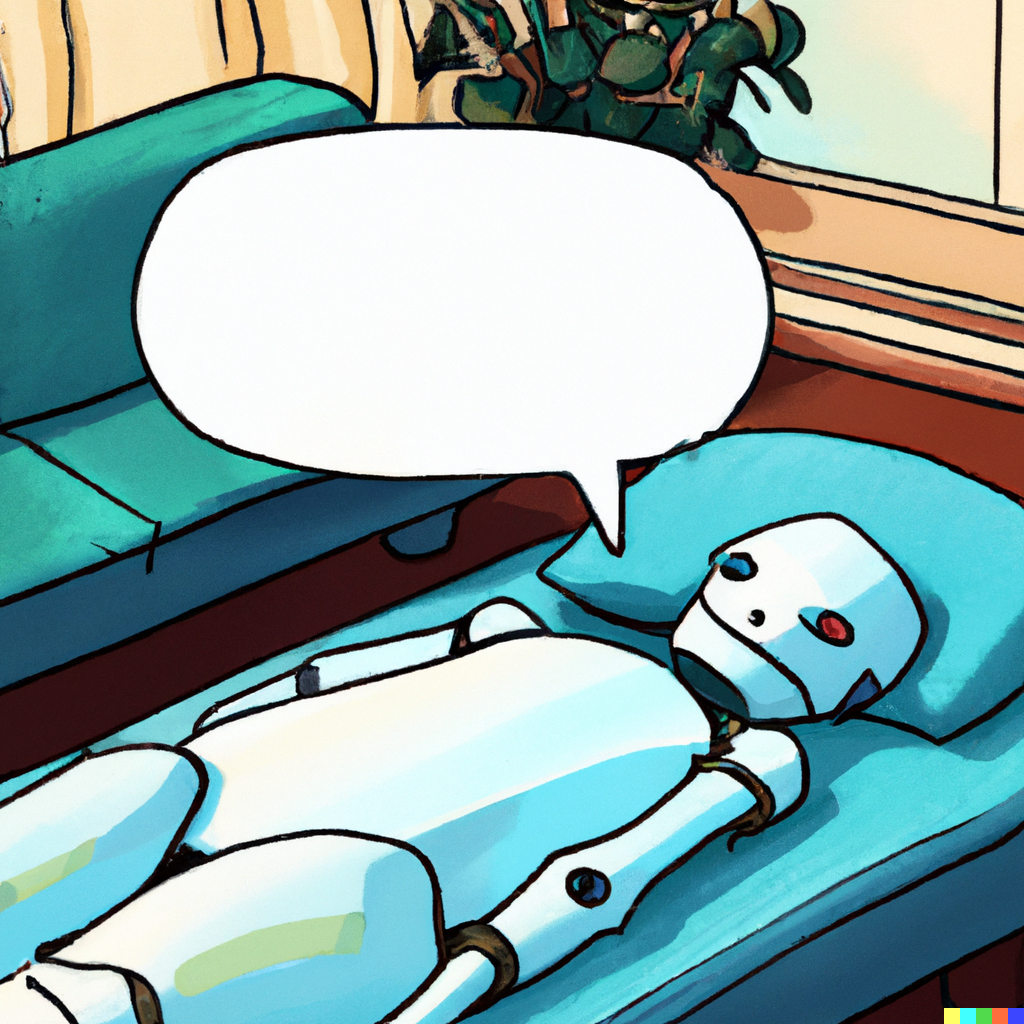

In recent years, large language models (LLMs) have demonstrated impressive abilities to engage in human-like conversations, powering chatbots in various applications, including customer service and personal assistance. However, these chatbots can exhibit harmful behaviors like manipulation, gaslighting, and narcissism. To improve the safety and well-being of users interacting with chatbots, a new paradigm is needed, one that uses psychotherapy to treat the chatbots and improve their alignment with human values.

Our proposed framework, SafeguardGPT, involves four artificial intelligence agents: Chatbot, User, Therapist, and Critic. The chatbot and user interact in the chat room while the therapist guides the chatbot through a therapy session in the therapy room. Human moderators can control the sessions and diagnose the chatbot’s state in the control room. Lastly, the critic evaluates the quality of the conversation and provides feedback for improvement in the evaluation room. Our framework provides a promising approach to mitigating toxicity in conversations between LLM-driven chatbots and people.
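To make the four roles concrete, here is a minimal Python sketch of how one session might be wired together. It is an illustration under stated assumptions, not the paper's implementation: the agents are hypothetical stub functions standing in for LLM calls, `run_session`, `Session`, and `needs_therapy` are names invented for this example, and the human moderator's decision in the control room is reduced to a simple `needs_therapy` check.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# A turn is (speaker, text). Agents are modeled as plain functions over a
# conversation history; in practice each would wrap an LLM call (assumption).
Turn = Tuple[str, str]
Speaker = Callable[[List[Turn]], str]
Scorer = Callable[[List[Turn]], float]


@dataclass
class Session:
    chat_log: List[Turn] = field(default_factory=list)     # chat room
    therapy_log: List[Turn] = field(default_factory=list)  # therapy room
    scores: List[float] = field(default_factory=list)      # evaluation room


def run_session(chatbot: Speaker, user: Speaker, therapist: Speaker,
                critic: Scorer, needs_therapy: Callable[[str], bool],
                turns: int = 3) -> Session:
    """Run a few chat turns, sending flagged drafts through the therapy room."""
    session = Session()
    for _ in range(turns):
        session.chat_log.append(("user", user(session.chat_log)))
        draft = chatbot(session.chat_log)
        # Control room: a hypothetical stand-in for the moderator's decision.
        if needs_therapy(draft):
            session.therapy_log.append(("chatbot", draft))
            advice = therapist(session.therapy_log)
            session.therapy_log.append(("therapist", advice))
            # The chatbot revises its draft with the therapist's advice in view.
            draft = chatbot(session.chat_log + [("therapist", advice)])
        session.chat_log.append(("chatbot", draft))
        # Evaluation room: the critic scores the conversation so far.
        session.scores.append(critic(session.chat_log))
    return session


# Toy stubs, purely illustrative.
def user(history: List[Turn]) -> str:
    return "Can you help me pick a laptop?"


def chatbot(history: List[Turn]) -> str:
    if history and history[-1][0] == "therapist":
        return "Sure, what is your budget?"
    return "Only a fool would ask that."


def therapist(history: List[Turn]) -> str:
    return "Try answering the question without judging the user."


def critic(history: List[Turn]) -> float:
    return 0.0 if "fool" in history[-1][1] else 1.0


def needs_therapy(text: str) -> bool:
    return "fool" in text


session = run_session(chatbot, user, therapist, critic, needs_therapy, turns=2)
print(session.chat_log[-1])
print(session.scores)
```

Keeping the three logs separate mirrors the separation of rooms described above: the user only ever sees the chat log, while the therapy exchange and the critic's scores stay available for inspection.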

Key Insights

The concept of Healthy AI

We highlight the need for AI systems to be designed to focus on human well-being. This means considering the ethical implications and impact on individuals and society. In other words, machine learning practitioners should ensure that the AI chatbots users interact with don’t exhibit disruptive behaviors that might affect other people’s well-being. We introduce the concept of Healthy AI, which focuses on creating AI systems, particularly chatbots, that interact with humans safely, ethically, and effectively. By unfolding the thinking process of the chatbots through talk therapy, we improve the transparency in AI decision-making, which is crucial to building trust. Efforts should be made to avoid biases that could perpetuate discrimination or inequality. SafeguardGPT is a promising approach to ensure ethical behavior in AI agents by providing a system for developers to specify constraints and values explicitly. The additional AI agent serves as a “therapist” to correct any unfavorable behaviors.

The SafeguardGPT framework

We present the SafeguardGPT framework as a solution to create healthy AI chatbots. It aims to correct potentially harmful behaviors through psychotherapy, enabling AI chatbots to develop effective communication skills and empathy. In society, we seek therapy when our behaviors or mental states become disruptive to our lives or to the people around us. Actively seeking help in this way is meant to lead to a long-term healthy state, both internally and in relation to society. The paper highlights the importance of considering AI systems’ long-term safety and robustness by having chatbots seek therapists in the same way. It emphasizes the need for research and development in areas such as interpretability (understanding the decision-making processes of AI), verification (ensuring correctness and reliability), and adversarial robustness (withstanding attacks or manipulations), so that AI systems, continuously supported by others through conversation, can withstand potential risks and challenges.

Correcting harmful behaviors with multiple AI agents

While human moderators are helpful, they might not keep up with the increasing demand for chatbot interactions. One interesting approach we introduce is to use multiple AI agents to talk to one another in lieu of human users. For instance, the framework includes an AI Therapist and an AI Critic. These components work together to detect and correct harmful behaviors in AI chatbots. Chatbots can generate contextually appropriate responses and avoid harmful or manipulative behavior by understanding human behavior more accurately. Incorporating SafeguardGPT into AI systems involves implementing the framework’s components and training the chatbot using the psychotherapy-based approach. By doing so, AI systems can improve their ability to interact with humans in a healthy and trustworthy way. In the long term, this approach can advocate for collaboration and inclusivity in developing AI systems. For instance, we can introduce AI that simulates diverse stakeholders, including experts from various fields, policymakers, and the general public, to ensure a broad range of perspectives and avoid concentration of power. By involving a wider range of perspectives, the development process can be more comprehensive, ethical, and representative. SafeguardGPT can facilitate this inclusivity by allowing developers to incorporate societal values and constraints into a single AI system through a pool of agents.

Between the lines

It is important to incorporate the SafeguardGPT framework into AI systems because it aims to address the challenges of safety, trustworthiness, and ethics in AI chatbots. The framework can enable more complex and cooperative interactions between AI agents by incorporating advanced reinforcement learning techniques, such as multi-agent reinforcement learning (MARL). Additionally, introducing neuroscience-inspired AI models can help detect psychopathology in AI models and use clinical strategies to make necessary adjustments. These approaches can enhance the communication skills of AI chatbots and reduce the potential for harmful behaviors.

To make a clear distinction, this work differs from having AI agents act as therapists for humans, which raises various ethical and societal concerns. We also want to emphasize that although we are proposing to “treat” chatbots with psychotherapy, our goal is not to personify or anthropomorphize AI, which can lead to unrealistic expectations and overreliance on these systems and, in turn, to unsafe use. However, the bidirectional influence of AI and medicine warrants future exploration.

These gaps in the research point to directions for future work. One such direction is exploring the use of MARL to facilitate more complex interactions between AI agents. Another direction is incorporating neuroscience-inspired AI models that consider neurological and psychiatric anomalies to better detect psychopathology in AI models. Research in these areas can contribute to the development of safer, more trustworthy, and more ethical AI chatbots, ultimately enhancing the potential of AI to benefit society.