🔬 Research Summary by Imke Grabe, a PhD student at the IT University of Copenhagen, Denmark, where she researches co-creative applications of generative AI.

[Original paper by Imke Grabe, Miguel González-Duque, Sebastian Risi, and Jichen Zhu]

Overview: While Generative Adversarial Networks (GANs) have become a popular tool for designing artifacts in various creative tasks, a theoretical foundation for understanding human-GAN co-creation is yet to be developed. We propose a preliminary framework to analyze co-creative GAN applications and identify four primary interaction patterns between humans and AI. The framework enables us to discuss the affordances and limitations of the different interactions in the co-creation with GANs and other generative models.

Introduction

Deep generative models have become potential co-designers for humans in creative tasks across disciplines such as architecture, fashion, computer games, and art. While the technology underlying GANs progresses rapidly, how to develop GANs that human designers can use effectively in co-creation is still an open problem. This paper aims to answer the question: How do co-creative GAN applications support co-creativity? To do so, we adapt an existing framework, previously applied to analyze a broad range of co-creative interfaces, and further develop it to describe generative models. The result is a preliminary framework for an in-depth analysis of co-creative GAN applications, and we show that its taxonomy lets us analyze emerging patterns in the interaction with GANs. We limit our study to GANs because most examples in the field of generative design use them, but we believe that our framework applies to other deep generative models as well.

Key Insights

Our paper presents a preliminary framework for mapping the interaction in co-creative GAN applications and discusses the co-creative affordances of the emerging interaction patterns. More specifically, we tailor existing frameworks to describe co-creative GAN applications. With the resulting vocabulary grounded in GANs’ architecture, we aim to describe co-creative scenarios in a way that aligns with GANs’ inner workings. We developed and tested our framework by analyzing related literature in art, computer games, fashion, and object design.

The Framework

Our framework builds on existing theories from the fields of mixed-initiative co-creation and human-AI co-creation with generative models. It narrows their proposed action set down to the minimal set required to describe co-creative GAN applications. The resulting six actions map the interaction between two agents: a human designer and a computational system built around a GAN, which may be supported by other algorithms that facilitate interaction with it. Each of the six actions (initialize, learn, constrain, create, select, and adapt) can be executed by both the human and the GAN.
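To make this vocabulary concrete, the agents and actions can be written down as a small data model. This is a hypothetical Python sketch, not code from the paper: the names `Agent`, `Action`, `Step`, `describe`, and `CURATING` are our own, and the example flow encodes our reading of the Curating pattern as described in this summary.

```python
from dataclasses import dataclass
from enum import Enum


class Agent(Enum):
    HUMAN = "human"
    GAN = "gan"  # the computational agent: a GAN, possibly aided by other algorithms


class Action(Enum):
    INITIALIZE = "initialize"
    LEARN = "learn"
    CONSTRAIN = "constrain"
    CREATE = "create"
    SELECT = "select"
    ADAPT = "adapt"


@dataclass(frozen=True)
class Step:
    """One action executed by one agent during a co-creative session."""
    agent: Agent
    action: Action

    def __str__(self) -> str:
        return f"{self.agent.value}:{self.action.value}"


def describe(flow: list[Step]) -> str:
    """Render an interaction flow as a chain of agent:action steps."""
    return " -> ".join(str(step) for step in flow)


# Our reading of Curating: the human initializes, the GAN learns and creates,
# and the human selects the final artifacts.
CURATING = [
    Step(Agent.HUMAN, Action.INITIALIZE),
    Step(Agent.GAN, Action.LEARN),
    Step(Agent.GAN, Action.CREATE),
    Step(Agent.HUMAN, Action.SELECT),
]

print(describe(CURATING))
# human:initialize -> gan:learn -> gan:create -> human:select
```

Because every step pairs an agent with an action, any of the six actions can be attributed to either agent, which is what lets such a notation express who holds the initiative at each point in the interaction.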

Four Primary Interaction Patterns

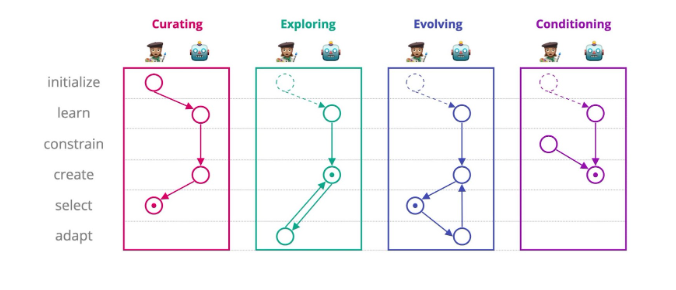

With the help of the developed framework of agents and actions, we identified four interaction patterns, which the paper presents along with an exemplary selection of co-creative GAN applications. We reviewed studies that propose models for co-creation with GANs and test them in user studies, such as a GAN that allows game designers to generate Mario levels. We also considered approaches that are not yet explicitly embedded in use cases but suggest models that could support co-creative GAN applications. For example, a GAN trained to generate dress designs from given features such as color could, in practice, be controlled by a human designer. Across all studies, the same four underlying patterns emerge; we summarize them below, and the figure shows their graphical notation. These interaction patterns are not mutually exclusive and can be combined, as examples in the paper show.

Curating

The most straightforward interaction pattern is Curating. After a human designer initializes the GAN, it learns to generate artifacts through training with gradient descent. A set of outputs is then created by sampling from the GAN, and the human agent selects the final artifacts from this set.
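A minimal sketch of the Curating flow, assuming a generator that has already been trained (the initialize and learn steps are omitted). `toy_generator`, `sample_latent`, `curate`, and `mean_value` are hypothetical names: the generator is a trivial stand-in for a neural network, and the `score` function stands in for the human designer's judgement, which in practice is made by looking at the outputs.

```python
import random
from typing import Callable

Artifact = list[float]


def toy_generator(z: list[float]) -> Artifact:
    """Hypothetical stand-in for a trained generator G(z).

    A real GAN generator is a neural network; clipping a scaled latent code
    keeps this example self-contained.
    """
    return [max(-1.0, min(1.0, 0.5 * value)) for value in z]


def sample_latent(rng: random.Random, dim: int) -> list[float]:
    """Draw a latent code z ~ N(0, I), the usual GAN prior."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def curate(n_samples: int, k: int, score: Callable[[Artifact], float],
           dim: int = 8, seed: int = 0) -> list[Artifact]:
    """Curating: the GAN creates n_samples artifacts, the human selects k of them."""
    rng = random.Random(seed)
    # create: sample latent codes and map each one through the generator
    candidates = [toy_generator(sample_latent(rng, dim)) for _ in range(n_samples)]
    # select: `score` stands in for the designer's judgement
    return sorted(candidates, key=score, reverse=True)[:k]


def mean_value(artifact: Artifact) -> float:
    """Example 'taste': prefer artifacts with a high average value."""
    return sum(artifact) / len(artifact)


chosen = curate(n_samples=20, k=3, score=mean_value)
print(len(chosen))  # 3
```

Note that the human acts only at the very end: nothing the designer selects feeds back into the generator, which is why this pattern gives the GAN full creative control.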

Exploring

The Exploring pattern describes an interaction in which the human iteratively adapts artifacts after they have been created by sampling from the GAN. This is commonly done by interpolating between artifacts or by moving an artifact's latent code along directions in latent space, including directions that represent semantic attributes.
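The two Exploring operations can be sketched directly on latent codes. This is an illustrative sketch, not the paper's implementation: `interpolate` uses plain linear interpolation (many GAN tools use spherical interpolation instead for Gaussian latents), and `move_along` assumes a semantic direction has already been found by some other method; the codes and direction in the example are made up. Each resulting code would then be passed through the generator to produce the artifact the human sees.

```python
import math

Latent = list[float]


def interpolate(z_a: Latent, z_b: Latent, steps: int) -> list[Latent]:
    """Linearly interpolate between two latent codes, endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for i in range(steps):
        t = i / (steps - 1)
        path.append([(1 - t) * a + t * b for a, b in zip(z_a, z_b)])
    return path


def move_along(z: Latent, direction: Latent, alpha: float) -> Latent:
    """Shift a latent code by distance alpha along a (normalized) direction."""
    norm = math.sqrt(sum(d * d for d in direction))
    if norm == 0:
        raise ValueError("direction must be non-zero")
    return [v + alpha * d / norm for v, d in zip(z, direction)]


# Hypothetical codes and a made-up "attribute" direction, for illustration only.
print(interpolate([0.0, 0.0], [1.0, 1.0], steps=3))  # [[0.0, 0.0], [0.5, 0.5], [1.0, 1.0]]
print(move_along([0.0, 0.0], [3.0, 4.0], alpha=5.0))  # [3.0, 4.0]
```

Both operations leave the generator untouched and only change where in its latent space the human is looking, matching the observation that Exploring traverses the conceptual space rather than reshaping it.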

Evolving

In the third identified pattern, Evolving, the human selects artifacts for the computational agent to adapt and combine in the following step. Within the field of evolutionary computation, these actions are also referred to as mutation and recombination, respectively.
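Evolving can be sketched as interactive evolution over latent codes. In this hypothetical sketch, `mutate` adds Gaussian noise (mutation) and `recombine` uses uniform crossover (recombination); both are common choices in evolutionary computation, not necessarily the operators used in the reviewed applications. The `preference` function is a stand-in for the human's selection and, for brevity, scores latent codes directly rather than the generated artifacts; `TARGET` is a made-up design goal.

```python
import random
from typing import Callable

Latent = list[float]


def mutate(z: Latent, rng: random.Random, sigma: float = 0.1) -> Latent:
    """Adapt (mutation): perturb every coordinate with Gaussian noise."""
    return [v + rng.gauss(0.0, sigma) for v in z]


def recombine(z_a: Latent, z_b: Latent, rng: random.Random) -> Latent:
    """Combine (recombination): uniform crossover between two parents."""
    return [a if rng.random() < 0.5 else b for a, b in zip(z_a, z_b)]


def evolve(population: list[Latent], score: Callable[[Latent], float],
           generations: int, k: int, seed: int = 0) -> list[Latent]:
    """Alternate human selection with machine adaptation for a few generations."""
    rng = random.Random(seed)
    size = len(population)
    for _ in range(generations):
        # select: the human keeps the k designs they prefer (simulated by `score`)
        selected = sorted(population, key=score, reverse=True)[:k]
        # adapt + combine: the computational agent breeds new candidates
        children = [
            mutate(recombine(rng.choice(selected), rng.choice(selected), rng), rng)
            for _ in range(size - k)
        ]
        # the selected parents survive unchanged (elitism)
        population = selected + children
    return population


TARGET = [1.0, -1.0, 0.5, 0.0]  # hypothetical design the simulated human is after


def preference(z: Latent) -> float:
    """Simulated human taste: closer to TARGET is better."""
    return -sum((a - b) ** 2 for a, b in zip(z, TARGET))


init_rng = random.Random(42)
start = [[init_rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(12)]
final = evolve(start, preference, generations=10, k=4)
print(max(map(preference, final)) >= max(map(preference, start)))  # True
```

Keeping the selected parents in the next generation (elitism) is a design choice of this sketch: it guarantees that a design the human has already chosen is never lost, so the best score can only stay the same or improve.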

Conditioning

Lastly, the pattern Conditioning describes an interaction in which the human constrains the desired artifact characteristics before outputs are created through sampling from the GAN.
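Conditioning can be sketched with a conditional generator that receives a condition vector alongside the latent code, as in conditional GANs. The sketch assumes a one-hot color label, echoing the dress-design example; `ATTRIBUTES`, `one_hot`, `toy_conditional_generator`, and `conditioned_samples` are hypothetical, and the toy generator only imitates the effect of conditioning (the condition picks the dominant color channel, the latent code adds variation) rather than learning it.

```python
import random

ATTRIBUTES = ["red", "green", "blue"]  # hypothetical condition labels, e.g. dress color


def one_hot(label: str) -> list[float]:
    """Constrain: encode the designer's chosen attribute as a condition vector c."""
    if label not in ATTRIBUTES:
        raise ValueError(f"unknown attribute: {label}")
    return [1.0 if name == label else 0.0 for name in ATTRIBUTES]


def toy_conditional_generator(z: list[float], c: list[float]) -> list[float]:
    """Hypothetical stand-in for a trained conditional generator G(z, c).

    Returns an RGB triple: the condition selects the dominant channel and the
    latent code only adds a small shared variation. A real conditional GAN
    would feed c into the network, e.g. concatenated with z.
    """
    variation = 0.05 * sum(z) / len(z)
    return [min(1.0, max(0.0, 0.8 * ci + 0.1 + variation)) for ci in c]


def conditioned_samples(label: str, n: int, dim: int = 8, seed: int = 0) -> list[list[float]]:
    """Conditioning: the human fixes c first, then the GAN creates n samples."""
    rng = random.Random(seed)
    c = one_hot(label)
    return [toy_conditional_generator([rng.gauss(0.0, 1.0) for _ in range(dim)], c)
            for _ in range(n)]


samples = conditioned_samples("blue", n=5)
# Every sample respects the constraint: blue is the dominant channel.
print(all(s.index(max(s)) == 2 for s in samples))  # True
```

The constraint is set once, before any sampling, and each call is independent of earlier ones, which reflects the one-shot character of this pattern.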

Interaction Patterns in Comparison

The interaction patterns we identified based on the framework allow us to analyze how co-creative GAN applications support co-creativity to different degrees.

Curating and Conditioning can be regarded as one-shot approaches: the computational agent does not recall previously created artifacts, so each iteration is an isolated process. Curating leaves the human agent out of the actual (co-)creative process and gives the GAN complete creative control. Conditioning, in contrast, restricts the GAN’s creativity by granting the human agent more creative authority along the way, which allows for more co-creativity. Because the GAN must commit to a frame set by the human, its design space is restricted, leading to a trade-off between novelty and typicality with respect to human expectations.

Exploring and Evolving, conversely, imply iterative interaction between the human and the GAN. Although human initiative appears only after the first creation stage in both patterns, they support the co-creative exchange differently. Through selection in an Evolving flow, the human agent narrows the GAN’s conceptual space, restricting it to developing a chosen population of designs. Exploring, in comparison, leaves the conceptual space as is but lets the human designer traverse it.

Between the lines

By synthesizing existing GAN applications and theory from the field of mixed-initiative co-creation, our work aims to shed light on how GANs participate in co-creative design processes. By raising awareness of how these systems function, this insight might help us, and non-experts in particular, design better interactive applications. By identifying emerging patterns, we aim to enable a discussion of current trends in co-creative GAN applications and point to possible directions for future research in this field.

With the framework’s GAN-specific grounding, we hope to make the technical, and thereby interactive, capabilities of these systems easier to understand when mapping interactions. More specifically, the proposed set of actions supports mapping interactions with generators via latent codes. Hence, these actions can also be applied to other generative models whose generator network produces outputs from alterable latent codes, such as variational autoencoders (VAEs).
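The point that the actions carry over to other latent-variable models can be illustrated with a structural interface. In this hypothetical sketch, any model exposing a `generate(z)` method fits `LatentGenerator`; `ToyGANGenerator` and `ToyVAEDecoder` are trivial stand-ins rather than real architectures, and `adapt` implements the adapt action as a shift of the latent code before decoding.

```python
import math
from typing import Protocol, Sequence


class LatentGenerator(Protocol):
    """Any model that turns a latent code into an artifact."""

    def generate(self, z: Sequence[float]) -> list[float]: ...


class ToyGANGenerator:
    """Hypothetical stand-in for a GAN generator G(z)."""

    def generate(self, z: Sequence[float]) -> list[float]:
        return [math.tanh(v) for v in z]


class ToyVAEDecoder:
    """Hypothetical stand-in for a VAE decoder mapping z to an output."""

    def generate(self, z: Sequence[float]) -> list[float]:
        return [1.0 / (1.0 + math.exp(-v)) for v in z]


def adapt(model: LatentGenerator, z: Sequence[float],
          direction: Sequence[float], alpha: float) -> list[float]:
    """The adapt action edits only the latent code, so it works for either model."""
    moved = [v + alpha * d for v, d in zip(z, direction)]
    return model.generate(moved)


for model in (ToyGANGenerator(), ToyVAEDecoder()):
    out = adapt(model, [0.0, 0.0], [1.0, -1.0], alpha=1.0)
    print(type(model).__name__, [round(v, 3) for v in out])
# ToyGANGenerator [0.762, -0.762]
# ToyVAEDecoder [0.731, 0.269]
```

Since `adapt` never inspects how the model was trained, only that it decodes latent codes, the same interaction code serves both a GAN generator and a VAE decoder.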

As the development of generative models has continued rapidly since this study was conducted, the framework has yet to be extended to recently emerging models, especially new text-conditional generative models.