✍️ By Thomas Linder, PhD [1]

Thomas holds a PhD in the Sociology of Technology and is a Senior Coordinator at Open North, a Lecturer in Responsible AI at Concordia University, and a member of the AI Policy Group at the Center for AI and Digital Policy (CAIDP).

The current wave of generative AI development has made teaching responsible AI harder, and more necessary, than ever.

Challenges in Teaching Responsible AI

Teaching AI literacy is by now widely accepted as urgently necessary, and universities, NGOs, private-sector businesses, and public-sector organizations are all scrambling to offer such courses and enroll their staff in them. However, the question of what, exactly, these trainings are supposed to achieve is more complicated than it may first seem. In this essay, I reflect on designing and teaching a course on responsible AI last year and unpack the goals I found to be critical to teaching the subject. These goals are to decenter generative AI, to unpack AI as a sociotechnical system, and to anchor responsible AI in stakeholder perspectives. With these goals in mind, I then make recommendations on how we can keep responsible AI firmly focused on ethical outcomes.

At the end of 2024, I was hired to redesign a Responsible AI course for a continuing education class at Concordia University. The course had originally been developed by the late, and sorely missed, Abhishek Gupta, and while excellent, it predated the generative AI hype cycle and needed updating. In designing and teaching the course, I uncovered several important challenges, and responding to them illuminated further pedagogical goals for teaching responsible AI.

The three central, and remarkably persistent, challenges were:

- The term “AI” was implicitly understood in the paradigm of generative AI. If left unguided, all conversations about various aspects of AI drifted inexorably into a discussion of GenAI.

- Conversations about “AI” were stubbornly anthropomorphic and frequently excluded crucial understanding of the tools’ mechanisms.

- Moving from abstract, generalized risks and principles to their concrete application, and analyzing the specific outcomes and impacts likely in a given situation, is never easy. Making values and principles real and tangible in this way is a central goal of any social studies course, but here the dominance of generative AI and anthropomorphic language made it harder.

From these challenges, two larger points became clear about “responsible AI,” both as an object of pedagogy and as a discourse around governance and ethics.

Lessons from Teaching Responsible AI

First, the object of the discussion requires careful definition. What is to be responsibly governed? What does “AI” refer to in this context? Clearly not just generative AI (although that, too, is a rapidly diversifying category). There are numerous other kinds of AI tools that bear only a passing resemblance to generative AI, from facial recognition to fraud prediction to automated decision-making. [2] These differences are arguably even more important for responsible governance than the similarities, for it is in the unique characteristics of each system that significant aspects of its power and potential harm lie. While this is well understood in expert policy circles, it appeared to be much less so in lay discourse.

Thus, the first recommendation for teaching responsible AI is to consistently decenter generative AI: to underscore the wide range of AI tools in use and to highlight the technological and functional differences among them. This applies, increasingly, to the category of generative AI itself. There are important differences between a chatbot answering resident queries about local ordinances and a research assistant LLM. [3]

The second issue is that the anthropomorphizing language used as metaphorical shorthand for how these tools function obscures and impedes critical analysis, not just of how the systems actually work but, more importantly, of the fact that the ways in which they work are the products of human decisions. Language like “learn,” “know,” “think,” and “understand” treats them as black boxes, independent of human design in general and, specifically, independent of data, model type, model training, and tool implementation in the operational context. It may be impossible, or at least impractical, to eradicate anthropomorphic metaphors. (Indeed, is “train” not anthropomorphic? More divisively, what about “learn”?) Anthropomorphism is not so easily identified and rejected, but it is crucial to center the role of human agency.

Whatever the language choice, the second recommendation for teaching responsible AI is thus to unpack “AI” as a sociotechnical system, in other words as a system composed of technical components (hardware and software) as well as social components (people, culture, organizational structures, etc.). Unpacking AI as a sociotechnical system in this case means disentangling all the moments across the AI lifecycle at which human decision-making affected the structure and functioning of the system. [4] In this way, it can be shown that “AI” is really just a complex system of interconnected social and technical components, the particular operational form of which is the contingent product of a lifecycle’s worth of human decision-making. It is through this material deconstruction of the AI entity that the tendency to anthropomorphize can be effectively undermined.

Once the diversity of AI tools and their sociotechnical contingency are established as an ontology, students have the foundation to grapple with a different kind of problem: that of values and risks, and the degree to which the particular standpoints of various stakeholders influence their perspectives on which values and risks are pertinent in a situation. [5] It is on this knotty problem that my experience teaching the subject led to an important insight for teaching responsible AI: recenter stakeholders’ perspectives.

The traditional approach is to teach values and risk on the one hand and stakeholder engagement on the other as separate subjects: first to provide an abstracted, universalized framework for the former (e.g. the US Blueprint for an AI Bill of Rights or the NIST AI Risk Management Framework) and then to bring in stakeholder perspectives later in the process, positioned as a potential situational modulation of the risks and values. This certainly makes sense from a policy development perspective. For a pedagogical approach to responsible AI, however, the order can be inverted to tackle the third significant challenge: making real and tangible the otherwise abstract, decontextualized, disembodied, universalized notions of values and risks.

Having defined AI as a sociotechnical system, one has effectively already brought the situated knowledge of the stakeholders into the picture. The relevance of their values, and their perception of risk, is already established as an important causal factor in the functioning and impacts of AI systems. This is therefore a fruitful position from which to ask, at each point in the AI lifecycle, who the relevant stakeholders are, what their interests and concerns might be, and, crucially for responsible governance, what they would need to know about the system and what they would need to have influence upon in order to advocate for their interests and mitigate their concerns. This exercise recenters the perspective of the stakeholders, positioning them as active players in the development and operation of AI systems. [6] Encompassing the entire AI lifecycle also breaks down the illusory distinction between internal and external stakeholders, thereby bringing in the values and risk perceptions of civil society and community groups.
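For instructors who want to turn this exercise into a classroom worksheet, the questions above can be sketched as a small data structure. This is only an illustrative sketch, not the format used in the course: the lifecycle stage names, the `StakeholderEntry` fields, and the example entry about a fraud prediction tool are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical lifecycle stages, chosen for illustration only.
LIFECYCLE_STAGES = [
    "problem framing",
    "data collection",
    "model training",
    "deployment",
    "monitoring",
]


@dataclass
class StakeholderEntry:
    """One stakeholder's standpoint at one lifecycle stage."""
    stakeholder: str
    interests: List[str]
    concerns: List[str]
    needs_to_know: List[str]         # what they would need to know about the system
    needs_influence_over: List[str]  # what they would need to be able to influence


def build_worksheet(stages: List[str]) -> Dict[str, List[StakeholderEntry]]:
    """Create an empty worksheet with one list of entries per stage."""
    return {stage: [] for stage in stages}


def unanswered_stages(worksheet: Dict[str, List[StakeholderEntry]]) -> List[str]:
    """Return the stages for which no stakeholder has been identified yet."""
    return [stage for stage, entries in worksheet.items() if not entries]


worksheet = build_worksheet(LIFECYCLE_STAGES)

# Hypothetical example entry for a fraud prediction tool.
worksheet["data collection"].append(
    StakeholderEntry(
        stakeholder="people whose transactions are recorded",
        interests=["accurate records", "privacy"],
        concerns=["biased historical data"],
        needs_to_know=["which data sources are used"],
        needs_influence_over=["correction of wrong records"],
    )
)

# Stages still waiting for students to identify stakeholders.
print(unanswered_stages(worksheet))
```

Listing the stages that still have no entries gives students a simple prompt to keep asking who is affected at every point in the lifecycle, rather than only at deployment.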

Recentering Stakeholders, and thus Ethics, in Responsible AI

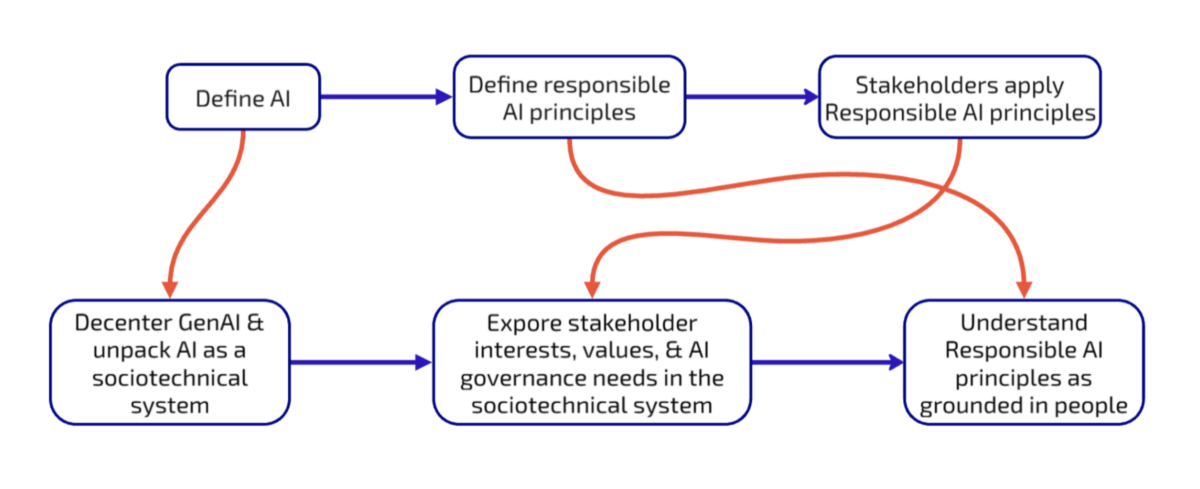

By recentering stakeholder engagement in the pedagogical process after the sociotechnical unpacking of AI and before the discussion of risks and values, the relevance, involvement, and agency of all stakeholders are foregrounded, and students can actively think through how stakeholders’ standpoints affect their interests, values, and risk perception (see Figure 1 above). Such an empathetic exercise encourages a far more effective and affective consideration of classic AI ethics principles like fairness, inclusion, and autonomy, or risks like bias, exclusion, exploitation, and disempowerment. Considered from multiple standpoints implicated throughout the sociotechnical AI system and across its lifecycle, these principles, and the tensions among them, come to life in a tangible and, for responsible AI, essential way.

Having tackled this third challenge, this pedagogical approach opens a final and critical door for responsible AI. Increasingly criticized for corporate, technocratic capture and for being reduced to governance lip service that no longer considers ethics and outcomes, responsible AI needs to ensure that its “to what end” is as important as its “how.” The proper ends of responsible AI cannot be considered without the deep involvement of multifarious stakeholder perspectives. By anchoring responsible AI in their points of view, one can unpack what otherwise anodyne-sounding governance mechanisms like transparency, explainability, and accountability must actually mean, and how they must be operationalized to ensure that stakeholders can champion their values and mitigate their risks.

By refocusing responsible AI teaching on the heterogeneity of AI systems, by unpacking AI as a sociotechnical system with a lifecycle, and by embedding the standpoints of stakeholders throughout that system and lifecycle, the challenges of generative AI hype, anthropomorphism, and decontextualized values and risks can be effectively tackled. Responsible AI as a framework can then keep its primary focus and ultimate goal in view: that AI tools are used for the inclusive and equitable betterment of all in society.

Footnotes

1. Thomas Linder is passionate about designing technological change that reduces inequality, empowers communities, and embeds equity and sustainability in social, political, and economic systems. He holds a PhD in Sociology of Technology and is currently the Senior Coordinator for Research and Delivery at Open North. His areas of expertise are digital inequality and data justice-informed responsible AI governance, data governance (CDMP), digital policy development, and risk and privacy impact assessment (CIPP/C). In addition, he is a member of the CAIDP Policy Group (G7/G20), teaches Responsible AI at Concordia University, and works closely with several African NGOs on data justice and digital equity issues across the continent. He splits his time between Toronto and Cape Town.

2. (“What Are We Really Talking about When We Talk about AI?,” n.d.)

3. (Watson 2019; Salles, Evers, and Farisco 2020; Placani 2024)

4. (Chen and Metcalf 2024; Kudina and Van De Poel 2024)

5. (Zhang and Dafoe 2019; O’Shaughnessy et al. 2023)

6. (Domínguez Figaredo and Stoyanovich 2023; “Inclusive Research & Design,” n.d.)

Sources

- Chen, Brian J., and Jacob Metcalf. 2024. “Explainer: A Sociotechnical Approach to AI Policy.” Data & Society. https://datasociety.net/wp-content/uploads/2024/05/DS_Sociotechnical-Approach_to_AI_Policy.pdf

- Domínguez Figaredo, Daniel, and Julia Stoyanovich. 2023. “Responsible AI Literacy: A Stakeholder-First Approach.” Big Data & Society 10 (2): 20539517231219958. https://doi.org/10.1177/20539517231219958

- “Inclusive Research & Design.” n.d. Partnership on AI. Accessed February 7, 2025. https://partnershiponai.org/program/inclusive-research-design/

- Kudina, Olya, and Ibo Van De Poel. 2024. “A Sociotechnical System Perspective on AI.” Minds and Machines 34 (3): 21. https://doi.org/10.1007/s11023-024-09680-2

- O’Shaughnessy, Matthew R., Daniel S. Schiff, Lav R. Varshney, Christopher J. Rozell, and Mark A. Davenport. 2023. “What Governs Attitudes toward Artificial Intelligence Adoption and Governance?” Science and Public Policy 50 (2): 161–76.

- Placani, Adriana. 2024. “Anthropomorphism in AI: Hype and Fallacy.” AI and Ethics 4 (3): 691–98. https://doi.org/10.1007/s43681-024-00419-4

- Salles, Arleen, Kathinka Evers, and Michele Farisco. 2020. “Anthropomorphism in AI.” AJOB Neuroscience 11 (2): 88–95. https://doi.org/10.1080/21507740.2020.1740350

- Watson, David. 2019. “The Rhetoric and Reality of Anthropomorphism in Artificial Intelligence.” Minds and Machines 29 (3): 417–40. https://doi.org/10.1007/s11023-019-09506-6

- “What Are We Really Talking about When We Talk about AI?” n.d. Accessed February 7, 2025. https://www.globalgovernmentforum.com/what-are-we-really-talking-about-when-we-talk-about-ai/

- Zhang, Baobao, and Allan Dafoe. 2019. “Artificial Intelligence: American Attitudes and Trends.” Available at SSRN 3312874. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3312874