🔬 Research Summary by Saud Alharbi, a principal AI Engineer at CertKOR AI who is pursuing a Ph.D. in Computer Engineering at Polytechnique Montreal.

[Original paper by Saud Alharbi, Lionel Tidjon, and Foutse Khomh]

Overview: This research paper explores integrating ethical considerations into the machine learning (ML) pipeline. It examines “What AI engineering practices and design patterns can be utilized to ensure responsible AI development?” and proposes a comprehensive approach to promote responsible AI design.

Introduction

As AI technologies continue to advance, ensuring responsible AI design becomes paramount. This research paper explores integrating ethical considerations into the ML pipeline, addressing data pre-processing, model selection, and evaluation. The aim is to establish guidelines and frameworks that promote ethical design practices in AI systems. New design patterns are introduced, emphasizing the importance of AI ethics orchestration and automated response (EOAR) for auditing and testing.

Key Insights

How can we design responsible AI systems?

Responsible AI design is a critical aspect of AI development that cannot be overlooked. This research argues that ethical principles must be integrated throughout the ML pipeline, so that AI systems are built to minimize potential biases and harm. The findings also point to the need for ongoing collaboration between different disciplines to ensure a holistic approach to ethical AI design.

To achieve responsible AI design, the authors reviewed existing frameworks, identified their key elements, and proposed an encompassing system that spans all stages of the ML pipeline. They introduce new design patterns that address ethical considerations and highlight the importance of AI ethics orchestration and automated response (EOAR) for continuous auditing and testing.

The authors' methodology combines a literature review of existing frameworks, a survey of AI practitioners, and thematic analyses of the survey findings. The study aimed to uncover the challenges and best practices in responsible AI design while gathering feedback on the proposed framework. The authors also compared existing ML design patterns, identified gaps, and proposed new design patterns for responsible AI. The research contributes practical insights and solutions for ethical and responsible AI development.

A diverse range of experts from several organizations and domains provided insights into responsible AI design. Participants came from organizations such as Ethically Aligned AI, Thomson Reuters, IBM, Conseil de l’innovation du Québec, Université de Sherbrooke, CSIRO (Australia), Polytechnique Montréal, and the Ministry of Health, Quebec. Their expertise spanned AI ethics, AI adoption, data management, responsible AI, cybersecurity, law and technology regulation, software engineering, and technology transfer. Their roles ranged from AI security architects and CEOs to legal and compliance experts, data engineering advisors, innovation and AI adoption directors, professors, principal research scientists, information security officers, and responsible AI specialists.

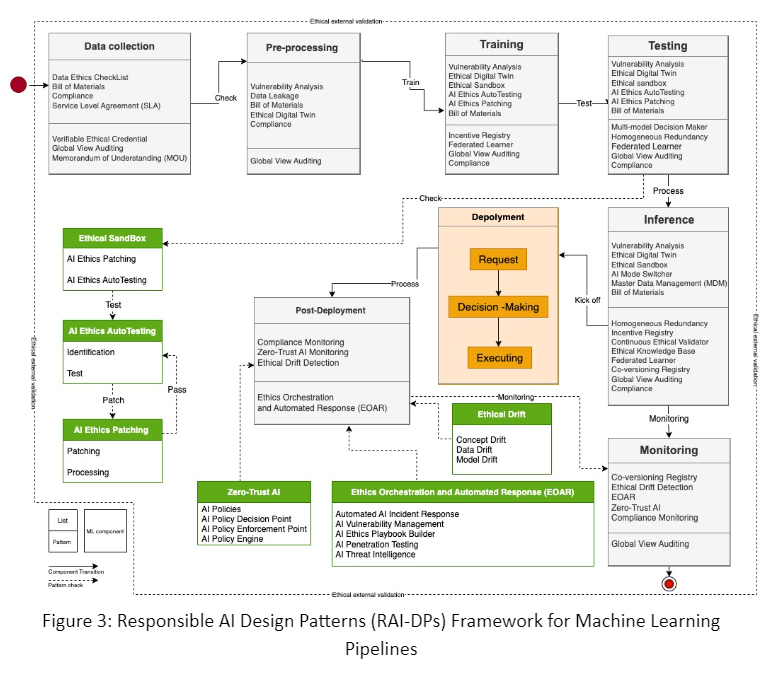

Introducing the Responsible AI Design Patterns (RAIDPs) Framework

The research paper introduces the Responsible AI Design Patterns (RAIDPs) Framework for machine learning pipelines. Figure 3 of the paper presents the full RAIDPs Framework, giving stakeholders an overview of its structure and goals and helping them judge how relevant each design pattern is to their AI projects or initiatives. The framework is organized around the following stages of the ML pipeline:

- Data collection: The process of gathering data used in the ML pipeline.

- Pre-processing: Cleaning and preparing the data for use in the ML pipeline.

- Training: The process of training the ML model using the collected data.

- Testing: Evaluating the performance of the trained model.

- Inference: Using the trained model to make predictions on new data.

- Deployment: Deploying the trained model in a production environment.

- Post-deployment: Monitoring the performance of the deployed model and making necessary updates or changes.

Extension-Related Patterns for Ethical Considerations

The RAIDPs Framework also introduces extension-related patterns that address ethical considerations in the ML pipeline. These patterns include:

- Ethical sandbox: Promotes sandboxed experimentation to ensure ethical considerations are met.

- AI Ethics Auto-Testing: Facilitates automated ethical testing of AI models.

- AI Ethics Patching: Addresses ethical issues by providing mechanisms to fix and update AI models.

- Zero-Trust AI: Emphasizes the importance of not simply trusting ML models and encourages continuous monitoring.

- AI Ethics Orchestration and Automated Response (EOAR): Involves regular auditing and testing of ML models to ensure ethical principles are followed throughout their lifecycle.
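
To make the idea of automated ethical testing more concrete, here is a minimal Python sketch of what a single automated check might look like. It is an illustration under stated assumptions, not the paper's implementation: the summary does not say which metrics or tools the patterns rely on, so the metric (a demographic parity gap), the 0.2 threshold, and the names `EthicsCheckResult`, `demographic_parity_gap`, and `run_ethics_audit` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class EthicsCheckResult:
    """Outcome of one automated check (hypothetical structure)."""
    name: str
    value: float
    threshold: float

    @property
    def passed(self) -> bool:
        # The check passes when the measured value stays within the threshold.
        return self.value <= self.threshold


def demographic_parity_gap(predictions: Sequence[int], groups: Sequence[str]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = {}
    positives = {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    if not totals:
        raise ValueError("at least one prediction is required")
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def run_ethics_audit(predictions: Sequence[int], groups: Sequence[str],
                     max_gap: float = 0.2) -> EthicsCheckResult:
    """Run one check; max_gap is an assumed, illustrative threshold."""
    gap = demographic_parity_gap(predictions, groups)
    return EthicsCheckResult("demographic_parity_gap", gap, max_gap)
```

A check like this could be scheduled to run repeatedly after deployment, in the spirit of continuous monitoring and regular auditing. For instance, `run_ethics_audit([1, 1, 0, 1, 0, 0, 0, 0], ["a"] * 4 + ["b"] * 4)` measures a gap of 0.75 between the two groups and reports `passed` as `False`, which could then trigger a review or a model update.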

Between the lines

This research emphasizes the significance of responsible AI design and provides a comprehensive framework for integrating ethical considerations into the ML pipeline. By following these guidelines and leveraging AI ethics orchestration and automated response, organizations can foster the development of ethically responsible AI systems. In the end, the aim is to create AI systems that not only excel in performance but also adhere to ethical principles. Additionally, future research should focus on evaluating the practicality and effectiveness of the proposed frameworks in real-world scenarios. The security of data management is another vital area that requires further exploration. Is there a schema for organizations to follow to mitigate risks in a specific use case?