🔬 Research Summary by Dr. Stephen MacNeil, an Assistant Professor of Computer and Information Sciences at Temple University, where he directs the HCI Lab. His work focuses on democratizing access to expertise and expert processes so that anyone can design software, products, and policies that affect them. His recent work has focused on how generative AI models can be used to facilitate learning, effective problem-solving, and creativity across diverse communities.

[Original paper by Stephen MacNeil, Andrew Tran, Joanne Kim, Ziheng Huang, Seth Bernstein, and Dan Mogil]

Overview: Generative AI models, like ChatGPT, have facilitated widespread access to advanced AI assistants for individuals lacking AI expertise. Yet, a persistent challenge remains: the absence of essential domain knowledge required to effectively instruct these agents and to skillfully elicit good responses through the “art” of prompt engineering. This work explores the question of how interfaces can best convey expert practices to users when using generative AI models.

Introduction

How can domain expertise be used to guide the prompting of generative AI?

Large Language Models (LLMs) are a recent advancement in natural language processing. This form of generative AI is capable of producing high-quality text based on natural language instructions, called “prompts.” However, crafting high-quality prompts is challenging, and currently, it is as much an art as it is a science.

To facilitate the creation of high-quality prompts, the PromptMaker system from Google Research guides non-experts through the process of articulating examples for few-shot learning prompts. This is a great first step in teaching non-experts the art of prompting, but ultimately, there is more to getting good responses from an LLM than generating “well-structured” prompts. It is critical for users to know what models can do and what they should do.

As an example, consider the generative art model Midjourney. Based on a text prompt, it can generate artistic images that can mimic the styles of artists and photographers. However, individuals not well-versed in artistic nuances might grapple with the intricacies of designating particular artistic styles or contemplating essential elements such as framing, color palette, lighting, and focal points. When aiming for photo-realistic results, it is often necessary to specify the pixel resolution or even the camera aperture within the prompt. These considerations encompass more than mere prompt structuring; they encapsulate insights that seasoned artists inherently possess. This was the goal of our project: to integrate these expert decisions explicitly as interface affordances.

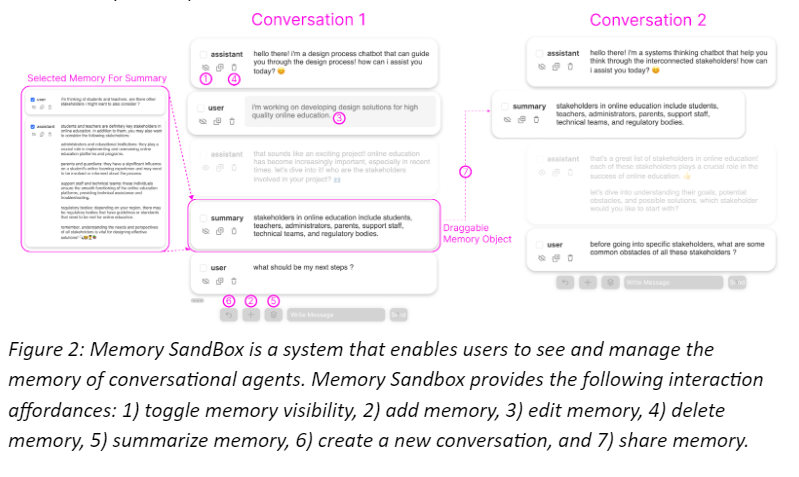

In our work, we explored how a user interface can communicate to non-experts the key considerations that guide domain experts. We introduce the concept of “Prompt Middleware,” which is a technique to communicate LLM capabilities through interface affordances such as buttons and drop-down menus. We instantiated this approach in a feedback system called FeedbackBuffet. This system exposes best practices in articulating feedback requests through interface affordances and assembles prompts using a template-based approach.

Key Insights

As LLMs become more widely used, we must consider how we educate people to use them effectively. Our research contributes to a growing body of work that is exploring how user interfaces can guide non-experts to devise high-quality prompts. Recent work at Google has focused on making it easier to develop few-shot learning prompts. However, two crucial challenges remain: 1) scaffolding domain expertise into the prompting process and 2) directly integrating LLMs into user interfaces. We propose Prompt Middleware as a framework for addressing both challenges by mapping options in the UI to prompts for an LLM. Prompt Middleware acts as a middle layer between the LLM and the UI while also embedding domain expertise into the prompting process. The UI abstracts away the complexity of the prompts and separates two concerns: the user completing their task, and the prompts that guide the LLM to help with that task.

The example shown in Figure 1 demonstrates how a simple button or drop-down menu can encode expert design considerations into the interface. This surfaces the critical design decisions for the user and so facilitates design work. Prompt Middleware is the process by which templates are used to map those considerations to effective prompts. We demonstrated this vision with FeedbackBuffet, an intelligent writing assistant that automatically generates feedback based on text input. The UI presents relevant kinds of feedback as “menu options” that guide users in articulating their feedback requests. These options are combined using a template to form a prompt for GPT-3. The template integrates best practices of feedback design and cues the feedback seeker to consider the qualities of good feedback. We are currently evaluating the FeedbackBuffet system to understand how much agency and guidance people want when requesting feedback. We hypothesize that experts may want convenient buttons to quickly rephrase or critique their writing, while non-experts may be more interested in exploring the menu of options.
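To make the template-based assembly described above more concrete, here is a minimal sketch in Python. It is not the FeedbackBuffet implementation: the option labels, the wording attached to each option, the template text, and the `build_prompt` function are all hypothetical and chosen only for illustration. The sketch shows one plausible way that selections from two drop-down menus could be mapped to a prompt string, which would then be sent to an LLM.

```python
from string import Template

# Hypothetical menu options. The labels and the wording behind them are
# illustrative assumptions, not the options used in FeedbackBuffet.
FEEDBACK_TYPES = {
    "critique": "Point out the weaknesses in the text",
    "praise": "Point out what the text does well",
    "suggestion": "Suggest concrete improvements to the text",
}
FOCUS_AREAS = {
    "clarity": "how clearly the ideas are expressed",
    "structure": "how the text is organized",
    "tone": "whether the tone suits the audience",
}

# Hypothetical template: domain expertise lives here and in the option
# wording, so the user never has to write the prompt by hand.
PROMPT_TEMPLATE = Template(
    "$instruction, focusing on $focus.\n"
    "Text:\n"
    "$text\n"
    "Feedback:"
)


def build_prompt(feedback_type: str, focus: str, text: str) -> str:
    """Map the user's menu selections and input text to a prompt string."""
    if feedback_type not in FEEDBACK_TYPES:
        raise ValueError(f"Unknown feedback type: {feedback_type!r}")
    if focus not in FOCUS_AREAS:
        raise ValueError(f"Unknown focus area: {focus!r}")
    return PROMPT_TEMPLATE.substitute(
        instruction=FEEDBACK_TYPES[feedback_type],
        focus=FOCUS_AREAS[focus],
        text=text.strip(),
    )


if __name__ == "__main__":
    # The resulting string would be passed to an LLM; that call is omitted.
    print(build_prompt("critique", "clarity", "Our app helps people do things."))
```

Keeping the options in plain dictionaries means a domain expert could revise the wording behind each menu item without touching the interface code, which is one way to read the separation of concerns that Prompt Middleware aims for.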

Between the Lines

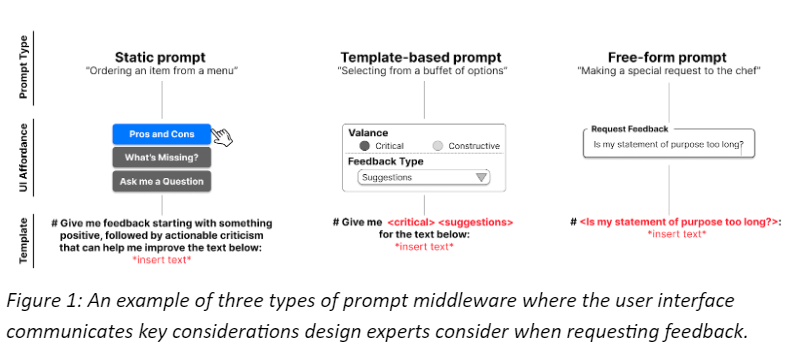

As non-experts continue integrating generative AI assistance into their everyday workflows, we must consider how these systems can better communicate their capabilities through interface affordances. The HCI Lab at Temple University is currently focusing on a series of projects that more explicitly communicate the capabilities of large language models. For example, an undergraduate researcher in our lab, Ziheng Huang, recently developed “Memory SandBox,” which is a system that exposes the memory model of an LLM-powered chatbot. This enables users to understand what the chatbot remembers from the conversation, given a limited context window (i.e., the chatbot can forget parts of the conversation that exceed the input size for the model). This work was recently published at ACM UIST as a demo paper. We hope that these affordances and advances in AI literacy learning will continue to facilitate the effective use of generative AI models by non-experts.
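The idea of a limited context window mentioned above can be illustrated with a small Python sketch. It is not how Memory SandBox works internally: the `split_memory` function is hypothetical, and counting whitespace-separated words is only a rough stand-in for a real tokenizer. The sketch keeps the most recent messages that fit within a budget and reports the older ones as forgotten, which is the kind of information an interface could surface to users.

```python
def split_memory(messages: list[str], max_tokens: int) -> tuple[list[str], list[str]]:
    """Split a conversation into (remembered, forgotten) messages.

    Assumption: token cost is approximated by the number of
    whitespace-separated words; real models use their own tokenizers.
    """
    remembered: list[str] = []
    used = 0
    # Walk backwards so the most recent messages are kept first.
    for message in reversed(messages):
        cost = len(message.split())
        if used + cost > max_tokens:
            break
        remembered.append(message)
        used += cost
    remembered.reverse()  # restore chronological order
    forgotten = messages[: len(messages) - len(remembered)]
    return remembered, forgotten


if __name__ == "__main__":
    conversation = [
        "My name is Ada",
        "I like tea",
        "What should I drink this morning?",
    ]
    kept, dropped = split_memory(conversation, max_tokens=9)
    print("remembered:", kept)
    print("forgotten:", dropped)
```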