🔬 Research Summary by Dr. Emre Kazim and Airlie Hilliard

Emre Kazim, Co-CEO @ Holistic AI – AI Risk Management & Auditing Platform

Airlie Hilliard, Senior Researcher, Holistic AI | Doctoral Researcher, Goldsmiths, University of London

[Original paper by Emre Kazim (Holistic AI, UCL), Enzo Fenoglio, Airlie Hilliard (Holistic AI), Adriano Koshiyama (Holistic AI), Catherine Mulligan, Markus Trengove, Abigail Gilbert, Arthur Gwagwa, Denise Almeida, Phil Godsiff, and Kaska Porayska-Pomsta]

Overview: This experimental paper invites the reader to consider artificial intelligence as a new form of value capture – where traditional automation concerns physical labor, AI relates (perhaps for the first time) to the capture of intellectual labor. Our main takeaway is to invite the reader to consider artificial intelligence as a representation of the capture of value sui generis and that this may be a step change in the capture of value vis à vis the emergence of digital technologies.

Introduction

With the emergence of new digital technologies, such as the Web, blockchain, artificial intelligence (AI), and the Internet of Things, we have witnessed significant changes in the production and structuring of products and services. Commentators have focused largely on the potential opportunities (economic, innovation, research, etc.), the potential harms (economic, ethical, climate, etc.), and advancing the engineering techniques that ground such technologies. Far less has been explored in the conceptual realm regarding the foundational and theoretical shifts brought about by these technologies. Indeed, many questions remain unanswered: What exactly are “digital things”? What is the ontological nature and state of phenomena produced by and expressed in terms of digital products? And is there anything distinct about digital technologies in comparison with how technologies have traditionally been conceived?

To investigate the question of what value is being expressed by an algorithm, we conceptualize the algorithm as a digital asset, where a digital asset is a valued digital thing derived from a particular digital technology. Here, we frame an algorithmic system as a digital asset that is valued because it realizes a particular aim, such as a decision or recommendation for a set task.

Key Insights

Things

Philosophical literature has a long history of debate concerning what a thing is. At one extreme is the view that things are a product of human framing, while at the other extreme is the view that things can be thought of as discrete objects in themselves. Accordingly, a thing can be defined in several ways, but broad definitions map onto intrinsic and extrinsic approaches:

- Intrinsic (ontological categorization): an intrinsic definition of a thing or object relates only to the properties of the thing itself, independently of other objects.

- Extrinsic (relational ontology): an extrinsic definition of a thing conceives of it only in terms of how it relates to other things or objects. Here, what is critical to understanding the essential properties of an entity is to understand its position in a complex of relationships.

Digital things can be thought of in terms of ontological categorization (investigation into the intrinsic nature of the digital) and relational ontology (investigation into relationships between digital things, between persons and digital things, etc.). Consequently, we can assert that in cases where an account of the physicality or materiality from which the digital is derived is required, the novel digital technologies (those listed in the introduction, such as AI) provide this account.

Value

The relationship between a digital thing and value—a digital asset—can be thought of in numerous ways, each appealing to how value is understood. We conceptualize two ways of thinking about value and digital assets that do not appeal to the capture of new value:

- Neutral: In value terms, there is nothing particular about the value of digital assets.

- Utility: Digital assets are valuable due to their utility; the value of digital assets is new and created by new digital technologies themselves.

A digital asset is a store of information, and in accessing that information and rendering that information more useful (through its digitization), the digital asset’s value is understood. As such, we can express a digital asset in terms of the digital store of information; digital information is an abstraction of new digital technologies.

Capturing Intentionality

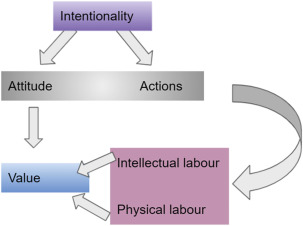

New digital technologies have the capacity to capture meaning sui generis because they represent (express), for the first time, a capture of aspects of intentionality, where we refer to intentionality as attitude plus action.

We reconstruct the labor theory of value as a theory that seeks to address the question of what determines the value of something (a good or service). At a high level, the labor theory of value claims that the value of something is proportional to the socially necessary labor required to produce it. Accordingly, action is the practical process of bringing about an end (where the end is the value to be realized) and can take two forms:

- Physical labor – the work required to bring about the end that is sought concerning the material world.

- Intellectual labor (know-how) – the work required to solve the problem of bringing about the sought end.

AI as a Case Study

Mechanization has changed the action dimension of physical labor; however, the know-how is still very much a derivative value that remains within the purview of humans. New digital technologies such as AI, on the other hand, represent, for the first time, the capacity to capture higher-order forms of intellectual labor: the digital assets derived from them are expressions of value that capture functions traditionally the exclusive purview of higher-order human intelligence.

More explicitly, AI is a term used to describe the intelligence demonstrated by machines in solving problems that require intelligence. AI programs may mimic or simulate cognitive behaviors or traits associated with human intelligence, such as logical reasoning, problem-solving, object detection, and learning. However, AI neither subsumes human intelligence nor replicates the processes of human intelligence.

An AI system is a black box for solving narrow problems, which makes it hard to understand how a decision has been made. The paradox is that AI went from getting computers to do tasks for which humans have explicit knowledge to getting computers to do tasks for which we (humans) have only tacit knowledge. This is the capture of intellectual labor sui generis; the digital technology of AI is an expression of the problem-solving process that the algorithm undertakes, which is a derivative value tethered to this ultimate value.

Between the lines

The current form of AI is still a technological extender for transforming vast masses of data into actionable items that humans can consume. An AI system learns its model directly from data under specific contexts during the training phase and relies on certain assumptions, which leads to failures when those training assumptions are not met. This is what we refer to as sui generis value capture.

However, it is critical to state that the assertion that AI is a capture of value is to be understood within the framework of relational ontology; the AI is not an expression of meaningful information in and of itself. Instead, the value lies in the metadata, meta-learning, tacit knowledge, and ontologies that make the AI system meaningful and allow it to behave in practical terms.

An AI system does not express value (create and capture value) in isolation from human value ontologies. It still operates within, and interacts with, a vast society of cognitive agents to capture value, so that machine epistemology and human cognition can form an organic interface, creating a new and harmonious system of human-machine cognition.

Our main takeaway is to invite the reader to consider the broader notion of novel digital technologies as a representation of the capture of value sui generis (compared with traditional technologies) and that this may be a step change in the capture of value vis à vis the emergence of novel digital technologies.