🔬 Research Summary by Ruijia “Regina” Cheng, a PhD candidate in the Department of Human Centered Design and Engineering at the University of Washington. Her research focuses on improving people’s experience of learning about and working with data and AI.

[Original paper by Ruijia Cheng, Alison Smith-Renner, Ke Zhang, Joel Tetreault, and Alejandro Jaimes]

Overview: Automatic text summarization systems commonly involve humans preparing data or evaluating model performance. However, there is a lack of systematic understanding of humans’ roles, experiences, and needs when interacting with or being assisted by AI. From a human-centered perspective, this paper maps the design opportunities and considerations for human-AI interaction in text summarization.

Introduction

When time is scarce, we want to grasp the main idea of a news article, a social media post, or a research paper quickly, so there is a large demand for high-quality text summaries. However, summarizing is often difficult for humans: it is time-consuming and requires sustained attention and domain knowledge. AI has recently received increasing attention for its application to text summarization. While many are building systems in which humans and AI collaborate to produce summaries, there is a lack of systematic understanding of the design space. What are the different ways humans and AI can collaborate in text summarization? How can we design for user experience and needs with different types of AI summarization assistance?

To answer these questions, we first conducted a systematic literature review of human-AI text generation systems and identified five main types of human-AI interaction for text summarization. Then, we built interactive prototypes for text summarization that represent the five types. We had 16 writers interact with our prototypes and gathered insights about their experience and needs with each type of human-AI text summarization. Based on these findings, we propose several design recommendations for human-AI summarization systems.

Key Insights

We present a formative understanding of the different types of human-AI interaction in text summarization. Through a human-centered approach, we focus on what humans want from AI and how to improve their experience. Specifically, we conducted two studies to explore 1) the different ways humans and AI can interact in text summarization and 2) the human users’ experience and needs with different types of AI assistance in text summarization.

The five types of human-AI interaction in text generation

We conducted a systematic analysis of the existing academic literature to explore how humans and AI interact in text summarization. We reviewed more than 600 academic papers on AI text generation and narrowed them down to 70 papers that contain some type of human-AI interaction workflow. From these 70 papers, we identified five types of human-AI interaction, each featuring distinct human actions:

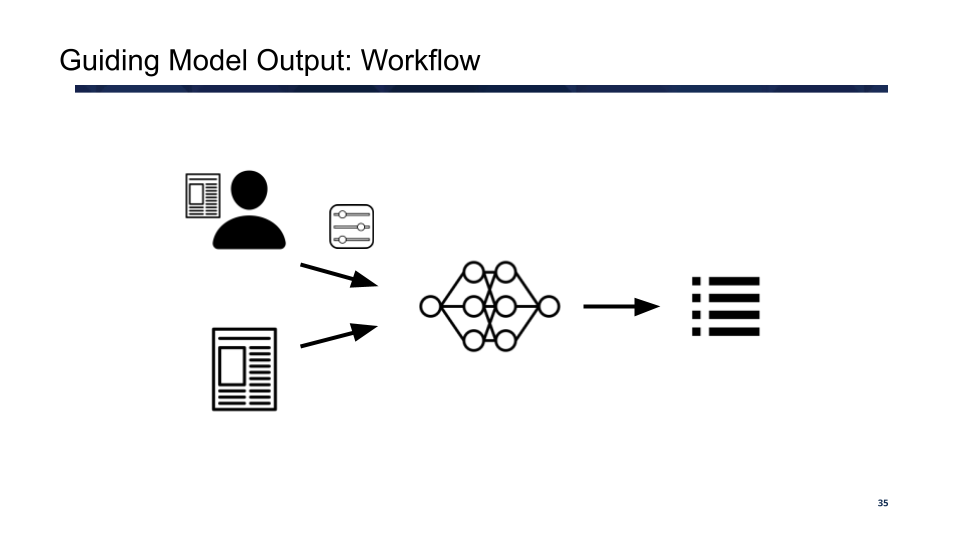

Guiding model output: In this type of human-AI interaction, human users are in the driver’s seat of the generation process, with the power to initiate and control the model output. Humans provide preferences to the model in various forms, such as prompts and adjustments to the input text or parameters. The model, in turn, takes the human input to generate the final product.

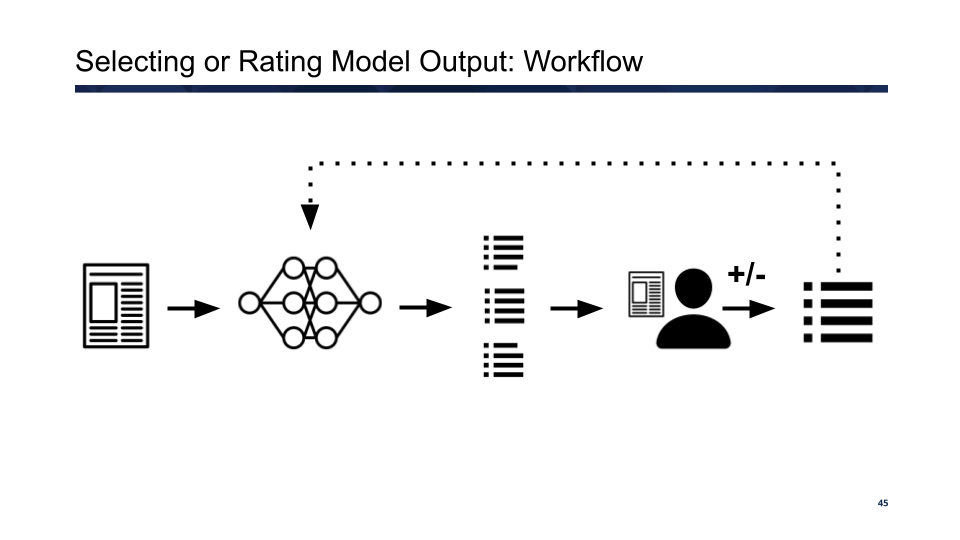

Selecting or rating model output: In this type of human-AI interaction, the model initiates the workflow by generating output candidates. The human user can select from or rate the candidates to support the final output. Such feedback can also be used for real-time, online model training.

Post-editing: In this type of human-AI interaction, the workflow starts with a text drafted by AI, which humans then edit. The edited text can also be used for future model training.

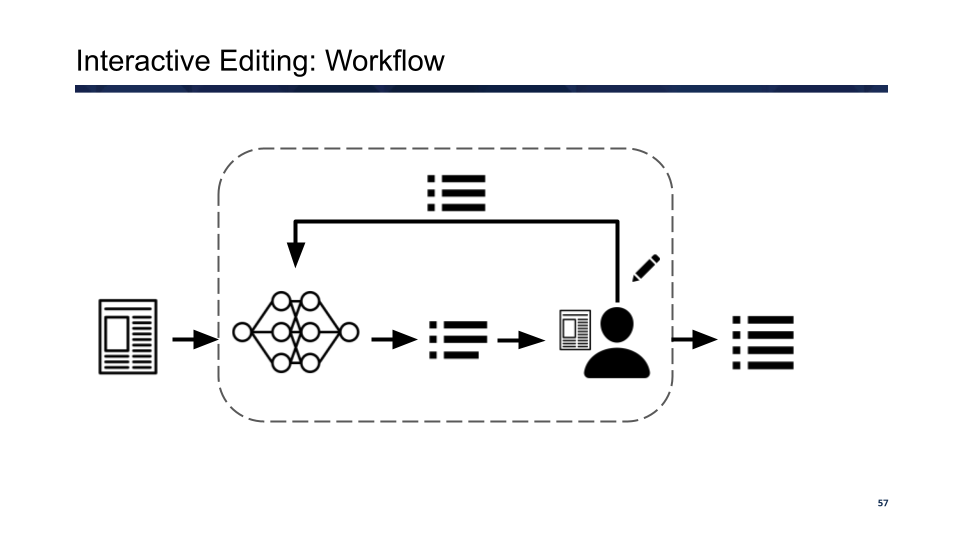

Interactive editing: In this type of human-AI interaction, similar to post-editing, the model generates a draft, and the human provides edits. But then the model iterates based on the human edits, updating and generating more text for the user to continue editing. The final output is a result of such iteration.

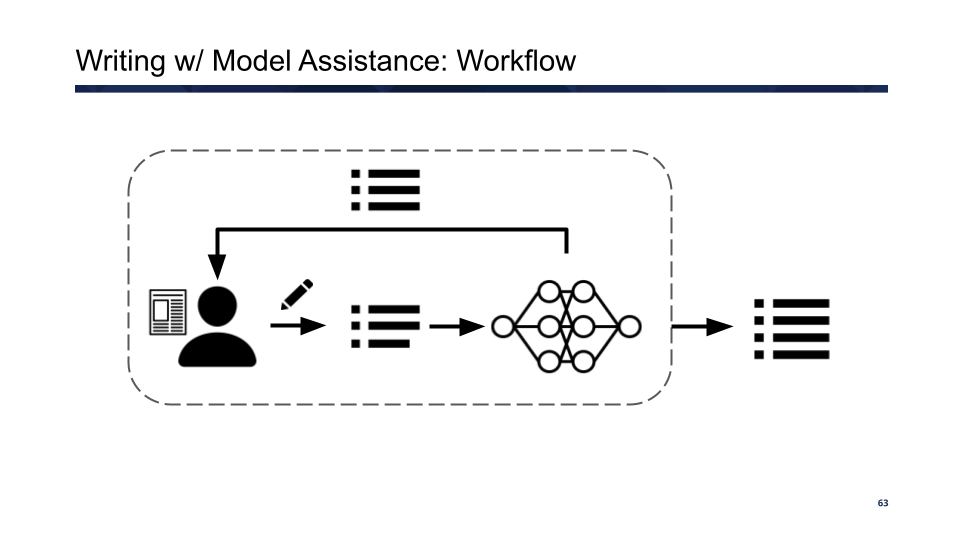

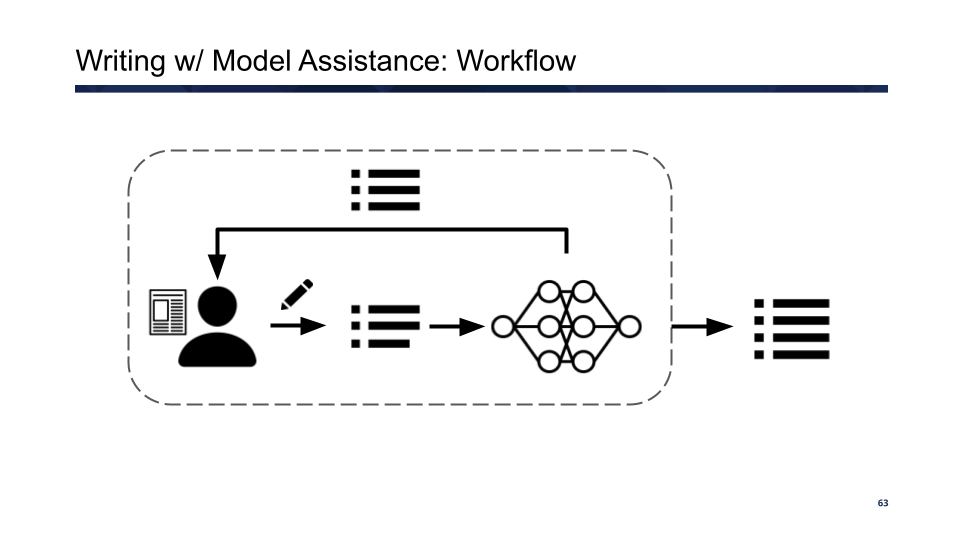

Writing with model assistance: In this type of human-AI interaction, humans initiate the workflow by writing the summary. The model provides suggestions, and human users can revise their writing based on them. In this process, the model iterates, providing additional suggestions based on the humans’ writing, and the final output is the result of this iteration.
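For readers who think in data structures, the five types above can be written down as a small lookup table. This is only an illustrative sketch: the field names (`initiator`, `human_action`, `model_iterates`) and the values assigned to them are our reading of the descriptions above, not a schema from the paper.

```python
from dataclasses import dataclass
from enum import Enum


class Initiator(Enum):
    HUMAN = "human"
    MODEL = "model"


@dataclass(frozen=True)
class InteractionType:
    name: str
    initiator: Initiator   # who starts the workflow (assumed from the descriptions)
    human_action: str      # the distinct human action in this type
    model_iterates: bool   # whether the model responds again to human input


# Hypothetical encoding of the five types described in this summary.
INTERACTION_TYPES = [
    InteractionType("guiding model output", Initiator.HUMAN,
                    "provide prompts or adjust input text and parameters", False),
    InteractionType("selecting or rating model output", Initiator.MODEL,
                    "select from or rate output candidates", False),
    InteractionType("post-editing", Initiator.MODEL,
                    "edit the AI-generated draft", False),
    InteractionType("interactive editing", Initiator.MODEL,
                    "edit the draft while the model keeps updating it", True),
    InteractionType("writing with model assistance", Initiator.HUMAN,
                    "write the summary and revise it based on suggestions", True),
]


def types_started_by(initiator: Initiator) -> list:
    """Return the names of interaction types begun by the given party."""
    return [t.name for t in INTERACTION_TYPES if t.initiator is initiator]


def iterative_types() -> list:
    """Return the names of types in which the model iterates on human input."""
    return [t.name for t in INTERACTION_TYPES if t.model_iterates]
```

Calling `types_started_by(Initiator.HUMAN)` lists the two types in which the human begins the workflow, and `iterative_types()` lists the two in which the final output emerges from back-and-forth iteration.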

Human needs in the five types of human-AI summarization

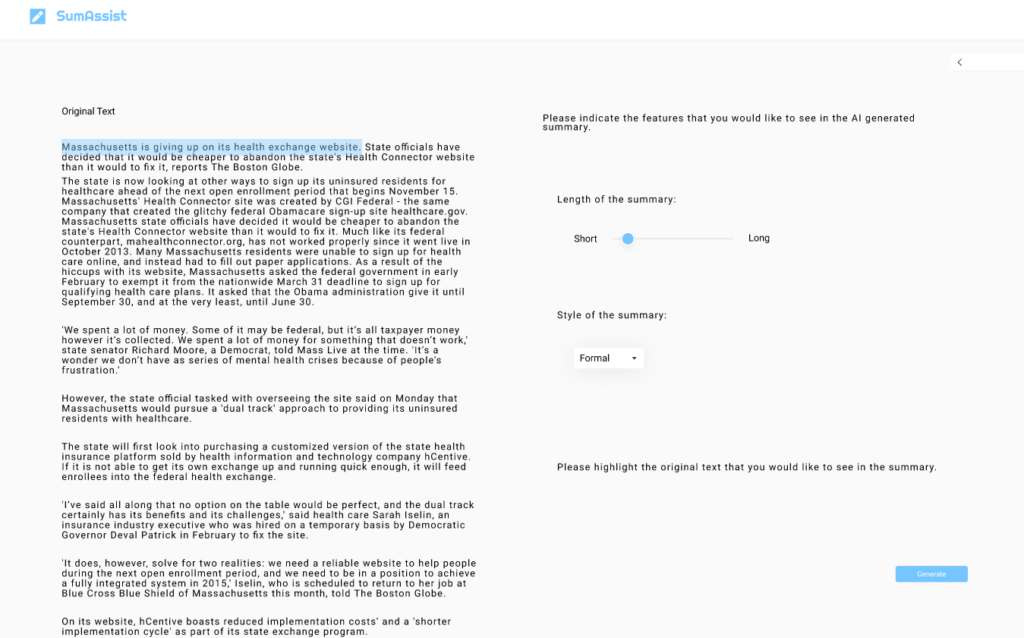

To study the experience and needs human users may have with each type of interaction, we designed interactive mockup prototypes that support the five types of human-AI interaction in text summarization. The screenshot in this section shows our prototype for the guiding model output interaction. All of the prototypes can be found in the full paper.

We then showed the prototypes to 16 users in a design probe interview study, in which users interacted with the five prototypes in hypothetical text summarization scenarios and shared their experiences and needs.

At a high level, we found that while users generally expect AI to make summarization easier, they always want the ability to inspect and intervene. They need transparency about how the AI generates summaries in order to judge how much to trust it.

For example, users found the AI-generated summaries helpful as a baseline when prompted to edit AI outputs (with the post-editing and interactive editing prototypes). However, they tended to treat the AI-generated summaries as authoritative, especially when working in an unfamiliar domain, which raises the concern of overreliance.

Users also valued their control over AI and preferred interactions that allowed them to explicitly prompt the model and participate in generating the summary (e.g., guiding model output and writing with model assistance). The balance between efficiency and control also depended on the stakes of the summarization task. Users demanded more control when summarizing important and serious text. At the same time, they were fine with giving away some control to AI to get summaries faster (e.g., with the selecting and rating model output prototype) if the task was informal.
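One way a system could act on this trade-off is sketched below. It is a minimal illustration under our own assumptions, not something implemented in the study: the `"high"` and `"low"` stakes labels, the `PREFERRED_BY_STAKES` table, and the `suggest_interactions` function are all hypothetical names. The mapping only mirrors the preferences participants described, and an explicit user choice always overrides it.

```python
from typing import List, Optional

# Hypothetical mapping from task stakes to the interaction types that
# participants tended to prefer in that situation (illustrative only).
PREFERRED_BY_STAKES = {
    "high": ["guiding model output", "writing with model assistance"],
    "low": ["selecting or rating model output"],
}


def suggest_interactions(stakes: str, user_choice: Optional[str] = None) -> List[str]:
    """Return the interaction types to offer for a summarization task.

    An explicit user choice always wins. Unknown stakes fall back to the
    high-control options, on the assumption that too much control is a
    safer default than too little.
    """
    if user_choice is not None:
        return [user_choice]
    return PREFERRED_BY_STAKES.get(stakes, PREFERRED_BY_STAKES["high"])
```

For an informal task, `suggest_interactions("low")` offers the faster selecting-or-rating workflow, while `suggest_interactions("low", user_choice="post-editing")` respects the user’s own pick.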

Our full paper contains a complete tour of our findings from the users. Our studies mapped the design space of human-AI interaction in text summarization and offered several design recommendations for future human-AI summarization systems.

Between the lines

Our study is the first to explore the design space of the different types of human-AI interaction in text summarization. We present a series of suggestions for the future design of human-AI text summarization systems, for example:

- Systems should help users develop an appropriate level of trust in and reliance on AI by offering support for explanation and allowing users to participate in the summarization process.

- Systems should allow human users to validate AI outputs and have the “final say” on them. Regardless of interaction type, users should have the option to post-edit AI output.

- Systems should ensure they do not surprise users. For example, systems can let users customize when the AI input comes in and can provide previews of interactive suggestions.

- Systems should support users in tailoring AI outputs to different audiences and purposes.

- Systems should account for how much users value efficiency versus control in different scenarios, either predicting user priorities or allowing users to choose their priorities in the interaction.

We hope our paper can inspire the future designs of human-AI text summarization systems. We also hope future researchers and developers can evaluate our design space through realistic implementations and large-scale experiments.