Written by Connor Wright, research intern at FairlyAI, a software-as-a-service AI audit platform that provides AI quality assurance for automated decision-making systems with a focus on AI equality.

The hiring process is becoming ever more automated, with companies implementing algorithms to conduct keyword searches as well as video analysis. So, where is humanity’s place in this increasingly automated system? To answer this, I refer to both federal and state efforts to preserve its position, with individual city efforts also featuring prominently. I then consider how businesses can navigate this field and ensure they too maintain humanity’s place in the process. Despite control being increasingly ceded to automation, federal and state efforts have ensured that some of it has been handed back to the candidates involved.

Thanks to the power of automation, more and more businesses are using automated hiring tools to help process candidates. From Artificial Intelligence (AI) analysis of videos to keyword searches, the hiring process is slowly ceding its human touch to algorithms. So, how is the hiring process preserving the human aspects that remain? Here, I’ll take a look at how pivotal a role the Civil Rights Act still plays, while also referring to how different states and cities have tackled the issue. To round off, I’ll look at how businesses are being advised to navigate this novel challenge, and how the human touch can still be adequately preserved in an increasingly automated field.

The Civil Rights Act was designed to endure, protecting the rights of the general population while facing bias and injustice head-on. In this way, the impact-orientated act still guards humanity against the frights of the automated hiring process. Any algorithm that discriminates against candidates based on qualities such as race or sex will come face to face with the act itself. For example, an algorithm can only treat candidates differently based on race if the business involved is pursuing affirmative action. In this way, the human can still be seen as somewhat protected within the automated hiring process. However, what about other aspects of life such as financial, marital and religious information? In steps the Equal Employment Opportunity Commission (EEOC).

The EEOC has ensured that the hiring process (whether automated or not) still protects further aspects of human existence. Here, marital status cannot be used as the basis of discrimination in any algorithmic hiring process, even if questions about it are asked of both men and women. Such questions are deemed to harbour the intent to discriminate based on the likelihood of employees starting families and hence taking parental leave. Likewise, financial information cannot be used as a filter either. Beyond this, religious information is not to be the basis of candidate selection unless it is a bona fide occupational qualification. This label covers attributes that warrant a special exception in the selection process, such as being required to be Jewish to become a rabbi. Hence, while some decisions are becoming automated, there are decisions that the algorithm cannot make, which preserves the humanity within the hiring process. Now, what have states and cities done to go one step further?
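
To make these limits concrete for an automated pipeline, here is a minimal Python sketch of stripping such attributes from a candidate record before any screening step sees them. The field names (`marital_status`, `financial_info`, `religion`) and the `bona_fide` parameter are hypothetical, chosen only for illustration; removing fields in this way is a starting point rather than proof that a system is free of discrimination.

```python
# Hypothetical field names for illustration; a real schema will differ.
RESTRICTED_FIELDS = frozenset({"marital_status", "financial_info", "religion"})


def screening_view(record, bona_fide=frozenset()):
    """Return a copy of the record without restricted fields.

    Fields listed in `bona_fide` are kept, modelling the case where an
    attribute is a bona fide qualification for the role.
    """
    blocked = RESTRICTED_FIELDS - set(bona_fide)
    return {key: value for key, value in record.items() if key not in blocked}


candidate = {
    "name": "A. Candidate",
    "years_experience": 6,
    "marital_status": "married",
    "religion": "Jewish",
}

# General role: none of the restricted fields reach the screening step.
print(screening_view(candidate))

# Role where religion is a bona fide qualification (the rabbi example).
print(screening_view(candidate, bona_fide={"religion"}))
```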

The California Consumer Privacy Act (CCPA), the Illinois Artificial Intelligence Video Interview Act (referred to here as the Illinois Video Act, or IVA), and state approaches to height and weight discrimination immediately jump to mind. Passed in 2018, the CCPA granted Californian consumers more rights over the data that businesses collect on them: the right to request deletion of their data, the right to know what data the business holds, and the right to non-discrimination for exercising these rights. In terms of the hiring process within California, candidates are able to emphasise their awareness of the importance of their data, and remind businesses that they are more than mere data points.

The IVA is somewhat more specific, but just as interesting. Passed by the Illinois General Assembly in 2019, it requires candidates to give their explicit consent before a video submitted as part of the hiring process is analysed by AI. Aspects such as the candidate’s body language and facial expressions can be analysed and serve as indicators to recruiters of the talent at hand. As a result, to utilise the technology in this way, recruiters in Illinois must inform the candidate that AI will be used, explain how it will analyse the video, and delete the video within 30 days of a candidate’s request to do so. While this covers only a small part of the hiring process, the human now has more control over how they are assessed, with the option to ‘opt-out’ proving crucial to this increase in control.
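
The requirements described above could be tracked in software along the following lines. This is a sketch only: the `VideoSubmission` class, its fields, and the helper functions are hypothetical names, and the 30-day window is taken from the description above rather than from the text of the statute.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Deletion window as described in the article (an assumption, not legal advice).
DELETION_WINDOW = timedelta(days=30)


@dataclass
class VideoSubmission:
    candidate_id: str
    informed_of_ai_use: bool = False      # candidate told that AI will be used
    explained_how_ai_works: bool = False  # candidate told how the video is analysed
    consent_given: bool = False           # explicit consent recorded
    deletion_requested_on: Optional[date] = None


def may_analyse(sub):
    """AI analysis is allowed only when notice, explanation and consent all hold."""
    return sub.informed_of_ai_use and sub.explained_how_ai_works and sub.consent_given


def deletion_deadline(sub):
    """Latest date to delete the video, or None if no request was made."""
    if sub.deletion_requested_on is None:
        return None
    return sub.deletion_requested_on + DELETION_WINDOW


submission = VideoSubmission("c-001", informed_of_ai_use=True, explained_how_ai_works=True)
print(may_analyse(submission))  # consent has not been recorded yet

submission.consent_given = True
submission.deletion_requested_on = date(2020, 3, 1)
print(may_analyse(submission), deletion_deadline(submission))
```

Keeping notice, explanation and consent as separate flags means a missing step is visible in the record, rather than being folded into a single "approved" field.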

In the arena of height and weight discrimination, various states have adopted measures to prevent any hiring process (automated or not) from discriminating on these two grounds. For example, Michigan’s Elliott-Larsen Civil Rights Act, in force since 1977, prohibits discrimination on the basis of 10 categories in the hiring process, including weight. Furthermore, cities such as Binghamton, NY, Madison, WI, and San Francisco, CA have added height and weight to their municipal codes (with Madison adding “physical appearance”). In this sense, automated hiring processes are not able to discriminate based on these two aspects, which is particularly interesting given that algorithms don’t actually ‘see’ the candidate. Nevertheless, more is again being done to preserve humanity in the hiring process, which matters given how damaging it is to be discriminated against on physical appearance by an algorithm that doesn’t even ‘see’, but is coded to discriminate in that way.

So, how can businesses help themselves navigate these considerations? Common advice includes explaining to candidates how the automated process works (as in the IVA requirements), as well as considering the relevance of the data being collected and whether it actually needs to be collected. Personally, I would advise businesses to think about establishing a helpline for their automated services, so candidates know where to direct their questions. Furthermore, different positions will require different amounts of data, such as the difference between hiring a new accountant and a new CEO. Companies therefore ought to be aware of what data will actually help their decision, and not just collect data for data’s sake.

Overall, the increasing automation of the hiring process has rightly brought humanity’s place in it into consideration. However, I believe that humanity can still be preserved in the practice. Both federal and state efforts have been made, with the Civil Rights Act in particular continuing to protect this human aspect. While some state efforts are more developed than others, candidates are still being afforded more control over a process that is slowly ceding it to algorithms. Businesses have ways of proceeding that ensure the human still features as it should, and hopefully legislation continues to reflect that.

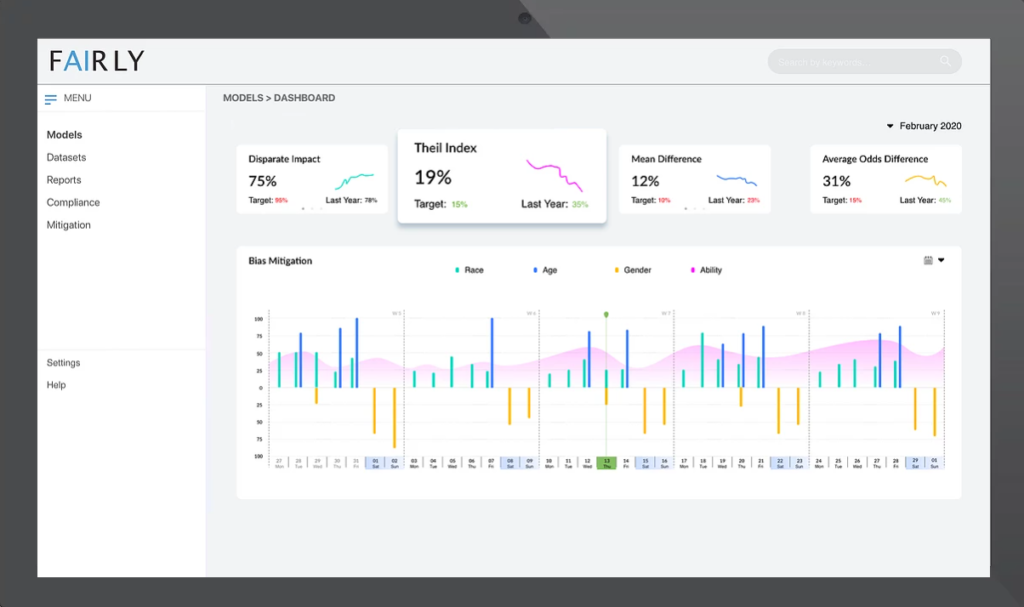

Fairly’s mission is to provide quality assurance for automated decision-making systems. Their flagship product is an easy-to-use tool that researchers, startups and enterprises can use to complement their existing AI solutions, regardless of whether they were developed in-house or with third-party systems. Learn more at fairly.ai.