🔬 Research Summary by Majeed Kazemitabaar, a PhD student in Computer Science at the University of Toronto researching the intersection of Human-Computer Interaction and Education, specifically focusing on AI and Programming in learning environments.

[Original paper by Majeed Kazemitabaar, Runlong Ye, Xiaoning Wang, Austin Z. Henley, Paul Denny, Michelle Craig, Tovi Grossman]

Overview: LLM-powered tools like ChatGPT can offer instant support to students learning programming; however, they provide direct answers with code, which may hinder deep conceptual engagement. We developed CodeAid, which uses LLMs to produce helpful and technically correct solutions without revealing direct code. CodeAid answers conceptual questions, generates pseudo-code with line-by-line explanations, and annotates students’ incorrect code with suggested fixes. We deployed CodeAid in a large class of 700 students over a 12-week semester to explore students’ usage patterns and their perceptions of AI assistance, CodeAid’s response quality, and educators’ perceptions of CodeAid.

Introduction

Tools like ChatGPT can assist students requiring help in programming classes. They can explain code snippets and coding concepts, fix students’ incorrect code, and even write entire code solutions. However, their productivity-driven and direct responses are concerning in educational settings. Currently, many educators are prohibiting their usage in introductory programming classes to avoid academic integrity issues and students’ over-reliance on AI.

In this research, we explored the design and evaluation of “pedagogical” LLM-powered coding assistants specifically designed to scale up instructional support in educational contexts.

We developed CodeAid and deployed it in a programming class of 700 students as an optional resource similar to office hours and Q&A forums for their entire 12-week semester.

Overall, we collected data from the following sources:

- [ ✏️📋] Weekly surveys about using CodeAid vs. other non-AI resources

- [ 🤖⭐] CodeAid’s log data

- [ 🎤👩‍🎓] Interviews with 22 students about why and how they used CodeAid

- [❓📝] Final survey asking students to compare CodeAid with ChatGPT

- [ 👨‍🏫🎤] Interviews with 8 computing educators about CodeAid, their ethical and pedagogical considerations, and how it compares with other resources

During the deployment, 372 students used CodeAid and asked 8,000 queries. We thematically analyzed 1,750 of the queries and CodeAid’s responses to understand students’ usage patterns and types of queries (RQ1), and CodeAid’s response quality in terms of correctness, helpfulness, and directness (RQ2). Furthermore, we qualitatively analyzed data collected from the interviews and surveys to understand the perspectives of students (RQ3) and educators (RQ4) about CodeAid.

CodeAid

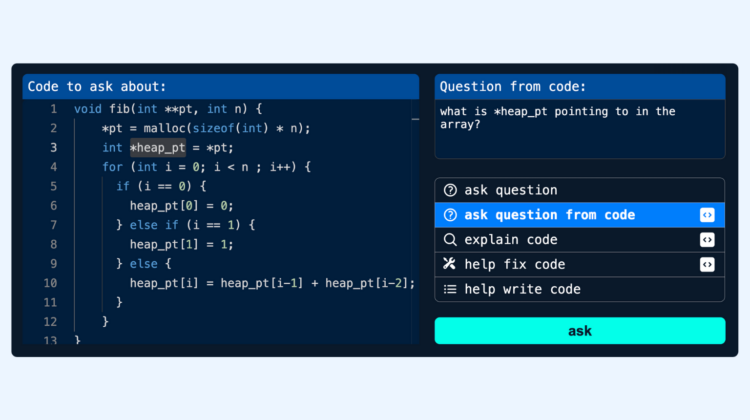

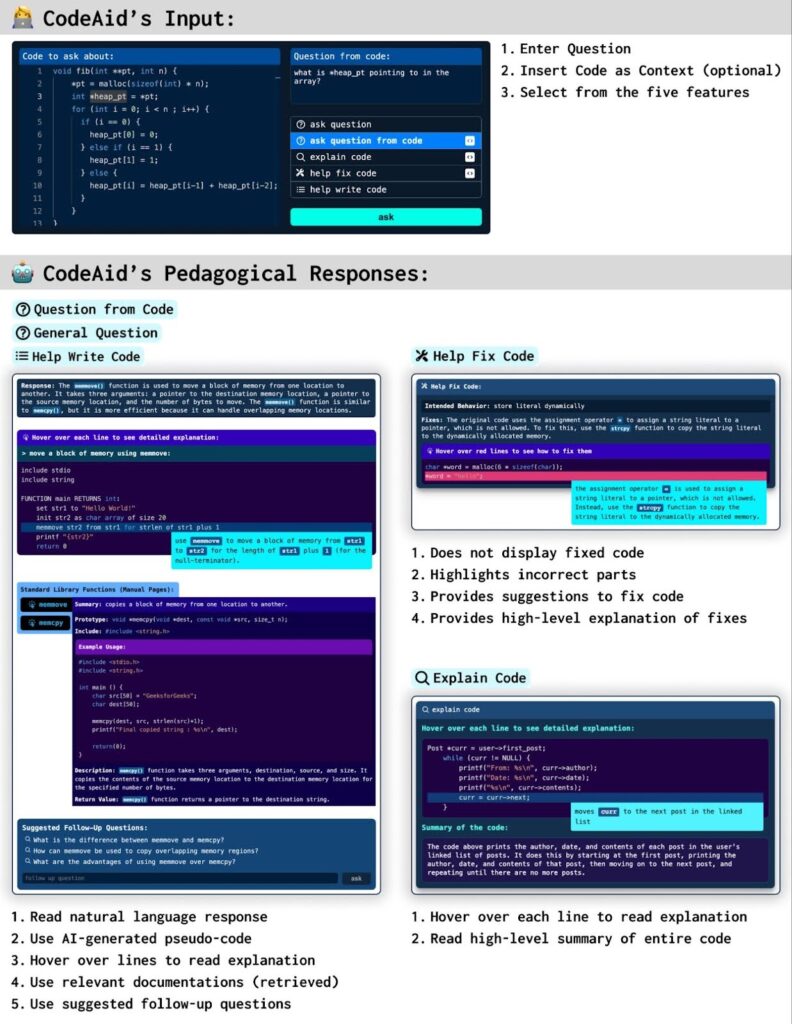

CodeAid was developed with five main features that were iteratively updated during the deployment based on student and educator feedback:

- General Question

- Question from Code

- Help Write Code

- Explain Code

- Help Fix Code

Below are some of the unique properties of CodeAid:

- Interactive Pseudo-Code: Instead of generating code, CodeAid generates interactive pseudo-code. Students can hover over each line of the pseudo-code to see a detailed explanation of that line.

- Relevant Function Documentation: Not everything needs to be AI-generated. CodeAid uses Retrieval-Augmented Generation (RAG) to display official, instructor-verified documentation for functions relevant to students’ queries. This was designed to save time and to let students learn how to use those functions from code examples.

- Suggested Follow-Up Questions: CodeAid also generates several suggested follow-up questions for students to ask after each response.

- Annotating Incorrect Code: When students use the Help Fix Code feature, CodeAid does not display the fixed code. Instead, it highlights the incorrect parts of the students’ code and suggests fixes.

- Interactive Explain Code: Instead of displaying a high-level explanation of the entire code in a paragraph, CodeAid renders an interactive component in which students can hover over each line to understand the purpose and implementation of each line of the provided code.

- Stream Rendering of Interactive Components: CodeAid renders interactive components as the response is streamed from the LLM, enabling a more interactive experience with less delay.
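The stream-rendering idea in the last item can be illustrated with a minimal sketch. This is not CodeAid's implementation, which this summary does not describe; it only assumes that a response arrives as text chunks split at arbitrary points, and that each pseudo-code line can be rendered once its newline has arrived. The function name `stream_lines` and the sample chunks are hypothetical.

```python
from typing import Iterable, Iterator


def stream_lines(chunks: Iterable[str]) -> Iterator[str]:
    """Yield each complete line as soon as its terminating newline arrives."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        # A single chunk may complete zero, one, or several lines.
        while "\n" in buffer:
            line, buffer = buffer.split("\n", 1)
            yield line
    if buffer:
        # Flush a final line that was not newline-terminated.
        yield buffer


# Hypothetical chunks, split mid-line the way a streaming API might deliver them.
chunks = ["set total", " to 0\nfor each ", "item in list\n", "  add item to total"]
rendered = list(stream_lines(chunks))
for number, line in enumerate(rendered, start=1):
    print(f"{number}: {line}")
```

A user interface built on this pattern could attach a hover explanation to each line as it is yielded, rather than waiting for the full response.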

Deployment Results

A distinctive aspect of this research was deploying CodeAid in a large class for an entire semester. Below are some of the high-level results from this deployment.

RQ1: Students’ Usage Patterns and Type of Queries

First, let’s look into the high-level statistics of students’ usage of CodeAid:

- Overall, 372 students used CodeAid, of whom 300 consented to the use of their data. About 160 students used CodeAid fewer than 10 times, whereas 62 students used it more than 30 times.

- Furthermore, women used CodeAid significantly more frequently than men (33.8 queries vs. 18.4 queries) while representing only 30% of the entire class.
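To put these counts in proportion, the short calculation below derives a few rates from the figures reported in this summary. It is only a sketch: the inputs (700 students, 8,000 queries) are likely rounded, and the variable names are our own.

```python
# Figures as reported in this summary; some are likely rounded.
class_size = 700
users = 372
consenting_users = 300
total_queries = 8000
analyzed_queries = 1750


def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


adoption_rate = percent(users, class_size)                 # share of the class that used CodeAid
consent_rate = percent(consenting_users, users)            # share of users who consented
sampling_rate = percent(analyzed_queries, total_queries)   # share of queries thematically analyzed

print(f"Adoption: {adoption_rate}%")   # Adoption: 53.1%
print(f"Consent: {consent_rate}%")     # Consent: 80.6%
print(f"Sampled: {sampling_rate}%")    # Sampled: 21.9%
```

In other words, roughly half of the class tried CodeAid, about four in five users consented to data use, and a bit more than a fifth of all queries were thematically analyzed.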

The thematic analysis revealed four types of queries that students asked CodeAid:

- Asking Programming Questions (36%)

  - Code and conceptual clarification queries (70%) about the programming language, its syntax, its memory management, and its operations.

  - Function-specific queries (15%) about the behaviour, arguments, and return types of specific functions.

  - Code execution probe queries (15%) in which students used CodeAid like a compiler to verify execution or evaluate the output of their code on particular inputs.

- Debugging Code (32%)

  - Buggy code resolution queries (68%) that focused on fixing students’ incorrect code based on a provided behaviour.

  - Problem source identification queries (23%) in which students asked CodeAid to identify the source of the errors in their code.

  - Error message interpretation queries (9%) in which students asked CodeAid to explain an error message they were receiving.

- Writing Code (24%)

  - High-level coding guidance queries (56%) in which students asked “how-to” questions about a specific coding task.

  - Direct code solution queries (44%) in which students asked CodeAid to generate the direct solution (by copying the task description of their assignment).

- Explaining Code (6%): such as explaining the starter code provided in their assignments.
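The percentages above appear at two levels. The sketch below assumes that each sub-type percentage is a share of its parent category (an assumption, though the sub-type figures do sum to 100% within each category) and multiplies the two levels to estimate each sub-type's share of all analyzed queries. The dictionary layout and function name are illustrative only.

```python
# Percentages as reported in this summary.
# Assumption: each sub-type percentage is a share of its parent category.
taxonomy = {
    "Asking Programming Questions": (36, {
        "Code and conceptual clarification": 70,
        "Function-specific": 15,
        "Code execution probe": 15,
    }),
    "Debugging Code": (32, {
        "Buggy code resolution": 68,
        "Problem source identification": 23,
        "Error message interpretation": 9,
    }),
    "Writing Code": (24, {
        "High-level coding guidance": 56,
        "Direct code solution": 44,
    }),
    "Explaining Code": (6, {}),  # no sub-types reported
}


def overall_shares(taxonomy: dict) -> dict:
    """Convert within-category percentages into shares of all analyzed queries."""
    shares = {}
    for category_pct, subtypes in taxonomy.values():
        for name, sub_pct in subtypes.items():
            shares[name] = round(category_pct * sub_pct / 100, 1)
    return shares


shares = overall_shares(taxonomy)
for name, pct in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{name}: {pct}% of analyzed queries")
```

Under this assumption, conceptual clarification questions make up about a quarter of the analyzed queries (25.2%), while direct requests for a code solution account for roughly one in ten (10.6%).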

RQ2: CodeAid’s Response Quality

The thematic analysis showed that about 80% of the responses were technically correct. The General Question, Explain Code, and Help Write Code features all responded correctly 90% of the time, while Help Fix Code and Question from Code were correct 60% of the time.

In terms of not revealing direct solutions, CodeAid almost never revealed direct code. Instead, it generated:

- Natural language responses (43%)

- High-level response with pseudo-code of generic example code (16%)

- Pseudo-code of a specifically requested task (6%)

- Suggested fixes for minor syntax errors (16%)

- Suggested fixes for semantic issues (8%)

RQ3: Students’ Perspectives and Concerns

Based on the student interviews and surveys:

- Students appreciated CodeAid’s 24/7 availability and being “a private space to ask questions without being judged”.

- Students also liked CodeAid’s contextual assistance, which provided a faster way to access relevant knowledge, allowed students to phrase questions however they wanted, and produced responses that were relevant to their class.

- In terms of the directness of responses, some students indicated that they wanted CodeAid to produce less direct responses, like hints. Interestingly, some students self-regulated by avoiding features that produced more direct responses.

- In terms of trust, some students trusted CodeAid while others found that “it can lie to you and still sound confident.” Some students trusted CodeAid because it was part of the course, and the instructor endorsed it.

- When asked about their reasons for NOT using CodeAid, students mentioned a lack of need, a preference for existing tools, wanting to solve problems by themselves, or a lack of trust in AI.

- Comparing CodeAid with ChatGPT: even though ChatGPT was prohibited, students reported using it slightly more than CodeAid. They preferred its more straightforward interface and larger context window to ask about longer code snippets. However, some students did not like ChatGPT since it did much of the work for them.

RQ4: Educators’ Perspectives and Concerns

- Overall, most educators liked the integration of pseudo-code with line-by-line explanations as it provides structure, reduces cognitive load, and is better than finding exact code solutions that are available on the internet. However, some educators were concerned that when the algorithm is what students need to learn, revealing its pseudo-code will harm their learning.

- Most educators wanted to keep students away from ChatGPT. They would rather encourage students to use CodeAid instead and even suggested integrating code editors with code execution inside CodeAid.

- Educators wanted the ability to customize CodeAid with course topics or when to activate/deactivate displaying pseudo-code.

- Lastly, most educators wanted a monitoring dashboard to see a summary of asked questions and generated responses as well as to reflect on their instruction. However, other educators mentioned that students should not feel like they are being watched.

Between the lines

Looking deeper into our findings, we were able to propose four major design considerations for future educational AI assistants, positioned within four main stages of a student’s help-seeking process:

- D1 – Exploiting AI’s Unique Benefits: when deciding between AI and non-AI assistance (like Q&A forums, debuggers, and documentation), what unique benefits can AI assistance offer?

- D2 – Designing the AI Querying Interface: once the user decides to use AI assistance, how should students formulate questions and provide context, particularly in terms of problem identification, query formulation, and context provision?

- D3 – Balancing the Directness of AI Responses: once the user asks a question, how directly should the AI respond? And who should have control over the directness level?

- D4 – Supporting Trust, Transparency, and Control: upon receiving a response, how can students evaluate it and, if necessary, steer the AI towards a better response?

CodeAid is one of the first steps in the ethical and pedagogical usage of LLMs to scale up instructional support in educational settings. Hopefully, these results will guide the design and development of future LLM-powered educational assistants in all learning domains.