This guest post was contributed by Jen Brige, a blogger who believes in our ability as a species to create a better future through advancements in technology.

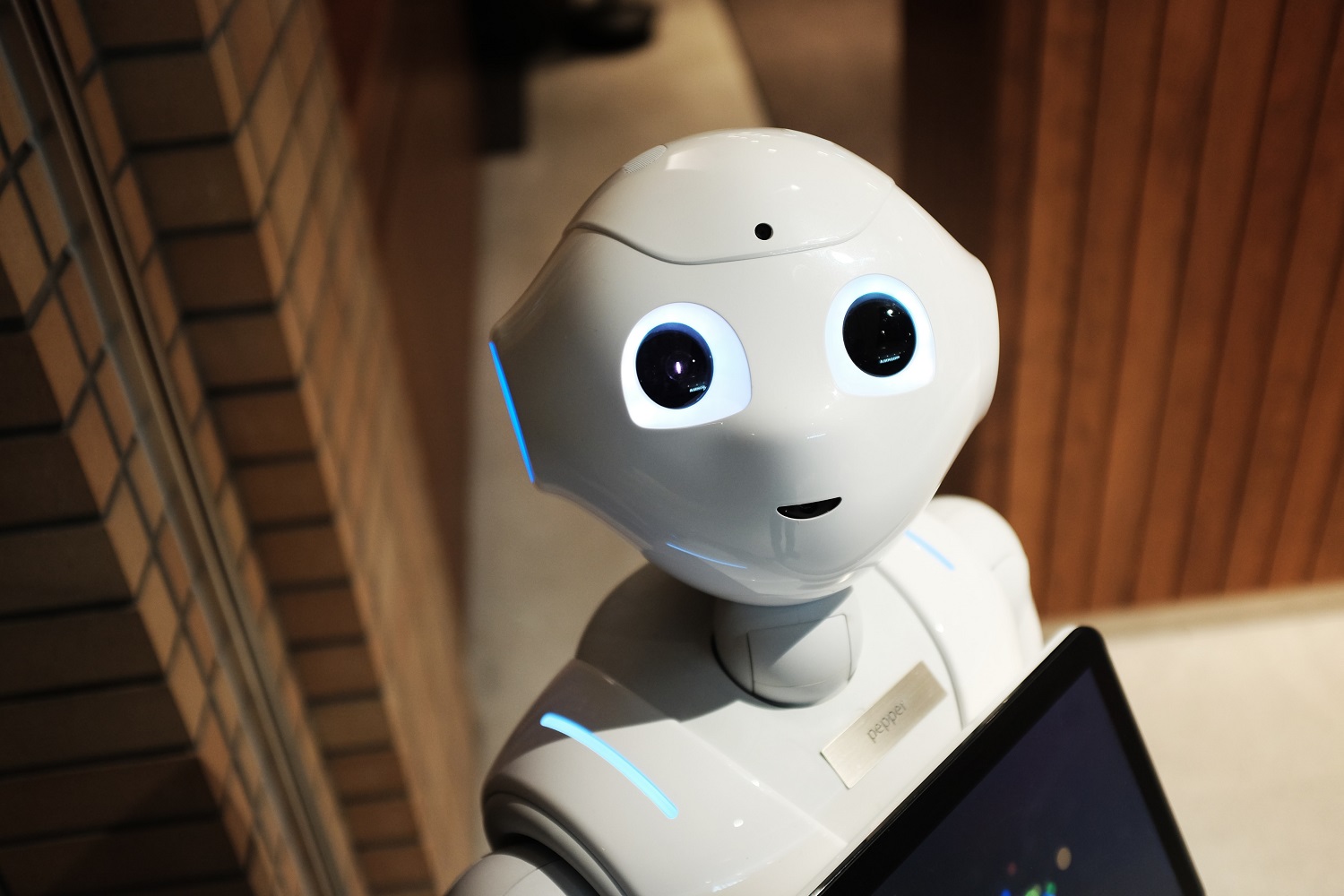

Artificial intelligence and robots have gotten steadily more advanced in recent years. It’s been a long road to this point, but as Wired’s history of robotics puts it, the technology “seems to be reaching an inflection point” at which processing power and AI can produce truly smart machines. This is something most people who are interested in the topic have come to understand. What comes next, though, might be the question of how human we can make modern robots, and whether we really need or want to.

Breaking down these questions a little bit, we’ll begin with perhaps the most fundamental one:

Do We Need Robots to Be More Human?

The idea of humanoid robots armed with full intelligence has always been appealing in a certain way. It makes us imagine easier and more comfortable futures, and for some it calls to mind endearing sci-fi characters from film and literature. We like the idea of robots we can befriend, or which can help us around the house. But do we really need humanoid AI machines?

It’s a particularly valid question when you think about some of the aspects of modern business and society that make for the broadest use of AI and machine learning today.

For instance, many associate AI with the impending driverless car revolution, and the idea of the IoT connecting smart cities. These are exciting ideas that may ease us into a future of safer, cleaner roads, both in cities and on highways. But for the most part, the AI involved in these scenarios has nothing to do with robots; we don’t imagine metallic, humanoid figures taking the wheel, and indeed we already have cars that will steer and guide themselves without such props.

Or consider the use of AI in modern marketing, which is perhaps more subtle yet extremely widespread. An overview of marketing practices by Ayima clearly discusses the fusion of technology with marketing teams, and specifically speaks to the importance of machine learning for today’s companies. Advanced, highly capable AI programs can be used to comb through a company’s content and the web at large in order to gather and analyze data, all as a form of automated research for marketing efforts. It’s an intelligent technological capability that is getting more impressive (and being more widely used) with each passing year — but it doesn’t raise any need for humanoid robots.

Examples like these go on and on. Some restaurants are using AI to handle orders; some financial firms use AI to analyze markets; the technology is being applied in agriculture to responsibly improve crop yields and minimize waste and environmental effects. All of these represent expanding frontiers for AI that do not and will not, for the foreseeable future, require actual robotic figures.

Should We Make Robots More Human?

Despite the examples above, there are of course some instances in which more humanoid AI machines can be of use. And even if there weren’t, innovators in the related fields would likely be working toward such machines simply to push boundaries and create new products. So, since we seem to be moving in that direction anyway, it’s also fair to ask if we should be trying to make robots more human.

There are certainly some negatives to consider when addressing this question. Our post on ‘Social Robots and Empathy’ addressed some of these negatives, essentially by theorizing that human-like, empathetic robots could actually cause us to empathize less with other people. Many would agree that we already struggle enough with empathy in modern society. And if we eventually begin to socialize often with humanoid robots designed to respond to us the way we want them to, this problem could become greater. Empathizing with other human beings — with their own complex circumstances and desires — may simply begin to feel difficult in comparison to empathizing with robotic beings which, effectively, want what’s best for us.

There are also the job concerns to keep in mind. Some have taken to arguing that an age of robots won’t actually be a net-negative development for job markets. AI can create jobs about as easily as it can take them away, and we’re a long way away from a robot workforce. Nevertheless, it’s a virtual certainty that more human robots will eventually be considered for some jobs that AI systems can’t perform. For instance, an AI program can take your order in a restaurant, but a humanoid robot could deliver food, refill your water, and check back in to ask how you’re enjoying your meal. An AI program can check you into a hotel and confirm your reservation, but a robot could come to carry your bags and escort you to your room. Of course, robots that look like robots (and not humans) could do these jobs too. But it’s conceivable that employers and customers alike might be more willing to accept human-like machines in these scenarios.

On the other hand, there are plenty of “pros” to the idea of making human-like robots as well. They might appear to understand us better; they might blend into society more effectively; they might be able to comfort our children or assist our elderly. It’s just a matter of whether or not we can make them “human” enough to realize these potential benefits.

So – Can We Do It?

Whether or not we can make robots more fully human depends, naturally, on a range of technological innovations. We need to see even better AI and even greater processing power, more fluid movements, and more convincing designs. Ultimately though, the effort may come down to two main goals: bridging the uncanny valley and developing theory of mind.

The uncanny valley is something you’ll see referenced in virtually every modern piece about robot and human interaction. Phys, in its ongoing coverage of science and tech, summed up the issue nicely in a 2018 post, explaining that the uncanny valley describes a point at which robots stop being comforting and start being disturbing. Research has shown that we’re comfortable with robots when they have features we identify with, but that if they start to resemble us too closely, we begin to find them disturbing, or even repulsive. Bridging this gap, or perhaps deliberately stopping short of it, will be crucial in the creation of human-like robots. On the one hand, it’s conceivable that some robots in the future will become so advanced that they’re nearly indistinguishable from us (think Westworld, if you’re an HBO fan). On the other, it may be more likely that the push for more human robots stops short of exact physical features, such that we get robots that talk, think, and move like us, but are not made to exhibit fully humanoid features.

As for theory of mind, it’s something that was discussed in a Quartz article about teaching robots to “read minds” in order to make them more human-like. The article was not referring to the idea of robots actually “reading minds” in the traditional sense. Rather, it explained the need to teach them theory of mind — which it defined as the ability to attribute mental states (beliefs, goals, desires, etc.) to others. In other words, it means teaching robots to recognize us as the complex, conscious beings we are, as opposed to “something purely mechanistic and inanimate.” Oddly enough, to teach robots to be human, we need to first teach them to recognize fully that we are human.

One way or another, this will all be a process. There likely won’t be a point at which we make a collective decision about just how human to make robots, nor will there be a time when we recognize a machine as being the most human-like we’re going to produce. But there’s no end to the intrigue as we continue to see advances in AI and robotics.

Article specially written for montrealethics.ai

By Jen Brige