🔬 Research Summary by Travis Greene, an Assistant Professor at Copenhagen Business School’s Department of Digitalization with an interdisciplinary background in philosophy and research interests in data science ethics and machine learning-based personalization.

[Original paper by Travis Greene, Amit Dhurandar, and Galit Shmueli]

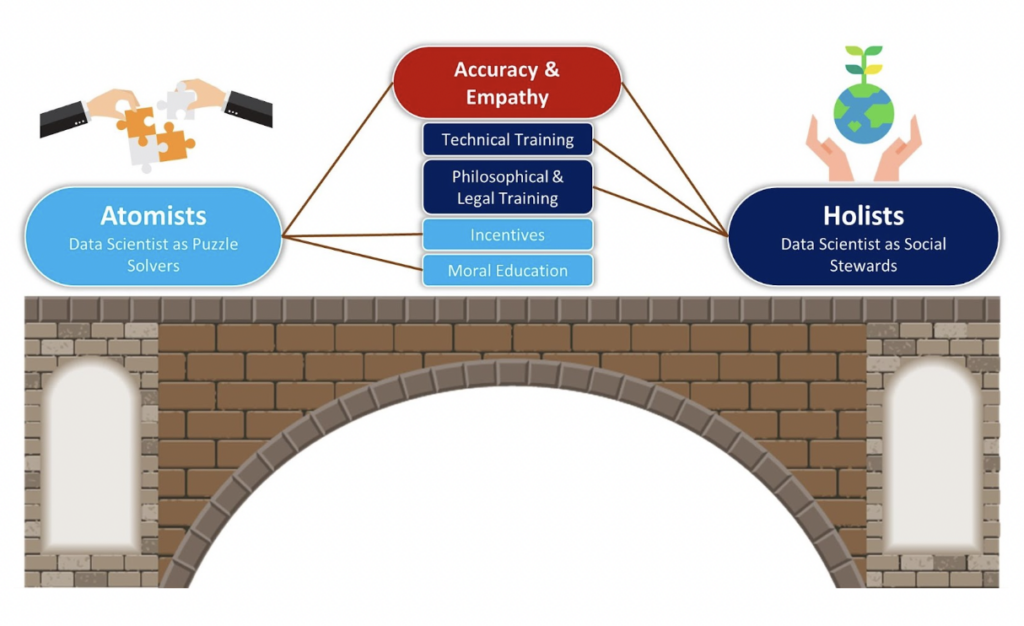

Overview: The role of ethics in AI research has sparked fierce debate among AI researchers on social media, at times devolving into counter-productive name-calling and threats of “cancellation.” We diagnose the growing polarization around AI ethics issues within the AI community, arguing that many of these ethical disagreements stem from conflicting ideologies we call atomism and holism. We examine the fundamental political, social, and philosophical foundations of atomism and holism. We suggest four strategies to improve communication and empathy when discussing contentious AI ethics issues across disciplinary divides.

Introduction

As public concern mounts over the use of AI-driven research to enable surveillance technologies, deep fakes, biased language models, misinformation, addictive behaviors, and the discriminatory use of facial recognition and emotion-detection algorithms, data scientists and AI researchers appear divided along ideological lines about what to do.

Historically, many eminent scientists and mathematicians have held that science and values are, and should remain, separate. Today, a growing number of data science researchers see things differently. They argue that values, particularly those related to the desirability of social and political goals, implicitly influence the foundations of data science practices. While some may view the inclusion of ethics impact statements in several major AI conferences and journals as a sign that the value-neutral ideal of science is no longer tenable, a vocal cadre of data scientists is pushing back and asserting the importance of academic freedom and value neutrality.

We worry that the conflict between these two ideological camps threatens to polarize the AI and data science communities, lowering the prospects that new AI-based technologies will contribute to our collective well-being.

Key insights

An ideology is an all-encompassing worldview advancing a political and ethical vision of a good society. To better understand the nature of ethical disagreements in data science, we introduce a simple ideological taxonomy we call atomism and holism. Our taxonomy aims to make each ideology’s implicit beliefs, assumptions, and historical foundations more explicit so they can be reflected on, refined, and more openly discussed within the AI and data science community as ethical disagreements arise.

The “two cultures” within the data science community

In 1959, at the height of the Cold War and as the US military-industrial complex established itself, scientist and writer C.P. Snow worried about a growing divide between two academic cultures, the “hard sciences” and the “humanities,” whose specialization left them increasingly hostile toward one another and unmotivated to communicate. We suggest that a similar dynamic may be stoking division within the larger data science community. Inspired by philosopher and historian of science Thomas Kuhn, we sketch two guiding metaphors capturing core differences between rival atomist and holist research communities.

Atomists: Data scientists as puzzle solvers

Through a process of disciplinary socialization and training, atomists see themselves as acquiring a constellation of shared beliefs, values, and techniques—in short, a paradigm—that permits progress on open problems, or “puzzles,” that the paradigm identifies as solvable. Commitment to the paradigm identifies a researcher as a member of a distinct scientific community. During “normal” science periods, the legitimacy of the paradigm’s values and traditions is presumed, narrowing researchers’ focus on the task of more reliably and efficiently gathering relevant facts. Because the atomist’s scientific identity stems from loyalty to the paradigm, atomists worry that undue focus on external social and ethical issues not only slows down puzzle-solving but threatens both the integrity of the paradigm and their autonomy.

Holists: Data scientists as social stewards

In contrast, holist data scientists view growing public concerns over the social impact of AI as anomalies signaling a paradigmatic crisis that ultimately requires a paradigm shift. Holists thus propose revolutionary changes in perspective, along with new disciplinary procedures, open problems, and traditions in AI and data science. In the emergent holist paradigm, data scientists see themselves as social stewards or fiduciaries working on behalf of society and advancing substantive social values and human interests through data science research and applications.

Holists are concerned about unjust power differentials and coercive dependency relationships that may arise due to the applications of AI-based technologies. The role of social steward or fiduciary aligns with holist beliefs that the self is constituted through social relations and that social responsibilities and caring relations are essential for our psychological well-being.

The table below summarizes the “atomist” and “holist” ideologies in the data science community along several core dimensions.

| Dimension | Atomists | Holists |
| --- | --- | --- |
| Guiding metaphor | puzzle solver | social steward |
| Facts and values | separate | inseparable |
| Associated “isms” | (neo)liberalism, libertarianism, logical positivism, modernism | communitarianism, feminism, post-positivism, post-modernism |
| Social orientation | individualist | collectivist |
| Self-concept | autonomous | relational |
| Means of social coordination | incentives and markets | shared moral values and dialogic exchange |
| Key moral concepts | rights, duties, contracts, impartial justice | empathy, caring, connection, responsivity to vulnerable others |
| Vision of the good life | neutral and constrained | substantive and unconstrained |
| Scientific methodology | data-driven, empiricist, neutral | theory-laden, rationalist, perspectival |
| Extreme form leads to | technocracy/nihilism/alienation | totalitarianism/dogmatism/tribalism |

Between the lines

As AI-based technologies increasingly impact society, AI ethics is more relevant than ever. But a more central role for ethics in AI research means that members of long-estranged academic disciplines will be forced to engage with one another, disrupting the current intellectual division of labor and posing new barriers to communication and mutual understanding. We suggest several recipes for improved interdisciplinary dialogue among data scientists, including expanding their educational curriculum to reflect the social impact of AI and to foster various intellectual virtues.

Data scientists and AI researchers holding various ethical viewpoints must learn to empathetically and productively discuss controversial AI ethics issues without resorting to name-calling and threats of violence or cancellation.