🔬 Research Summary by Meredith Ringel Morris, Director of Human-AI Interaction Research at Google DeepMind; she is also an Affiliate Professor at the University of Washington, an ACM Fellow, and a member of the ACM SIGCHI Academy.

[Original paper by Meredith Ringel Morris, Carrie J. Cai, Jess Holbrook, Chinmay Kulkarni, and Michael Terry]

Overview: This paper explores the intersection of AI and HCI (Human-Computer Interaction) by proposing two ontologies for considering the design space of generative AI models. The first considers how HCI can impact generative models (e.g., interfaces for models), and the second considers how generative models can impact HCI (e.g., models as an HCI prototyping material).

Introduction

Card et al.’s seminal paper “The Design Space of Input Devices” established the value of design spaces and similar ontologies for analysis and invention. In this position paper, we seek to advance discussion and reflection on the marriage of HCI and emerging generative AI models by proposing two design spaces: one for HCI applied to generative models and one for generative models applied to HCI. We hope these frameworks spark further research that refines these taxonomies and spurs the development of novel interfaces for generative models, as well as novel AI-powered design and evaluation tools for HCI more broadly.

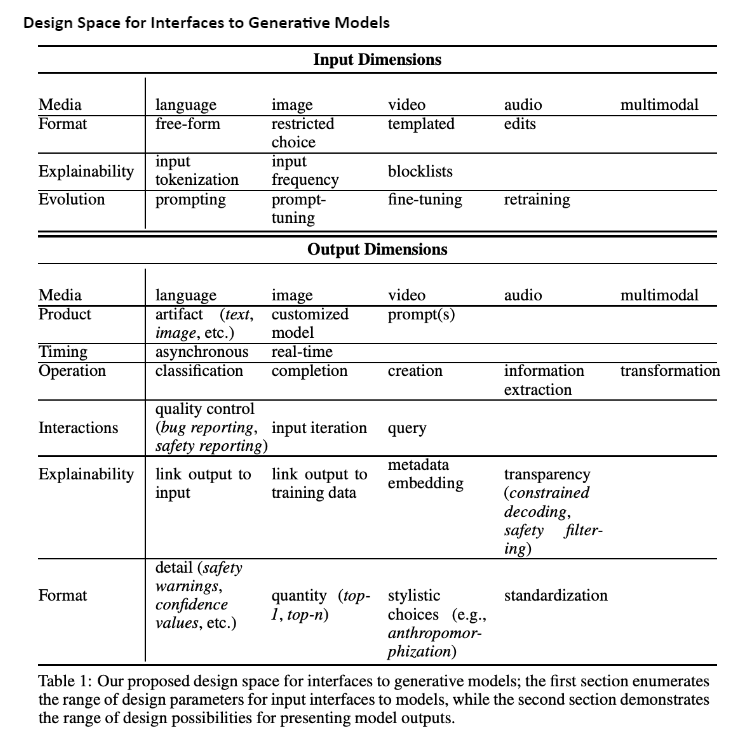

Our first design space considers the application of HCI to generative AI models. The interfaces designed for interacting with such models have the potential to influence who can use the models (e.g., ML engineers vs. general users), model safety (e.g., supporting identification or interrogation of undesired outputs), and model applications (e.g., by altering the time and effort involved in prompt engineering). Our proposed taxonomy covers design possibilities both for interfaces that provide input to models and for interfaces that present model outputs.

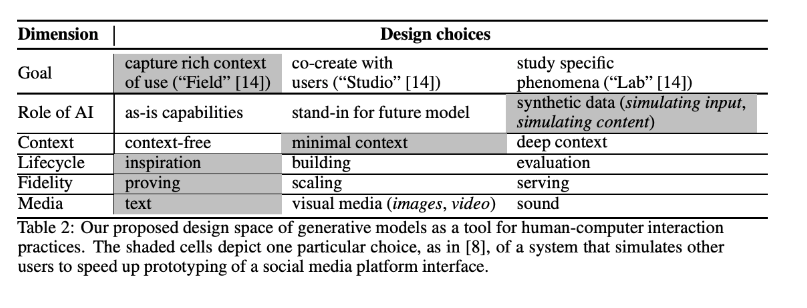

The growing variety and power of generative models will fundamentally change HCI research and practice by enabling a new generation of assets, tools, and methods. These artifacts will impact the full spectrum of HCI, including tools that support design through creative ideation, tools for rapidly prototyping novel interactive experiences, and tools that support the evaluation of interfaces and ecosystems, such as through simulation. Our second design space illustrates the many ways in which generative AI can impact the practice of HCI.

Key Insights

Our paper discusses the meaning of each design space dimension from Table 1 in detail; in this summary, we focus on examples of how to apply this design space.

Consider PromptMaker [Jiang et al., CHI ’22], an application intended to support prompt programming, as an example of how this taxonomy can describe a particular interface. Along the input dimensions, PromptMaker accepts language (text) as input (media) in a free-form format and provides explicit support for zero- and few-shot prompting. It provides neither explainability capabilities nor support for prompt evolution (an oversight our taxonomy might make visible to PromptMaker’s creators, suggesting an area for future development). Along the output dimensions, PromptMaker produces language (text) as output (media), where this text is the final artifact (product). It is real-time (timing) and supports several types of operations (classification, completion, creation, and information extraction). Safety reporting mechanisms (interactions) and warnings about the potential for undesirable content (presentation) are built into the interface.

In addition to being descriptive, our taxonomy can also be generative, identifying new opportunities for design and research. For example, current model interfaces typically produce a static output. However, interactive outputs, such as those that support input iteration, could dramatically change the pace at which people can experiment with and create content using generative models. Altering the input media could also enable novel experiences: sketch-based, photograph-based, or even audio inputs, rather than natural-language strings, could all afford new means of expression and creativity with generative image models.

Many considerations, including the use context and target end-users, will factor into how designers select among these parameters when developing interfaces for generative models. While some design choices may seem at first glance to be inherently “good” or “bad,” they are not always clear-cut. For instance, although inappropriate or undisclosed anthropomorphization of AI carries many risks, an anthropomorphic interface may nevertheless be desirable for some customer service or entertainment applications. Conversely, although explainability is generally viewed positively, consider whether a generative image model should offer metadata embedding, an output explainability feature that stores the prompt as metadata in the generated image. If the use context is an application for professional artists creating original works, embedding the prompt may be undesirable, since the artist may view it as their intellectual property. However, if the use context is an application for generating custom clip art for documents and slide decks, embedding the prompt may be highly beneficial, since it could serve as alternative text for screen readers, making documents that use this clip art accessible to blind end-users.

Design Space for Generative Models as an HCI Prototyping Material

Our paper discusses the meaning of each design space dimension from Table 2 in detail; in this summary, we focus on examples of how to apply this design space.

As with our “interfaces for model inputs & outputs” taxonomy, this second design space can stimulate research, design, and reflection by serving both descriptive and generative functions. The shading in Table 2 illustrates a descriptive use, classifying Park et al.’s “Social Simulacra” work [Park et al., UIST 2022], which used GPT-3 to populate hypothetical parallel futures of proposed social media systems, allowing social media designers to tweak system features to encourage desired interaction styles.

This ontology can also suggest directions for future investigation into how generative models could further the field of HCI. For instance, text-generating models could be used in the design stage of the HCI lifecycle to create personas that inspire practitioners to consider a wide range of design concepts. Similarly, language models could be used to co-create productivity tools with users, for instance by embedding the context of users’ work into writing aids in word processors.

Between the lines

Bridging the gap between AI and HCI is vital to advancing both of these computing subdisciplines. The two fields have much to learn from each other, and increasing the bandwidth of cross-communication and cross-pollination between them remains an open goal for the research community. Workshops that attempt to bridge this gap (such as the NeurIPS 2022 workshop where this paper was presented and the recent ICML 2023 workshop on AI & HCI) are a step in the right direction.