🔬 Research Summary by Balambiga Ayappane, Rohith Vaidyanathan, and Srinath Srinivasa.

Balambiga Ayappane and Rohith Vaidyanathan are MS by Research scholars at the Web Science Lab, International Institute of Information Technology – Bangalore (IIITB), India.

Prof. Srinath Srinivasa heads the Web Science lab and is the Dean (R&D) at the International Institute of Information Technology – Bangalore (IIITB), India.

[Original paper by Balambiga Ayappane, Rohith Vaidyanathan, Srinath Srinivasa, and Jayati Deshmukh]

Overview: Recent years have seen the emergence of Digital Public Infrastructures (DPIs), enabled by data trusts and other forms of data intermediaries that facilitate legitimate, open-ended dissemination of proprietary data. An important issue of concern for such data intermediaries is consent management and data access mechanisms. Our paper proposes a modular, extensible framework called “Multiverse” to manage the legitimate exchange of sensitive personal and non-personal data in an open-ended fashion, crossing organizational boundaries and applying policies of multiple stakeholders in the data exchange.

Introduction

Data is a new asset that can lead to overall progress when shared and aggregated from different sources. To facilitate the legitimate sharing and dissemination of data, different forms of Digital Public Infrastructures (DPIs) have been emerging in different parts of the world. An important building block of a DPI is the Data Trust, or Data Exchange, which facilitates consensual, policy-driven data sharing among different stakeholders. Data exchanges must contend with three important dimensions of data sharing: transparency, privacy, and security.

Data exchange needs to be transparent and explainable based on data exchange policies while providing assurances against potential privacy and security breaches. Data exchanges do not have an overarching organizational structure whose policies cover all the datasets they manage. Each organization sharing data through the data exchange may have its own policies. In addition, one or more regulations could apply to specific forms of data sharing.

Hence, data exchanges need an extensible framework where data-sharing policies are distributed across several participating organizations. A given piece of data, for example, COVID-19 vaccination data, may need to be legitimately shared across several organizations to enable certain services. At the same time, such sensitive data are also subject to state or federal regulations that must be complied with.

Key Insights

Data Trusts and Open-ended data exchange

Data Trusts, also known by other names such as data intermediaries, data commons, etc., are emerging paradigms to manage and exchange data in a fiduciary capacity on behalf of data owners. These data owners could be communities or individuals. Such data trusts play a central role in data-sharing ecosystems where multiple participants can share data in an open-ended manner.

Ensuring legitimate Open-ended data sharing

Data protection laws such as the General Data Protection Regulation (GDPR) and, more recently, India's Digital Personal Data Protection Act, 2023 (DPDP Act) lay down guidelines to enable the legitimate sharing of data. An important aspect of legitimate data sharing that is common across such laws is consent. Consent has been identified as a domain-agnostic issue of concern in enabling safe and legitimate data sharing. Many consent frameworks have been proposed to help data controllers and data subjects/principals manage consent. A trend across such consent frameworks is to couple the regulatory specification of consent with access control frameworks like RBAC, ABAC, etc.

Such access control frameworks need an overarching authority to establish rules to govern access. However, this may not be suitable for cases like data trusts and related constructs where multiple organizations participate with no overarching authority to decide such rules.

Multiverse framework for consent management

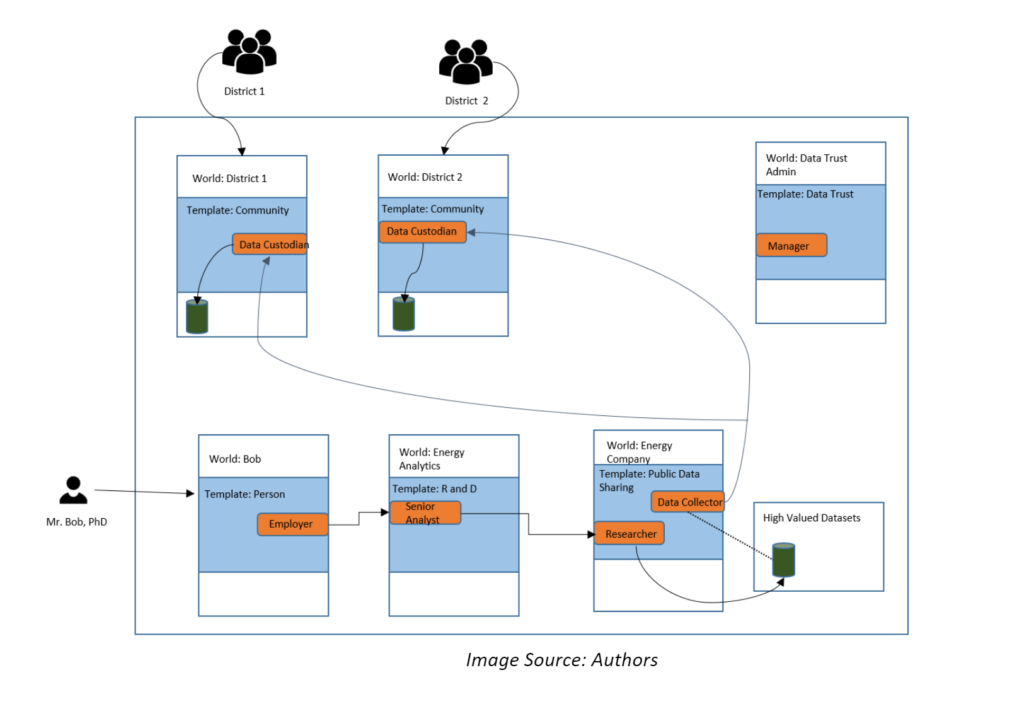

Against this backdrop, we discuss ‘Multiverse,’ an extensible framework proposed in this work, where data exchange policies are distributed across several participating organizations. Each entity representing a semantic boundary for a given policy is called a “world.” Data access is facilitated by a mechanism called “role tunnels,” which connect specific roles in one world to specific roles in another world. Being granted a role in a target world on the basis of the role one plays in a source world is called the legal capacity of the role player in the target world. Applying legal capacities transitively, starting from a given source role in a given world, creates a role tunnel. A role tunnel enables legitimate access to specific datasets from an organization B while operating from a source organization A, even though there is no direct agreement between B and A.
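As a rough illustration of how transitive legal capacities could form a role tunnel, the sketch below treats each (world, role) pair as a node and each legal capacity as a directed edge, then searches for a chain from a source role to any role in a target world. This is not the paper's implementation: the world names `A`, `B`, `C`, the role names, the `CAPACITIES` table, and the function `find_role_tunnel` are all hypothetical, and breadth-first search is simply one convenient way to follow the chain.

```python
from collections import deque

# A role reference is a (world, role) pair. All names here are hypothetical.
RoleRef = tuple[str, str]

# Each entry says: whoever holds the key role is granted the listed roles
# in other worlds (their legal capacity there). Note that A has no direct
# agreement with B; the only route goes through C.
CAPACITIES: dict[RoleRef, list[RoleRef]] = {
    ("A", "analyst"): [("C", "partner")],
    ("C", "partner"): [("B", "reader")],
}


def find_role_tunnel(source, target_world, capacities):
    """Return the chain of (world, role) hops from `source` to some role in
    `target_world`, or None if no chain of legal capacities exists."""
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        world, _role = path[-1]
        if world == target_world:
            return path
        for granted in capacities.get(path[-1], []):
            if granted not in seen:
                seen.add(granted)
                queue.append(path + [granted])
    return None


tunnel = find_role_tunnel(("A", "analyst"), "B", CAPACITIES)
print(tunnel)
```

In this toy setup, an analyst in world A reaches the reader role in world B only through the partner role in world C, while a role with no recorded legal capacity gets no tunnel at all.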

The framework can even be extended to represent individuals as separate “worlds” in themselves, where they store personal or personally identifiable data. They can then issue their own policies for consensual PI or PII data access.

Multiverse also has a construct called “templates,” where certain common policy constructs are already encoded into roles and access privileges. Worlds can inherit a template and modify it to their needs rather than building their policy framework anew.
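One way to picture template inheritance is as a copy-then-customize step: a world starts from the roles and privileges a template already encodes and then adjusts them locally. The sketch below is only an assumption about how this might look. The `Template` and `World` classes, the `from_template` helper, and the privilege names `read` and `download` are hypothetical and not taken from the paper.

```python
from copy import deepcopy
from dataclasses import dataclass, field


@dataclass
class Template:
    """A reusable policy: role name -> set of access privileges."""
    name: str
    roles: dict[str, set[str]] = field(default_factory=dict)


@dataclass
class World:
    """A policy boundary with its own (possibly inherited) roles."""
    name: str
    roles: dict[str, set[str]] = field(default_factory=dict)

    @classmethod
    def from_template(cls, name: str, template: Template) -> "World":
        # Deep-copy so local changes never leak back into the template.
        return cls(name=name, roles=deepcopy(template.roles))


# Hypothetical template and world, for illustration only.
public_sharing = Template("Public Data Sharing", {"Researcher": {"read"}})
company = World.from_template("Energy Company", public_sharing)

# The world modifies the inherited policy to suit its needs.
company.roles["Researcher"].add("download")
company.roles["Auditor"] = {"read"}

print(company.roles)
print(public_sharing.roles)
```

Copying rather than sharing the role table is a design choice made here so that several worlds can inherit the same template and diverge independently.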

Agents are users or applications that produce and consume resources. Every agent has a world where they can read and publish data. Legal capacity is the string of role and world specifications that leads an agent to the data source. It determines the legitimacy of the data access, and consent-related elements are managed through it.

Here’s an example of how the framework can be used in Data Trusts:

Here, the agent is represented by a user named Mr. Bob, a Senior Analyst with Energy Analytics. He needs to access some data stored in a world called Energy Company. Energy Company has implemented a template called Public Data Sharing and is in a relationship with Energy Analytics, which has implemented a template called R&D.

The user's own world is also in a relationship with Energy Analytics through the role of Senior Analyst. The relationship between the R&D and Public Data Sharing templates enables a Senior Analyst in R&D to appear as a Researcher in the Public Data Sharing template, which gives him some privileges over the ‘High-Valued dataset.’
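The example above can be traced in a small sketch that checks whether a claimed legal capacity, written as a chain of (world, role) hops, is backed by a grant at every step and ends in a role holding the requested privilege. Several details are assumptions made for illustration: the summary does not name the role the user holds in his own world (called `Self` here) or the exact privilege over the dataset (assumed to be `read`), and the `GRANTS` and `PRIVILEGES` tables and the function `is_legitimate` are hypothetical.

```python
# Grants: holding the key (world, role) entitles the agent to the value.
# The "Self" role in the user's own world is an assumed placeholder.
GRANTS = {
    ("User's world", "Self"): ("Energy Analytics", "Senior Analyst"),
    ("Energy Analytics", "Senior Analyst"): ("Energy Company", "Researcher"),
}

# Privileges attached to a role in a world; the "read" action is assumed.
PRIVILEGES = {
    ("Energy Company", "Researcher"): {("High-Valued dataset", "read")},
}


def is_legitimate(capacity, dataset, action):
    """Check every hop of the legal capacity against GRANTS, then check
    that the final role holds the requested privilege."""
    for held, claimed in zip(capacity, capacity[1:]):
        if GRANTS.get(held) != claimed:
            return False
    return bool(capacity) and (dataset, action) in PRIVILEGES.get(capacity[-1], set())


def describe(capacity):
    """Render the legal capacity as a readable string of role@world hops."""
    return " -> ".join(f"{role}@{world}" for world, role in capacity)


capacity = [
    ("User's world", "Self"),
    ("Energy Analytics", "Senior Analyst"),
    ("Energy Company", "Researcher"),
]
print(describe(capacity))
print(is_legitimate(capacity, "High-Valued dataset", "read"))
```

Because each hop is validated separately, a chain that skips Energy Analytics and jumps straight from the user's world to the Researcher role would be rejected in this sketch.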

Between the lines

Existing consent management solutions use traditional access control frameworks like ABAC, RBAC, etc., which usually need an overarching authority to establish the rules that govern access. Such an authority may not exist in open-ended data-sharing scenarios like data trusts. In this work, we address the problem of managing consent in data trusts, where multiple participants share data with no overarching authority to make access control decisions, while complying with relevant regulatory frameworks.