🔬 Research Summary by Nick Bryan-Kinns, Professor of Creative Computing at the Creative Computing Institute, University of the Arts London, where he researches human-centred approaches to the use of AI in the Arts.

[Original paper by Nick Bryan-Kinns, Berker Banar, Corey Ford, Courtney N. Reed, Yixiao Zhang, Simon Colton, and Jack Armitage]

Overview: Deep learning AI can now generate high-quality and realistic outputs in various art forms, from visual arts to music. However, these AI models are incredibly complex and difficult to understand from a user perspective. This paper explores how explainable AI (XAI) could be used to make a generative AI music system more understandable and usable for users, especially musicians.

Introduction

Deep learning AI models can now produce realistic and high-quality artistic outputs such as music (e.g., Magenta, Jukebox) and images (e.g., Stable Diffusion, DALL-E). However, it is very difficult to understand how the output was produced or to control features of the production process. In this paper, we survey 87 generative AI research papers and find that most do not explain what the AI model is doing, and hardly any offer real-time control of the generative process. To address this lack of transparency and control, we explore applying eXplainable AI (XAI) techniques to design a generative AI system for music. We introduce semantic dimensions to a variational autoencoder’s (VAE) latent space, which can be manipulated in real-time to generate music with various musical properties. We suggest that in doing so, we bridge the gap between the latent space and the generated musical outcomes in a meaningful way, making the model and its outputs more understandable and controllable by musicians.

Key Insights

Explainable AI and the Arts

The field of eXplainable AI (XAI) examines how machine learning models can be made more understandable to people. For example, XAI projects have focused on creating human-understandable explanations of why an AI system made a particular medical diagnosis, how the AI models in an autonomous vehicle work, and what data an AI system uses to generate insights about consumer behavior. However, current XAI research is predominantly focused on functional and task-oriented domains, such as financial modeling, so it is difficult to apply XAI techniques to artistic uses of AI directly. Moreover, in the Arts, there is typically no “right answer” or correct set of outputs that we are trying to train the AI to arrive at. In the Arts, we are often interested in surprising, delightful, or confusing outcomes, as these can spark creative engagement.

Our research explores how XAI techniques can be applied in and through the Arts to improve the use and understanding of AI in creative practice. The Arts, especially music, also provide a complex domain to test and research new AI models and approaches to explainability. Compared to domains such as healthcare and the automotive industry, the Arts require similar levels of robustness and reliability from their AI models but have significantly fewer ethical and life-critical implications, making the Arts a great test-bed for AI innovation.

Explainable AI and Generative Music

In our paper, we surveyed 87 recent AI music papers regarding the role of the AI in co-creation, what interaction is possible with the AI, and how much information about the AI’s state is made available to users. Our perspective is that the explainability of creative AI is a combination of the AI’s role, the interaction it offers, and the grounding that can be established with the AI. We found a small number of excellent examples of collaborative AI, interactive AI music systems, and AI models that make their internal state available to users. However, the vast majority of AI music systems offer few, if any, of these explainability features.

Explaining Latent Spaces in AI Music Generation

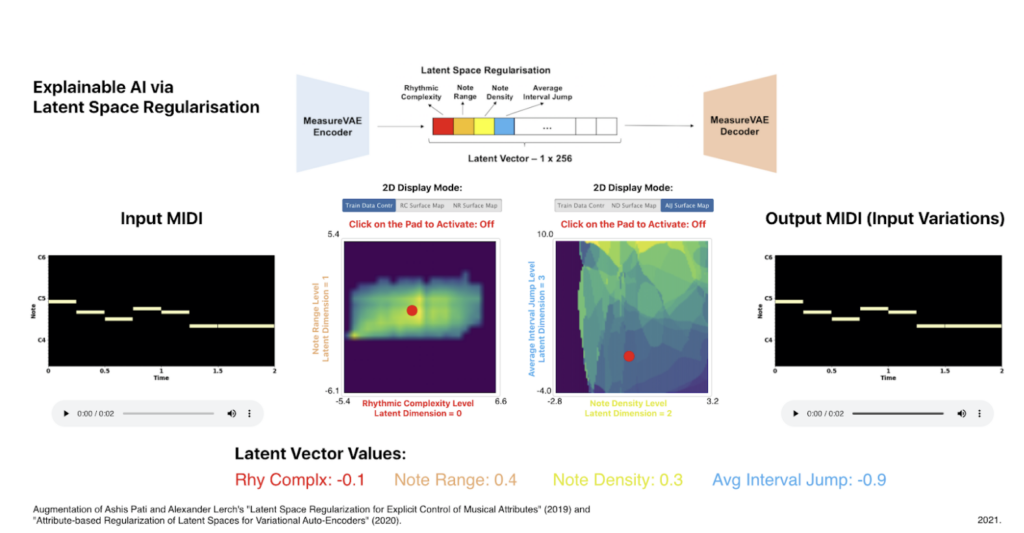

To explore how to make generative AI models more explainable, we build on the MeasureVAE generative model, which produces short music phrases. In our version, we force 4 of the 256 latent dimensions of the AI model to map to musical features. We then visualize these features in 2D maps in a web app that users can navigate in real-time to generate music.
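To give a feel for what "forcing" a latent dimension to follow a musical feature can mean, here is a minimal sketch of one known approach, attribute-based regularization, written in NumPy. This summary does not state which technique the system uses, so treat the function name, the `delta` parameter, and the "note density" feature as illustrative assumptions rather than the paper's implementation. The loss is small when examples with a higher feature value also have a higher value on the chosen latent dimension.

```python
import numpy as np

def attribute_regularization_loss(z_dim, attribute, delta=1.0):
    """Penalize a latent dimension whose ordering disagrees with an attribute.

    z_dim:     shape (batch,), values of one latent dimension
    attribute: shape (batch,), one musical feature per example (hypothetical)
    delta:     assumed sharpness parameter for the tanh squashing
    """
    z_dim = np.asarray(z_dim, dtype=float)
    attribute = np.asarray(attribute, dtype=float)
    # Pairwise differences between all examples in the batch.
    dz = z_dim[:, None] - z_dim[None, :]
    da = attribute[:, None] - attribute[None, :]
    # tanh squashes latent differences into (-1, 1); sign gives the target order.
    return float(np.mean(np.abs(np.tanh(delta * dz) - np.sign(da))))

# Hypothetical feature values for four short phrases.
note_density = np.array([0.1, 0.4, 0.7, 0.9])

# Latent values that rise with the feature give a loss near zero.
loss_aligned = attribute_regularization_loss(
    np.array([-2.0, -0.5, 0.5, 2.0]), note_density, delta=10.0)

# Latent values that fall as the feature rises are penalized.
loss_flipped = attribute_regularization_loss(
    np.array([2.0, 0.5, -0.5, -2.0]), note_density, delta=10.0)
```

In a training setup of this kind, a term like this would be added to the usual VAE loss once per constrained dimension, leaving the remaining dimensions unconstrained.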

In this way, we increase the explainability of the generative AI model in two ways: i) key parts of the AI model are exposed to the user in the interface and meaningfully labeled (in this case, with relevant musical features), and ii) the real-time interaction and feedback in the user interface allows people to explore the effects of these features on the generative music and thereby implicitly learn how the model works.
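The interaction described above can be pictured as translating a position on a 2D map into a latent vector that is then decoded into music. The sketch below shows only that translation step and makes several assumptions: the dimension indices, the value range, and the function name are hypothetical, and the decoder call is omitted because its interface is not described here.

```python
import numpy as np

LATENT_SIZE = 256     # from the summary: the model has 256 latent dimensions
X_DIM, Y_DIM = 0, 1   # assumed indices of two feature-mapped dimensions

def latent_from_map_position(base_z, x, y, value_range=(-4.0, 4.0)):
    """Return a copy of base_z with two dimensions set from a 2D map position.

    x and y are normalized map coordinates in [0, 1]; value_range is an
    assumed range of latent values covered by the map.
    """
    if not (0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):
        raise ValueError("x and y must lie in [0, 1]")
    lo, hi = value_range
    z = np.array(base_z, dtype=float, copy=True)
    # Linearly rescale each map coordinate into the latent value range.
    z[X_DIM] = lo + x * (hi - lo)
    z[Y_DIM] = lo + y * (hi - lo)
    return z

base = np.zeros(LATENT_SIZE)
z = latent_from_map_position(base, x=0.75, y=0.25)
```

Because only the labeled dimensions change as the user moves, any difference they hear in the generated phrase can be attributed to those features, which is what lets exploration double as explanation.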

In our implementation, the AI acts somewhat like a colleague – the response to the user is given in real-time, as would be done in a human-to-human musical interaction. This drives a feedback loop between a user and the AI, whereby a person’s reaction to the AI’s response informs the subsequent interaction. Thinking musically, this resembles a duet in creative improvisation, where the players make real-time decisions based on their colleague’s performance.

Between the lines

Generative AI is a source of concern and excitement in the Arts. On one hand, AI offers new tools, opportunities, and sources of inspiration for creative practice and exploration. On the other hand, there are ethical concerns about the lack of attribution and IP recognition in AI training sets, concerns about the deskilling of creative work, and concerns about bias in generative AI. We can proactively work to ensure that the artist remains key to the creative process through eXplainable AI and the design of user interfaces that embrace real-time interaction with the AI model. Indeed, working with artists to design and implement eXplainable AI systems will help mitigate concerns about the impact of AI on creativity.