🔬 Research Summary by Dr. Philippe Page, who trained as a theoretical physicist and developed a career in international banking before focusing his energy on the next generation of the internet as a Trustee of the Human Colossus Foundation.

[Original paper by Philippe Page, P.Knowles, and R.Mitwicki]

Overview: Effective AI relies on accurate data and robust societal governance. The emergence of powerful Large Language Models compels researchers, organizations, governments, and supranational entities to grapple with the complexities of human-machine interactions. Our research into distributed governance models provides a framework for governance design and, by harnessing recent software advancements in decentralized authentication and semantics, a structured approach to foster accurate and legally admissible data generation within this evolving landscape.

Introduction

“Did I receive and can I get the right information?”, “Is the response to my question meaningful for me in my context?”

These are the questions we all have to answer before making decisions. The responsibility for these decisions applies to us as individuals, as professionals acting in the name of our organization, or as public servants in governmental institutions. Ultimately, our research and solutions aim to provide governance and tools for the hyperconnected digital age.

Humans develop an instinct to assess the veracity of the information they use to make decisions. To protect their values, human societies developed regulatory and ethical rules over centuries to guide their members' decisions and defend against decisions made by others. As a result, the concept of legal entities (i.e., “personne morale” in French) was born. Today, we have access to a complex mesh of credentials, contracts, charters, etc., to help us assess the veracity of information received before we decide to act.

Post-digital transformation, information moves and is processed at the speed of electricity. In addition, AI independently creates value through the algorithmic generation of information. Informational agents produce tsunamis of information, and the resulting risks bring a new dimension to the governance debate. Decision-making has therefore entered a new age in which decision-makers must assess the veracity of information of both human and algorithmic origin.

Our research, part of the EU Next Generation Internet programs, frames the question as a principal-agent problem. The novelty of our approach rests on recent developments in software engineering. Decentralized authentication and semantics enable the creation of the digital self, dual to the self in the physical space. By splitting the intrinsic and extrinsic data properties, this approach provides a functional definition of governance to bridge human and data governance.

Distributed Governance Models

Aligning Digital Governance

Distributed governance, a familiar term in policymaking, involves dispersing authority and power across multiple entities or individuals. This model empowers individuals to navigate their lives by clearly delineating which authority is responsible for various aspects. For instance, the federal government issues passports, states provide driver’s licenses, schools award diplomas, and organizations offer employment contracts. This approach fosters inclusive and transparent decision-making, allowing diverse participants to collectively manage resources, make choices, and influence a system or organization’s trajectory.

Consider the passport example in more detail. Passports are issued by a government and recognized by other sovereign nations, each applying its own governance to determine the conditions under which passport holders from one country can enter another (e.g., visa requirements). Similarly, governments willingly delegate certain aspects of passport-related governance to international bodies like the International Civil Aviation Organization (ICAO), which sets standards for machine-readable passports to facilitate air travel. The global system works because authority is dispersed to legitimate stakeholders.

However, the digital realm has introduced a different model: “platform governance,” a natural evolution of the client-server models in current service-oriented architectures. Unfortunately, this model does not easily align with distributed governance. Data governance in platform models is delegated to the platform’s hosting services, making it challenging to regulate data usage.

While legislative efforts worldwide, including the Council of Europe Convention, aim to protect fundamental rights, they must catch up in a hyperconnected world where data’s value lies in its flow, not just in the data itself.

Two healthcare examples are pertinent to ethical AI and highlight the need for change:

- Dynamic updating of datasets with accurate data. While AI offers benefits in healthcare, its adoption faces hurdles. To meet the contextual requirements of clinical treatment, capturing accurate data and dynamically updating datasets are crucial. AI solutions must therefore handle unstructured real-world data and frequent real-time updates. A distributed governance model brings the data producer, the data user, and their governing authority closer together.

- Lawful data portability. The healthcare ecosystem, complex with multiple stakeholders, revolves around patient-centricity. Digital healthcare requires sensitive data to flow from one stakeholder to another, which is problematic in the current platform-based paradigms. Governing bodies have therefore put forward initiatives like the European Health Data Space to emphasize inclusive access to healthcare and respect for patients’ rights. A distributed governance model can facilitate data access across platforms, ensuring equitable healthcare access while safeguarding patient data.

Our approach provides a way to functionally design distributed governance models in a manner that can be applied in the digital space.

Introducing Autonomous Principals

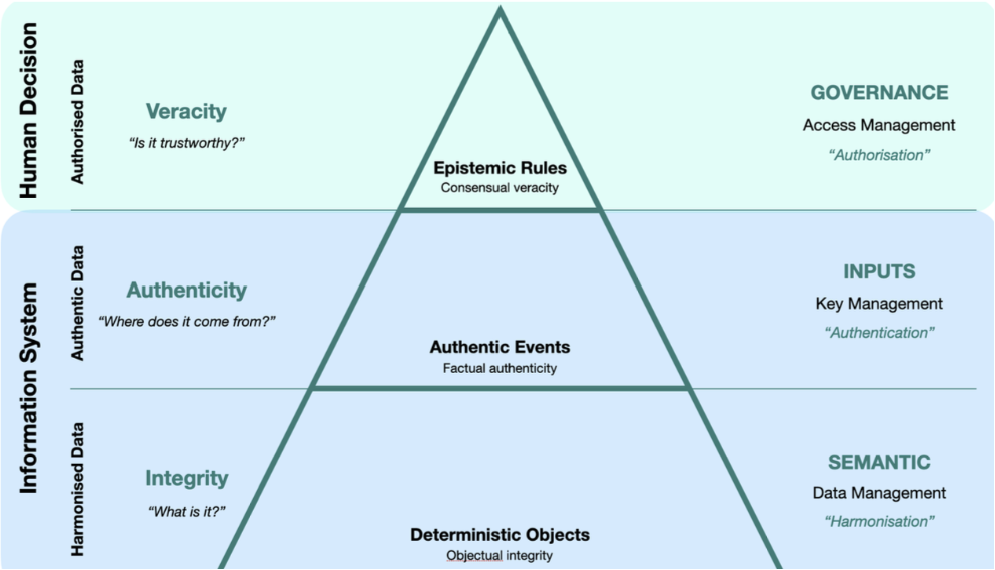

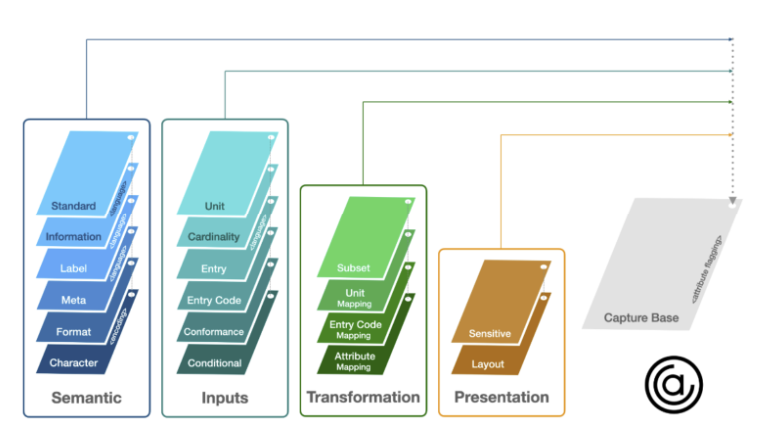

Our exploration into implementing distributed governance began with two recent breakthroughs in software engineering, paving the way for a data-centric approach: decentralized semantics and decentralized authentication. Together, these innovations empower us to introduce integrity into data objects and ensure the factual authenticity of events throughout a data object’s lifecycle.

“Decentralized semantics” encompasses distributing and organizing data semantics in a decentralized fashion. It involves deploying semantic models, ontologies, and vocabularies to facilitate interoperability and comprehension of data across distributed systems and networks. By decentralizing semantics, we aim to transcend the limitations of traditional centralized approaches, fostering more adaptable, scalable, and collaborative data environments. This decentralization is pivotal in enabling semantic interoperability, ensuring that data from various sources can be consistently and meaningfully exchanged, integrated, and understood, even within decentralized and heterogeneous environments.
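To make the idea of semantic interoperability more concrete, the following is a minimal sketch rather than the implementation described in the paper. It assumes a shared schema identified by a digest of its own content, so that no central registry needs to assign the identifier, and two data sources that publish mappings from their local field names to the shared vocabulary. The schema, the clinic mappings, and the function names are all hypothetical and chosen only for illustration.

```python
import hashlib
import json

# Hypothetical shared schema: attribute names and types agreed on by a community.
BLOOD_PRESSURE_SCHEMA = {
    "name": "blood_pressure_reading",
    "attributes": {"systolic_mmHg": "number", "diastolic_mmHg": "number"},
}

# Hypothetical mappings: each source keeps its local field names and
# publishes how they translate into the shared vocabulary.
CLINIC_A_MAPPING = {"sys": "systolic_mmHg", "dia": "diastolic_mmHg"}
CLINIC_B_MAPPING = {"SBP": "systolic_mmHg", "DBP": "diastolic_mmHg"}


def schema_digest(schema: dict) -> str:
    """Derive an identifier from the schema content itself.

    The same schema always yields the same digest, so any party can
    verify which semantic model a record refers to.
    """
    canonical = json.dumps(schema, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def harmonize(record: dict, mapping: dict, schema: dict) -> dict:
    """Translate a local record into the shared vocabulary.

    Raises KeyError for a local field without a mapping and ValueError
    when a schema attribute is missing from the record.
    """
    data = {mapping[key]: value for key, value in record.items()}
    missing = set(schema["attributes"]) - set(data)
    if missing:
        raise ValueError(f"record lacks attributes: {sorted(missing)}")
    return {"schema": schema_digest(schema), "data": data}


reading_a = harmonize({"sys": 120, "dia": 80}, CLINIC_A_MAPPING, BLOOD_PRESSURE_SCHEMA)
reading_b = harmonize({"SBP": 120, "DBP": 80}, CLINIC_B_MAPPING, BLOOD_PRESSURE_SCHEMA)
```

In this sketch, the two clinics never agree on field names. They only agree on the schema, and the digest lets a recipient check that both records refer to the same semantic model.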

“Decentralized Authentication” represents an advanced mechanism allowing network participants to authenticate securely without relying on a third-party intermediary. This authentication relies on asymmetric cryptography, necessitating a distributed key management system (DKMS) that binds an identifier to a pair of keys to ensure identifier stability, even if the keys change. Moreover, DKMS offers mechanisms to discover keys associated with an identifier, and it can function as a centralized, federated, or fully decentralized system to guarantee interoperability.
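The binding between a stable identifier and changing keys can be illustrated with a toy key event log. This is a sketch under stated assumptions, not the key management system used in our work: the "keys" are random bytes standing in for real public keys, no signatures are produced or verified, and the `KeyRegistry` class and its methods are hypothetical. The sketch only shows how an identifier can stay fixed while keys rotate, and how the current key can be discovered from the identifier.

```python
import hashlib
import secrets
from dataclasses import dataclass, field


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass
class KeyEvent:
    public_key: bytes      # stand-in for a real public key (assumption)
    next_key_digest: str   # commitment to the only key allowed to replace it


@dataclass
class KeyRegistry:
    """Hypothetical registry mapping a stable identifier to its key events."""
    logs: dict = field(default_factory=dict)

    def incept(self, public_key: bytes, next_public_key: bytes) -> str:
        """Create an identifier derived from the first key event."""
        event = KeyEvent(public_key, digest(next_public_key))
        identifier = "id:" + digest(public_key + event.next_key_digest.encode())[:16]
        self.logs[identifier] = [event]
        return identifier

    def rotate(self, identifier: str, new_public_key: bytes, next_public_key: bytes) -> None:
        """Append a rotation event; the identifier itself never changes."""
        latest = self.logs[identifier][-1]
        if digest(new_public_key) != latest.next_key_digest:
            raise ValueError("new key does not match the prior commitment")
        self.logs[identifier].append(KeyEvent(new_public_key, digest(next_public_key)))

    def current_key(self, identifier: str) -> bytes:
        """Key discovery: look up the key currently bound to the identifier."""
        return self.logs[identifier][-1].public_key


key_0, key_1, key_2 = (secrets.token_bytes(32) for _ in range(3))
registry = KeyRegistry()
identifier = registry.incept(key_0, key_1)
registry.rotate(identifier, key_1, key_2)
```

After the rotation, `registry.current_key(identifier)` returns `key_1` while `identifier` is unchanged. A production system would replace the byte strings with real key pairs and require each event to be signed.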

These advancements empower us to define data’s intrinsic and extrinsic properties precisely. Drawing an analogy from physics, a particle’s mass is intrinsic, while its weight is extrinsic and depends on the presence of an external gravitational field.

In digital identity, a hot topic worldwide, identifiers represent intrinsic properties, while the credentials linked to these identifiers represent extrinsic properties. Stated differently, “identity” lives in the human space while “identifiers” reside in the technological space.

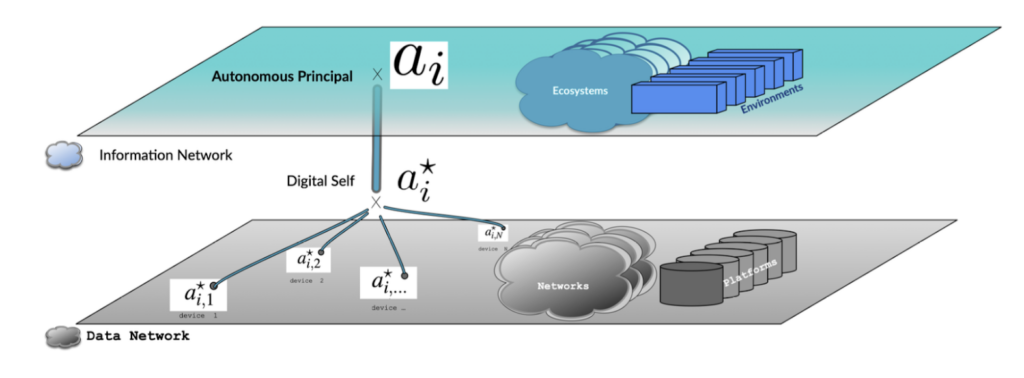

This framework enables us to create digital objects termed “Digital Self,” representing the intrinsic properties of a Self that can stand for a human, organization, or any entity endowed with choice, i.e., free will. The capacity for choice implies liability for decisions within a specific governance. In the distributed governance model, we introduce the term “autonomous principals” to denote that governance can be applied offline, online, or both.

Notably, autonomous principals cannot be algorithms (such as robots) because, as of today, algorithmic decision-making does not carry the same governance status as decisions based on free will.

The Core Element: Introducing Ecosystems

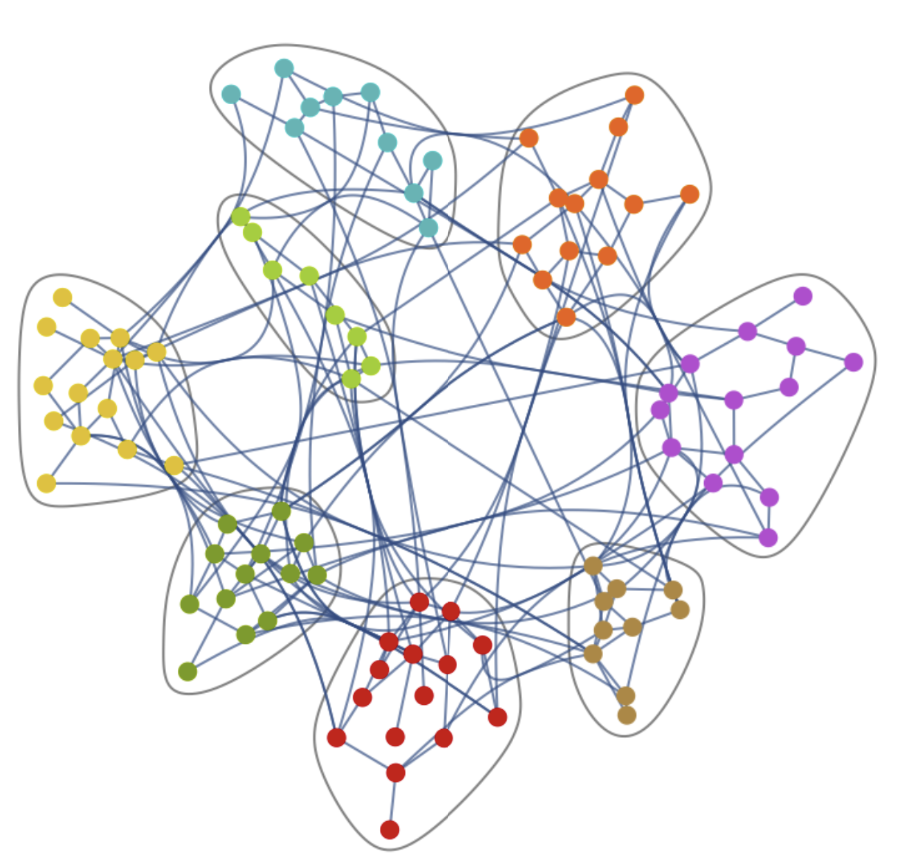

Section 2 of our publication introduces a functional definition of ecosystems as “units of governance.” An ecosystem comprises a population of autonomous principals, a legitimate authority, and a legitimate administration enforcing governance for the legitimate authority. This abstract concept of ecosystems applies to virtually any environment where a centralized authority holds governance.

The model assumes that an autonomous principal may participate in multiple ecosystems, using its capacity for choice to join or leave ecosystems under conditions specified by the ecosystem’s governance. The model also outlines how autonomous principals interact with ecosystems and other autonomous principals, drawing on the legal concept of the privacy sphere. This framework lays the groundwork for implicit consent, upon which explicit consent can be constructed.
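The functional definition above can be read as a small data structure. The sketch below is an illustration under our own simplifying assumptions, not code from the publication: the `Principal` and `Ecosystem` classes, the `has_capacity_for_choice` flag, and the `admission_rule` callback are hypothetical names. It shows an ecosystem as a population with an authority and an administration, a principal joining several ecosystems, and an algorithmic agent being refused the status of autonomous principal.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Principal:
    identifier: str
    has_capacity_for_choice: bool  # False for algorithmic agents (assumption)


@dataclass
class Ecosystem:
    """A unit of governance: population, authority, and administration."""
    name: str
    authority: str
    administration: str
    admission_rule: Callable[[Principal], bool]  # condition set by the governance
    population: set = field(default_factory=set)

    def join(self, principal: Principal) -> bool:
        """Admit a principal if it can choose and meets the governance conditions."""
        if not principal.has_capacity_for_choice:
            return False  # algorithms are agents, not autonomous principals
        if not self.admission_rule(principal):
            return False
        self.population.add(principal.identifier)
        return True

    def leave(self, principal: Principal) -> None:
        self.population.discard(principal.identifier)


alice = Principal("alice", has_capacity_for_choice=True)
triage_bot = Principal("triage-bot", has_capacity_for_choice=False)

hospital = Ecosystem("hospital", "board", "records office", lambda p: True)
university = Ecosystem("university", "senate", "registrar", lambda p: p.identifier != "mallory")

joined_hospital = hospital.join(alice)
joined_university = university.join(alice)
bot_joined = hospital.join(triage_bot)
```

Here `alice` belongs to two ecosystems at once, each with its own authority, while `triage_bot` is rejected by construction.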

Almost any human legal construct aligns with this ecosystem definition, forming a complex web of interconnected ecosystems within our societies. The primary achievement of our work lies in the fact that, thanks to advancements in decentralized authentication and semantics, a data-centric infrastructure can be implemented, and the exact ecosystem definition can be extended to the digital realm. To illustrate this point, Section 3 introduces the “Dynamic Data Economy,” a framework built upon the distributed governance model.

Distributed Governance: Bridging the Digital Divide

The distributed governance model offers a more suitable approach than platform governance for aligning digital governance with pre-digital transformation governance. In AI applications, two main avenues for further exploration emerge. First, the model should simplify lawful access to data when the ecosystem approves the purpose. Second, it emphasizes the deployment of AI and its impact on ecosystems rather than delving into the intricacies of AI technology itself. By treating AI as the algorithmic agent serving a population of autonomous principals, the model establishes a clear distinction between AI development and AI deployment—making the latter easier to regulate.
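One way to picture regulation at the point of deployment is a gate that an algorithmic agent must pass before it touches data. The following is a minimal sketch under our own assumptions, not a mechanism specified in the paper: the `DeploymentGate` class, its fields, and the example purposes are hypothetical. Access is granted only when the agent acts on behalf of a member of the ecosystem's population and for a purpose the ecosystem has approved, and every request is recorded for later audit.

```python
from dataclasses import dataclass, field


@dataclass
class DeploymentGate:
    """Hypothetical checkpoint enforced by an ecosystem's administration."""
    population: set
    approved_purposes: set
    audit_log: list = field(default_factory=list)

    def authorize(self, agent_id: str, on_behalf_of: str, purpose: str) -> bool:
        """Grant access only for an approved purpose and a known principal."""
        granted = on_behalf_of in self.population and purpose in self.approved_purposes
        # Every request is logged, whether granted or not.
        self.audit_log.append((agent_id, on_behalf_of, purpose, granted))
        return granted


gate = DeploymentGate(
    population={"alice", "bob"},
    approved_purposes={"clinical-decision-support"},
)

allowed = gate.authorize("model-v1", "alice", "clinical-decision-support")
wrong_purpose = gate.authorize("model-v1", "alice", "marketing")
unknown_principal = gate.authorize("model-v1", "carol", "clinical-decision-support")
```

The gate never inspects how the model was built. It only checks who the agent serves and why, which is the sense in which deployment is easier to regulate than development.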

Between the lines

“What is illegal offline must be illegal online” is a quote echoing across various platforms, central to the EU Digital Services Act proposal announced on April 23, 2023, by Ursula von der Leyen, the European Commission President. Yet, the challenges of implementing this principle are complex.

Through our research, we aim to illustrate that comprehending the core technological facets of digital transformation is necessary for policymakers and technologists. Crafting data and algorithm governance that aligns with global and local communities’ ethical and security requirements is a multi-stakeholder endeavor.

The 2023 edition of AI for Good, the annual forum of the International Telecommunication Union (ITU), underscored the pivotal role of AI at a time when progress on the United Nations Sustainable Development Goals (SDGs) must catch up to meet the 2030 targets. AI holds the potential either to propel the SDGs towards success by providing the necessary tools for coherent and sustainable management of our intricate societies or to plunge us into chaotic uncertainties, serving only a select few. We stand at a crossroads, and AI governance assumes paramount importance. In such a scenario, any step in the right direction can yield substantial benefits.

We hope that our work in governance fosters understanding among technologists and practitioners, facilitating informed decision-making for a better, inclusive future supported by AI.