🔬 Research Summary by Michelle S. Lam, a Computer Science Ph.D. student at Stanford University in the Human-Computer Interaction Group, where she builds systems that empower everyday users to actively reshape the design and evaluation of AI systems.

[Original paper by Michelle S. Lam, Ayush Pandit, Colin H. Kalicki, Rachit Gupta, Poonam Sahoo, and Danaë Metaxa]

Overview: What’s on the horizon in Algorithm Auditing? In addition to the algorithms, we must investigate how users change in response. We introduced Sociotechnical Audits & the Intervenr system to address this challenge! With a case study in targeted advertising, we find that ad targeting is effective but likely via repeated exposure rather than inherent user benefit.

Introduction

Algorithm auditing is a powerful tool to understand black-box algorithms by probing technical systems with varied inputs and measuring outputs to draw inferences about their inner workings. An algorithm audit of an ad targeting algorithm could surface skewed delivery to users of different races or genders. However, it could not capture how users interpret and internalize the ads they receive en masse, how these ads shape their beliefs and behaviors, and how the targeting algorithm, in turn, would change in response to these shifts in user behavior. We need auditing methods that allow us to consider and measure systems at the sociotechnical level.

- Noting a gap in the lens of algorithm audits, we introduce the sociotechnical audit (STA) as a method to systematically audit the algorithmic components of a system and the human components—how users react and modify their behavior in response to algorithmic changes.

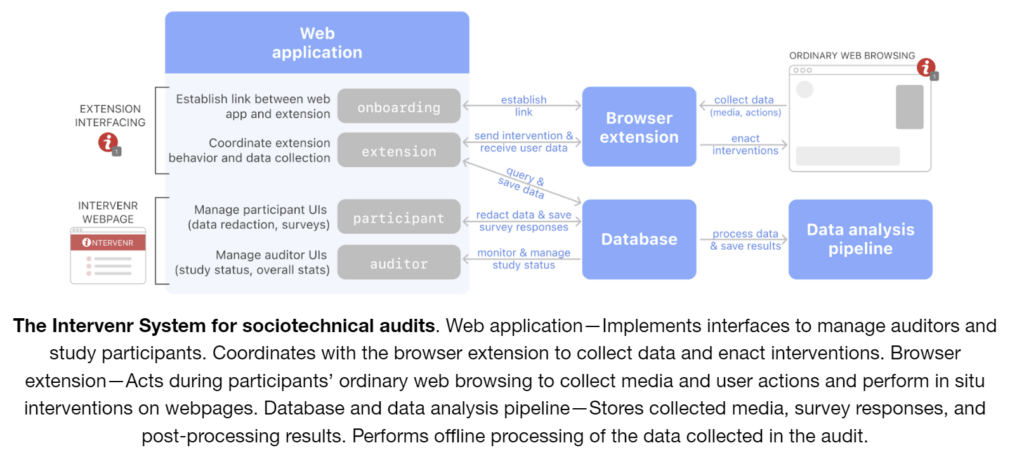

- We instantiate the sociotechnical auditing method in a system called Intervenr for conducting sociotechnical audits by coordinating observation and in situ interventions on participants’ web browsers.

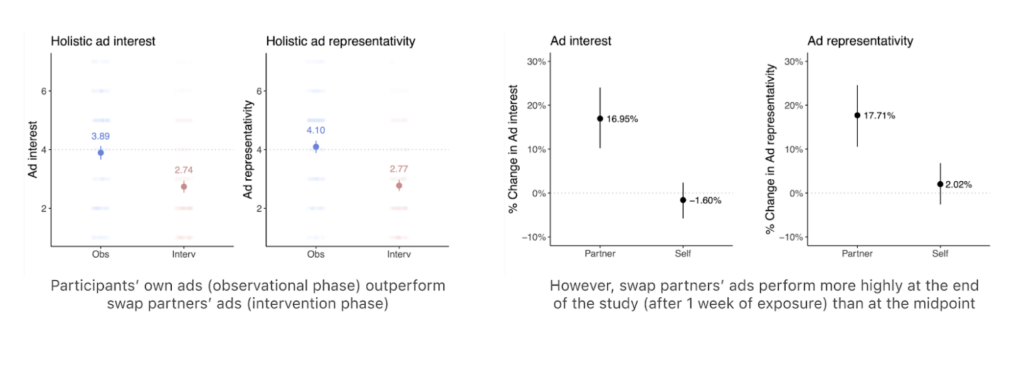

- We demonstrate a two-week sociotechnical audit of targeted advertising (N=244) investigating the core assumption that targeted advertising performs better on users. We find that targeted ads indeed perform better with users but also that users begin to acclimate to different ads in only a week, casting doubt on the primacy of personalized ad targeting given the impact of repeated exposure.

Key Insights

Sociotechnical Auditing

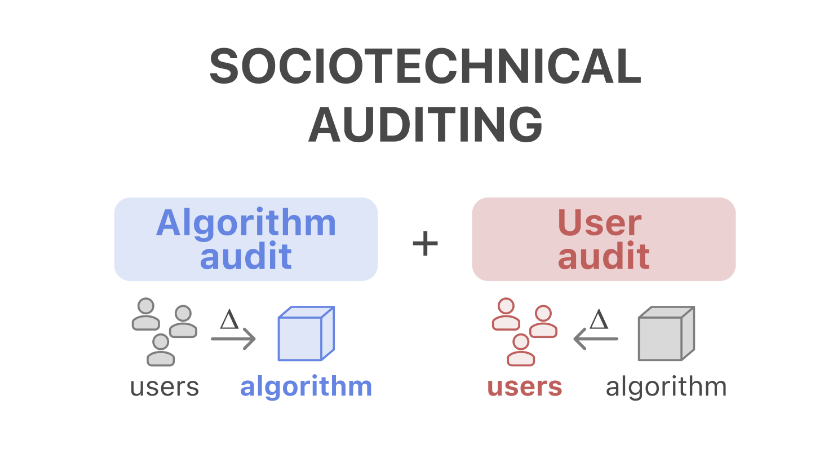

Our work addresses this gap by proposing the concept of a sociotechnical audit (or STA). Formally, we define an STA as a two-part audit of a sociotechnical system consisting of both an algorithm audit and a user audit. We define an algorithm audit as an investigation that changes inputs to an algorithmic system (e.g., testing for a range of users or behaviors) and observes system outputs to infer properties of the system. Meanwhile, we define a user audit as an investigation that changes inputs to the user (e.g., different system outputs) and observes the effects to draw conclusions about users.

The CSCW community has long championed a sociotechnical frame, but instantiating the kind of sociotechnical audit we describe is challenging. Just as algorithm audits must understand technical components by probing them with varied inputs and observing outputs, sociotechnical audits must understand human components by exposing users to varied algorithmic behavior and observing the impact on user attitudes and behaviors.

Intervenr: A System for Sociotechnical Auditing

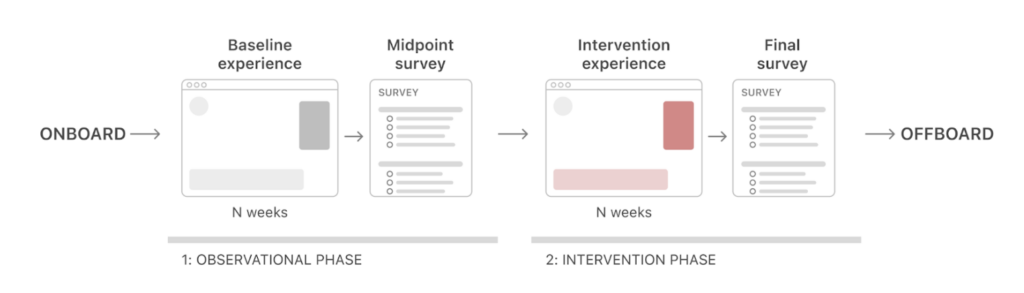

To address these challenges, we developed a system called Intervenr that allows researchers to conduct sociotechnical audits in the web browsers of consenting, compensated participants. Intervenr comprises a browser extension and a web application, and it is designed to perform sociotechnical audits in two phases.

In the initial observational phase, Intervenr collects baseline observational data from a range of users to audit the technical component of the sociotechnical system. Then, in the intervention phase, Intervenr enacts in situ interventions on participants’ everyday web browsing experience, emulating algorithmic modifications to audit the human component.

Case Study: A Sociotechnical Audit of Targeted Advertising

To demonstrate the new insights afforded by sociotechnical audits, we deploy Intervenr in a case study of online advertising to answer this central question: Does ad targeting indeed work better for users? Given the opacity of ad platforms and ad targeting’s reliance on invasive data collection and inference practices, questions remain regarding how targeted ad content impacts users over time and whether its costs are justified—questions that require a sociotechnical approach to answer.

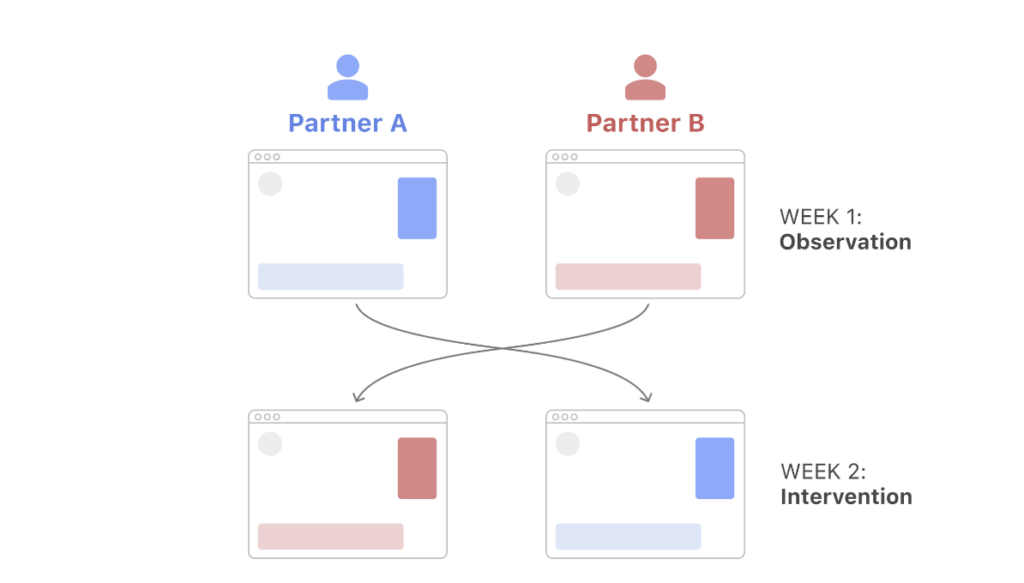

Study design. We pair a one-week observational study of all the ads users encounter in their web browser (an algorithm audit) with a one-week ablation-style intervention study that allows us to measure user responses to ads when we “break” targeting (a user audit). In the first week of our case study, we passively observed all ads delivered to participants. This traditional audit portion of our study allows us to measure not only canonical metrics like views and clicks but also important dimensions at the locus of the user, like users’ interest and feeling of representation as they relate to ad targeting. In the second week, we randomly paired participants, swapping each participant’s ads with ads originally targeted to their partner. In addition to observing user behavior, we conduct participant surveys after each study phase that cover a subset of the ads collected; together, these produce both user-oriented metrics (ad interest and feeling of representation in ads) and advertiser-oriented metrics (ad views, clicks, and recognition).

Findings. Over the two-week study, we collected over half a million advertising images targeted to our study participants. Overall, we find that participants’ own targeted ads outperform their swap partners’ ads on all measures throughout the study, supporting the premise of targeted advertising. However, we also observe that swap partners’ ads perform better with users at the close of the study (after only a week of exposure) than at the midpoint (before participants were exposed to their partners’ ads). This is evidence that participants acclimate to their swap partners’ ads, suggesting that much of the efficacy of ad targeting may be driven by repeated exposure rather than the intrinsic superiority of targeting.
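To make the week-two intervention concrete, here is a minimal Python sketch of randomly pairing participants and swapping their ads. It is an illustration only, not Intervenr's actual implementation: the function names `pair_participants` and `swap_ads`, the fixed seed, the ad identifiers, and the requirement of an even number of participants are all assumptions made for this example.

```python
import random
from typing import Dict, Hashable, List, Sequence, Tuple

Pair = Tuple[Hashable, Hashable]


def pair_participants(ids: Sequence[Hashable], seed: int = 0) -> List[Pair]:
    """Randomly pair participants (hypothetical helper).

    Assumes an even number of participants; how an odd count would be
    handled in a real study is not specified here.
    """
    if len(ids) % 2 != 0:
        raise ValueError("need an even number of participants to pair everyone")
    shuffled = list(ids)
    # A seeded generator keeps the illustrative pairing reproducible.
    random.Random(seed).shuffle(shuffled)
    return [(shuffled[i], shuffled[i + 1]) for i in range(0, len(shuffled), 2)]


def swap_ads(
    pairs: Sequence[Pair],
    observed_ads: Dict[Hashable, List[str]],
) -> Dict[Hashable, List[str]]:
    """Give each participant the ads originally targeted to their partner."""
    swapped: Dict[Hashable, List[str]] = {}
    for a, b in pairs:
        swapped[a] = list(observed_ads[b])
        swapped[b] = list(observed_ads[a])
    return swapped


# Toy week-one observations (made-up ad identifiers, not study data).
observed = {
    "p1": ["ad_a"],
    "p2": ["ad_b"],
    "p3": ["ad_c"],
    "p4": ["ad_d"],
}
pairs = pair_participants(list(observed))
week_two = swap_ads(pairs, observed)
```

In this sketch each participant appears in exactly one pair, so the swap is symmetric: whatever one partner would have seen is shown to the other, which is what lets the user audit compare responses to one's own ads against responses to a partner's ads.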

Between the lines

While an algorithm audit could reveal whether today’s existing targeting methods provide user benefit, a sociotechnical audit allows us to discover how that user benefit changes in response to alternative algorithmic methods. In particular, this approach reveals that user sentiment toward ads may be more malleable than expected and casts doubt on the necessity of hyper-personalized and privacy-invasive targeting methods.

By conducting audits that conceive of algorithmic systems as sociotechnical and investigating their technical and human components, we can better understand these systems in practice. Sociotechnical audits can aid us in proposing and validating alternative algorithm designs with an awareness of their impact on users and society.