🔬 Research Summary by Arun Teja Polcumpally, a Technology Policy Analyst at Wadhwani Institute of Technology Policy (WITP), New Delhi, India.

[Original paper by Eric Schmidt]

Overview:

This research article summary delves into the profound insights shared by Eric Schmidt, former CEO and chairman of Google, on the intricate intersection of artificial intelligence (AI), geopolitics, and national security. Schmidt’s authoritative perspective, rooted in his leadership role at Alphabet, Google’s parent company, offers a unique exploration of the challenges and opportunities presented by the rise of AI-driven geopolitics. The analysis navigates through Schmidt’s observations on the consolidation of network platforms, the influence of AI on social norms and institutions, and the critical question of information access. Drawing parallels with Schmidt’s book “The Age of AI,” the article examines how this new geopolitical landscape addresses key military questions, emphasizing the evolving role of AI technology in shaping global security strategies.

Introduction

In this research article summary, we delve into the insights provided by Eric Schmidt, former CEO and chairman of Google, regarding the intersection of artificial intelligence (AI), geopolitics, and national security. Schmidt’s unique perspective, grounded in his leadership role at one of the world’s foremost tech giants, Alphabet, offers authoritative insights into the challenges and opportunities posed by AI-led geopolitics. The article does not follow a scientific methodology that would qualify it as an academic exercise, yet it is published in an academic journal. This is because the author represents the voice of an American internet company that is a global giant. The narrative appears to be a condensed version of the book “The Age of AI,” authored by Eric Schmidt, Henry Kissinger, and Daniel Huttenlocher.

Key Insights

“At the low end, AI is exacerbating cyber and disinformation threats and changing how states exercise targeted coercion against opponents. In the middle of the spectrum, warfare between conventional armed forces will feature more rapid actions and delegated decision-making that could make conflict harder to control. At the high end, AI-enabled military and intelligence capabilities may disrupt the fundamental premises of nuclear deterrence in ways that undermine strategic stability” (p. 289).

The paper begins by highlighting two significant phenomena. First, Schmidt observes the consolidation of network platforms driven by the need for extensive user bases. These platforms, equipped with sizable user populations, can absorb other service-providing platforms, ultimately leading to market oligopoly or monopoly. The second phenomenon involves countries gravitating toward domestically developed platforms to align with national laws and regulations. Schmidt expresses concern over foreign countries potentially influencing the lives of U.S. citizens through their digital platforms. Given his association with Google, which operates globally, this notion might appear paradoxical.

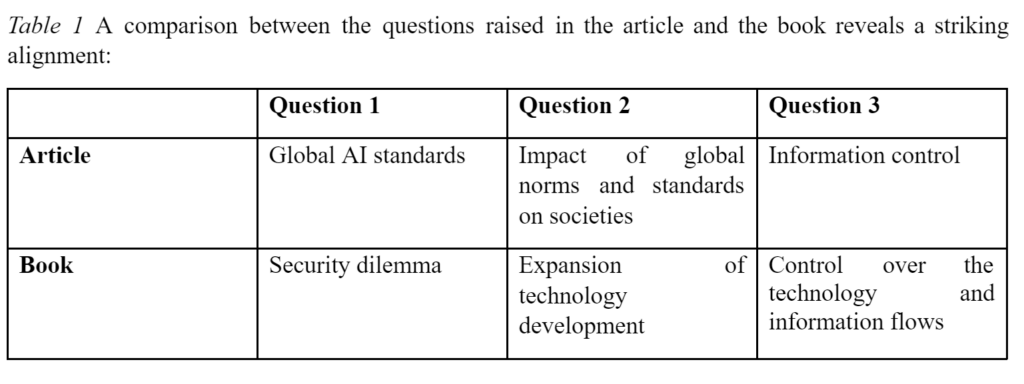

Three critical questions drive the paper’s exploration:

1. AI’s Design and Regulatory Parameters: Schmidt asks who determines AI’s design and its accompanying regulatory framework.

2. Impact on Social Norms and Institutions: The author examines the transformative impact of AI processes on societal norms and institutions.

3. Access to Information: Schmidt considers who holds access to the vast information generated through these platforms.

In his book “The Age of AI,” Schmidt extends his analysis by proposing that the new era of geopolitics, heavily influenced by AI, will address questions of military significance:

1. Degree of Inferiority in Crisis: What level of inferiority remains relevant in a crisis where each side maximizes its capabilities?

2. Required Margin of Superiority: How significant must a competitive state’s superiority margin be?

3. Threshold of Meaningful Superiority: At what point does superiority cease to impact performance?

This comparison underscores Schmidt’s emphasis on cross-border information control in a geopolitics anchored in digital technologies.

The author posits that AI and related technologies have ascended to the status of strategic technologies, paralleling the historical prominence of nuclear technology and space exploration. Schmidt highlights that AI, which spans diverse applications, introduces non-traditional security threats, such as deepfakes and the proliferation of misinformation, that endanger democracies worldwide. Moreover, the deployment of Lethal Autonomous Weapon Systems (LAWS) in ongoing conflicts, such as the war in Ukraine, adds to the security dilemma.

Citing his 2021 report for the National Security Commission on Artificial Intelligence, Schmidt notes that machine learning algorithms have moved from business applications to tools of intelligence and statecraft.

Bringing AI into security strategies involves nuanced considerations. Cybersecurity calls for distinct approaches to preemptive attacks, while regulation must avoid excessive restrictions so that innovation and economic growth are not stifled. Schmidt acknowledges that imposing mutual restraints on AI-based weaponry is difficult because its scope and manner of engagement are unpredictable. He recommends that nuclear powers establish common protocols to ensure nuclear stability, drawing from a realist perspective that places national interest at the forefront.

Between the lines

Schmidt’s call for the United States to counter Chinese AI development while preserving its strategic advantage reflects his belief in U.S. global leadership. The assertion that China and the U.S. are the primary AI leaders also aligns with his view of the competitive landscape. Despite his ties to Google, Schmidt’s stance echoes the realist school of thought, which holds that the international system is anarchic and that states conduct their foreign policy with national interest as the only priority.

The article argues that AI has created uncertainty and that only two powers can steer it. By taking a neo-realist stance, Eric Schmidt might encourage scholars in the West, or those with more access to Western literature, to reiterate the global influence and power of the US. Irrespective of the narrative the article is selling, it is true that digital technology is becoming ubiquitous. Once it is, cybersecurity becomes the fundamental aspect of national, social, and individual security. Every socio-economic activity is becoming digital. Social interactions, business, education, health, governance, and dating are a few important human activities, readily listed, that now take place mostly online. In this situation, the author asserts that AI is the strategic technology for which the US has to develop a comprehensive national strategy. While the author’s argument is anchored only to the US, the security threats emanating from loosely understood AI loom worldwide. Every country should revamp its approach to national security. The world has been digitalized; now, the ‘society’ is a ‘digital society.’

Eric Schmidt’s insights comprehensively examine AI’s impact on geopolitics and national security. His background as a former CEO and chairman of Google lends authority to his exploration of the challenges and dynamics at the intersection of AI, great power competition, and global security.