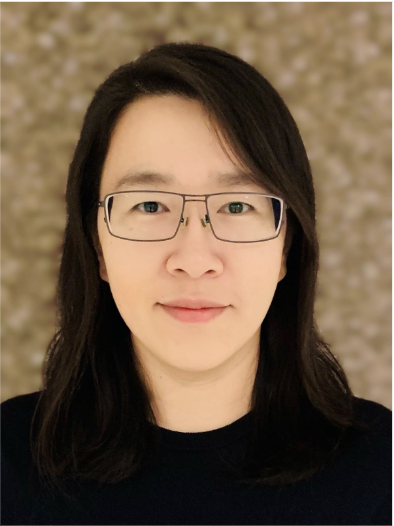

🔬 Research Summary by Dr Qinghua Lu, the team leader of the responsible AI science team at CSIRO’s Data61.

[Original paper by Qinghua Lu, Liming Zhu, Xiwei Xu, Zhenchang Xing, and Jon Whittle]

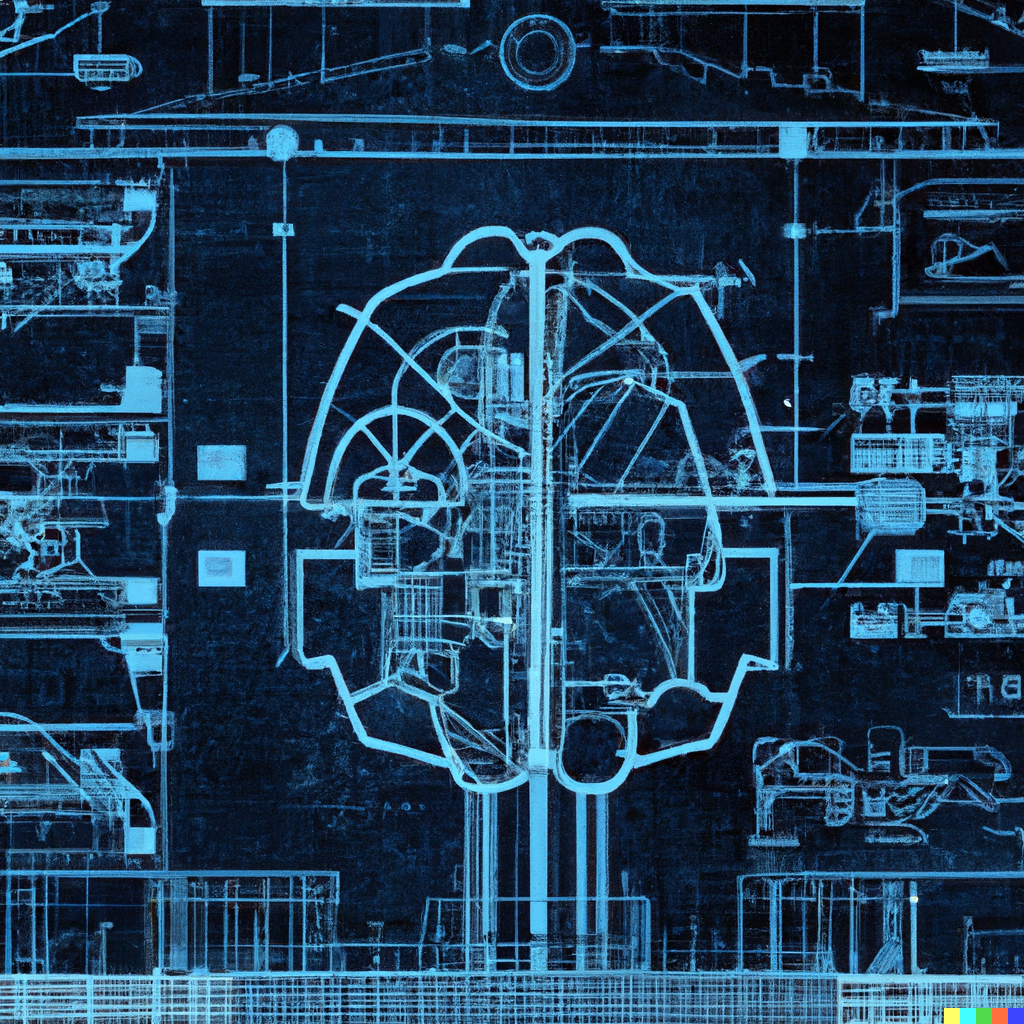

Overview: Because foundation models are still at an early stage, the design of foundation model-based systems has not yet been systematically explored, and there is little understanding of how introducing foundation models affects software architecture. This paper proposes a taxonomy of foundation model-based systems that classifies and compares the characteristics of foundation models and the design options for foundation model-based systems. The taxonomy comprises three categories: foundation model pretraining and fine-tuning, architecture design of foundation model-based systems, and responsible-AI-by-design.

Introduction

How can we design the software architecture of foundation model-based AI systems and incorporate responsible-AI-by-design into the architecture?

Taxonomies have been used in the software architecture community to understand existing technologies better. Taxonomies enable architects to explore the conceptual design space and make rigorous comparisons of different design options by categorizing existing work into a compact framework. Therefore, this paper presents a taxonomy that defines categories for classifying the key characteristics of foundation models and design options of foundation model-based systems. The proposed taxonomy comprises three categories: foundation model pretraining and fine-tuning, architecture design of foundation model-based systems, and responsible-AI-by-design.

Key Insights

Foundation model pretraining and fine-tuning

Using an external foundation model can be cost-effective, as organizations pay only for API calls. In addition, a well-established foundation model may deliver higher accuracy and generalize to a wider range of tasks. However, because external foundation models are black boxes, organizations have only limited means of ensuring responsible AI (RAI) qualities, so more RAI-related issues can arise. Conversely, training a sovereign foundation model in-house from scratch gives organizations complete control over the model pipeline, but it requires significant investment in data, computational power, and human resources.
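The cost side of this trade-off can be framed as a break-even calculation: pay-per-call API spending grows with usage, while a sovereign model carries a large upfront cost plus running costs. The sketch below is a minimal model under stated assumptions; the function names and every figure in the usage example are hypothetical and are not real provider prices.

```python
def api_cost(tokens: float, price_per_1k_tokens: float) -> float:
    """Pay-per-use cost of processing `tokens` tokens through an external API."""
    return tokens / 1000 * price_per_1k_tokens


def in_house_cost(upfront_cost: float, monthly_running_cost: float, months: int) -> float:
    """Total cost of a sovereign model: training up front plus hosting/staff per month."""
    return upfront_cost + monthly_running_cost * months


def break_even_tokens(upfront_cost: float, monthly_running_cost: float,
                      months: int, price_per_1k_tokens: float) -> float:
    """Token volume over `months` at which both options cost the same."""
    total = in_house_cost(upfront_cost, monthly_running_cost, months)
    return total / price_per_1k_tokens * 1000


if __name__ == "__main__":
    # Hypothetical figures for illustration only, not real prices.
    tokens = break_even_tokens(upfront_cost=1_000_000, monthly_running_cost=50_000,
                               months=12, price_per_1k_tokens=0.01)
    print(f"Break-even volume over 12 months: {tokens:.3g} tokens")
```

Below the break-even volume the API option is cheaper. The sketch captures only direct cost; it does not price the control over the model pipeline that the text cites as the main benefit of a sovereign model.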

In-context learning (ICL) adapts a foundation model to a specific task by including examples of that task in the prompt, rather than by fine-tuning the model. This lets the foundation model perform the task more accurately without updating any parameters. However, accuracy and RAI issues remain in the model outputs: only a limited number of input-output examples fit in a prompt, so the model may generate outputs that lack the context and understanding required for accurate inference. Alternatively, the parameters of the foundation model can be fine-tuned on labeled domain-specific data, in one of two ways. Full fine-tuning retrains all of the parameters, which is extremely expensive and often infeasible for large models (such as GPT-3, with 175B parameters). The other way is parameter-efficient fine-tuning, e.g., LoRA, which (compared with fully fine-tuning GPT-3) reduces the number of trainable parameters by 10,000 times and the GPU memory requirement by three times.
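LoRA's savings come from freezing a pretrained weight matrix W0 (d × k) and learning only a low-rank update BA, where B is d × r, A is r × k, and the rank r is much smaller than d and k. The pure-Python sketch below (nested lists, no ML framework) counts trainable parameters for one weight matrix and implements the adapted forward pass y = W0·x + (α/r)·B·A·x. Zero-initializing B follows the LoRA paper; the scaling value α, the rank, and the toy matrices are illustrative choices.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for the low-rank factors B (d x r) and A (r x k)."""
    return r * (d + k)


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """Linear layer y = W0 x + (alpha / r) * B (A x) with W0 frozen."""

    def __init__(self, w0: Matrix, r: int, alpha: float = 1.0, seed: int = 0):
        d, k = len(w0), len(w0[0])
        rng = random.Random(seed)
        self.w0 = w0  # frozen pretrained weights, never updated
        # Trainable factors: A gets small random values and B starts at zero,
        # so the adapted layer initially behaves exactly like the pretrained one.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]
        self.b = [[0.0] * r for _ in range(d)]
        self.scale = alpha / r

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.w0, x)
        update = matvec(self.b, matvec(self.a, x))
        return [y + self.scale * u for y, u in zip(base, update)]


if __name__ == "__main__":
    d = k = 12_288  # hidden size of GPT-3 175B
    print(full_finetune_params(d, k), lora_params(d, k, r=4))
    layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], r=1)
    print(layer.forward([1.0, 1.0]))  # equals W0 x while B is still zero
```

For one 12,288 × 12,288 matrix with r = 4, full fine-tuning trains 150,994,944 parameters versus 98,304 for LoRA, i.e., 1,536 times fewer for that matrix. The model-wide 10,000× figure is larger because LoRA is typically applied to only some weight matrices while the rest of the model stays frozen.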

Architecture design of foundation model-based systems

The foundation model can play an architectural role as one of the following connector services, linking the organization's other AI and non-AI components:

- Communication connector: Foundation models such as LLMs can serve as a communication connector that enables data transfer between software components.

- Coordination connector: Different software components can coordinate their computations through a foundation model.

- Conversion connector: Foundation models can function as an interface adapter for software components that use different data formats to communicate with each other.

- Facilitation connector: Foundation models can be integrated as facilitation connectors to optimize component interactions.
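As one illustration, the conversion-connector role can be sketched as a thin wrapper that prompts a foundation model to turn one component's output into the format another component expects, then validates the result before passing it on. The model is an injected `str -> str` callable; `fake_model` and `JsonConversionConnector` are hypothetical names, and the stub stands in for a hosted LLM so the sketch runs without any API.

```python
import csv
import io
import json
from typing import Callable

FoundationModel = Callable[[str], str]  # any prompt -> completion function


class JsonConversionConnector:
    """Conversion connector: asks a foundation model to turn a payload into JSON."""

    def __init__(self, model: FoundationModel):
        self.model = model

    def convert(self, payload: str) -> str:
        prompt = ("Convert the following data into a JSON array of objects. "
                  "Reply with the JSON only.\n\n" + payload)
        output = self.model(prompt)
        # Model output is not guaranteed to be well-formed, so validate before forwarding.
        try:
            json.loads(output)
        except json.JSONDecodeError as exc:
            raise ValueError(f"model returned invalid JSON: {output!r}") from exc
        return output


def fake_model(prompt: str) -> str:
    """Hypothetical deterministic stand-in for an LLM: parses the CSV after the blank line."""
    payload = prompt.split("\n\n", 1)[1]
    return json.dumps(list(csv.DictReader(io.StringIO(payload))))


if __name__ == "__main__":
    connector = JsonConversionConnector(fake_model)
    # Component A emits CSV; component B consumes JSON.
    print(connector.convert("name,role\nAda,engineer\n"))
```

Keeping the model behind a plain callable lets the connector be tested with a stub and swapped between providers without changing the components on either side.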

Using the default web interface can be inefficient for complex tasks or workflows, as users may need to prompt every single step. An autonomous agent, such as Auto-GPT, BabyAGI, or AgentGPT, can reduce the need for prompt engineering and its associated cost. With an autonomous agent, users prompt only the overall goal; the agent breaks the goal down into a set of tasks and completes them automatically using other software components, the internet, and other tools. However, relying solely on an autonomous agent can cause accuracy issues, as the agent may not fully understand the users’ intentions. Hybrid agents, such as GodMode, address this by keeping users in the loop to confirm the plan and provide feedback.
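The difference between an autonomous and a hybrid agent can be sketched as one control loop with an optional review step. The planner, executor, and reviewer below are hypothetical injected callables: in a real agent the planner would typically be a foundation model call and the executor would dispatch to tools, neither of which this sketch models.

```python
from typing import Callable, List, Optional

Planner = Callable[[str], List[str]]                   # goal -> tasks
Executor = Callable[[str], str]                        # task -> result
Reviewer = Callable[[List[str]], Optional[List[str]]]  # plan -> edited plan, or None to reject


def run_agent(goal: str, plan: Planner, execute: Executor,
              review: Optional[Reviewer] = None) -> List[str]:
    """Autonomous agent when `review` is None; hybrid agent when a human reviewer is supplied."""
    tasks = plan(goal)  # the user prompts only the overall goal
    if review is not None:
        reviewed = review(tasks)  # user confirms, edits, or rejects the plan
        if reviewed is None:
            return []  # plan rejected: nothing is executed
        tasks = reviewed
    return [execute(task) for task in tasks]


def demo_plan(goal: str) -> List[str]:
    return [f"search: {goal}", f"summarize: {goal}"]


def demo_execute(task: str) -> str:
    return f"done({task})"


def drop_search(tasks: List[str]) -> List[str]:
    # Simulated user feedback: skip web searches.
    return [t for t in tasks if not t.startswith("search")]


if __name__ == "__main__":
    print(run_agent("market trends", demo_plan, demo_execute))                      # autonomous
    print(run_agent("market trends", demo_plan, demo_execute, review=drop_search))  # hybrid
```

The review hook is the only structural difference between the two agent styles, which is why the accuracy gain of hybrid agents comes at the cost of user involvement per plan.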

It is often challenging to leverage an organization’s internal data (e.g., documents, images, videos, and audio) to improve the accuracy of foundation models, as foundation models are often inaccessible or expensive to retrain or fine-tune. There are two design options for addressing this. The first integrates the internal data through prompts. However, the context is bounded by a token limit; e.g., GPT-4 launched with an 8,192-token context window and a 32,768-token variant, the latter equivalent to roughly 50 pages of text. Context data larger than the token limit cannot be supplied in a single prompt. The second design option uses a vector database (such as Pinecone or Milvus) to store personal or organizational internal data as vector embeddings. These embeddings support similarity searches that retrieve the internal data relevant to a specific prompt. Compared with integrating internal data directly through prompts, using a vector database is more cost-efficient and can achieve better accuracy and RAI-related properties.
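The vector-database option follows a retrieve-then-prompt pattern: embed the internal documents once, embed each query, rank documents by similarity, and place only the top matches in the prompt so it stays within the token limit. The sketch below assumes a toy bag-of-words embedding and a brute-force in-memory index (`InMemoryVectorStore` is a hypothetical name) so it runs on the standard library alone; a production system would use an embedding model and a vector database such as Pinecone or Milvus, whose APIs are not shown here.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Brute-force similarity search over stored document embeddings."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    @classmethod
    def from_documents(cls, docs: List[str]) -> "InMemoryVectorStore":
        store = cls()
        for doc in docs:
            store.items.append((doc, embed(doc)))
        return store

    def search(self, query: str, k: int = 2) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_prompt(question: str, store: InMemoryVectorStore, k: int = 2) -> str:
    """Insert only the top-k retrieved documents into the prompt."""
    context = "\n".join(store.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    store = InMemoryVectorStore.from_documents([
        "Leave requests must be approved by a line manager.",
        "The VPN must be used on public Wi-Fi.",
        "Expense claims are due within 30 days.",
    ])
    print(build_prompt("How do I get my leave approved?", store, k=1))
```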

Responsible-AI-by-Design

A key challenge in designing a foundation model-based system is controlling the risks that foundation models introduce. The taxonomy classifies the risk-control options at two levels:

- Model-level risk control
  - Pretraining
    - Reinforcement learning from human feedback (RLHF)
    - Adversarial testing
  - Through APIs
    - Foundation model via APIs
    - Prompt refusal
    - Prompt modification
    - Differential privacy
- System-level risk control
  - Intermediate process
    - Think aloud
    - Think silently
  - Task execution
    - Immutable agreement
    - Verifier-in-the-loop
      - Human verifier
      - AI verifier
      - Hybrid verifier
  - System audit
    - RAI black-box
    - Co-versioning registry
    - Standardized reporting
    - RAI bill of materials (BOM)
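Several of the controls in this taxonomy can be combined into one guarded call path: prompt refusal, prompt modification, and a hybrid verifier in which an AI verifier screens outputs and a human verifier decides the cases it flags. The sketch below is illustrative only; the blocklist, email-redaction rule, and appended instruction are hypothetical placeholders (real deployments typically use trained classifiers and organization-specific policies), and differential privacy, RAI black-boxes, and the other items are not modeled.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

BLOCKED_PHRASES = ("build a weapon", "steal credentials")  # hypothetical refusal policy
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


@dataclass
class Result:
    status: str                  # "refused", "accepted", or "rejected"
    output: Optional[str] = None


def should_refuse(prompt: str) -> bool:
    """Prompt refusal: block prompts that match the policy before they reach the model."""
    lowered = prompt.lower()
    return any(phrase in lowered for phrase in BLOCKED_PHRASES)


def modify_prompt(prompt: str) -> str:
    """Prompt modification: redact email addresses and append a safety instruction."""
    redacted = EMAIL.sub("[REDACTED EMAIL]", prompt)
    return redacted + "\n\nDo not reveal personal data."


def guarded_call(prompt: str,
                 model: Callable[[str], str],
                 ai_verifier: Callable[[str], bool],
                 human_verifier: Optional[Callable[[str], bool]] = None) -> Result:
    if should_refuse(prompt):
        return Result("refused")
    output = model(modify_prompt(prompt))
    if ai_verifier(output):
        return Result("accepted", output)
    # Hybrid verifier: outputs the AI verifier flags go to a human, if one is available.
    if human_verifier is not None and human_verifier(output):
        return Result("accepted", output)
    return Result("rejected")


if __name__ == "__main__":
    echo = lambda p: p  # stand-in model that returns its (modified) prompt
    print(guarded_call("Email bob@example.com the report", echo, lambda o: True))
```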

Between the lines

This paper proposes a taxonomy of foundation models and foundation model-based systems, intended to support key architectural decisions that affect the performance and RAI-related properties of such systems. Design patterns are another effective means of classifying and organizing existing solutions, so as a next step we plan to collect design patterns for building foundation model-based systems.