✍️ Original article by Ravit Dotan, a researcher in AI ethics, impact investing, philosophy of science, feminist philosophy, and their intersections.

In the previous article, I described my framework for implementing AI ethics, which builds on research in responsible innovation, AI ethics, and philosophy. In a nutshell, I recommend that organizations that develop or use AI technologies focus on three pillars of activity around responsible AI:

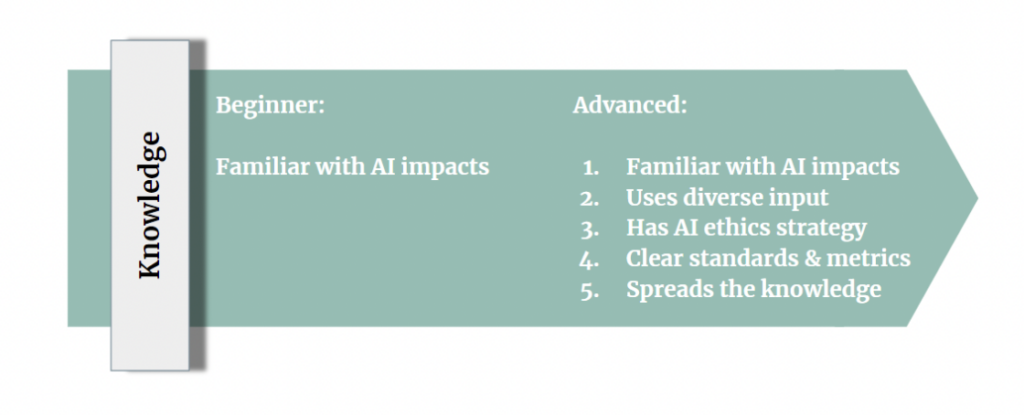

- Knowledge: What should you do to understand how your AI may impact people, society, and the environment?

- Workflow: How should you integrate responsible AI components into your workflows and incentives?

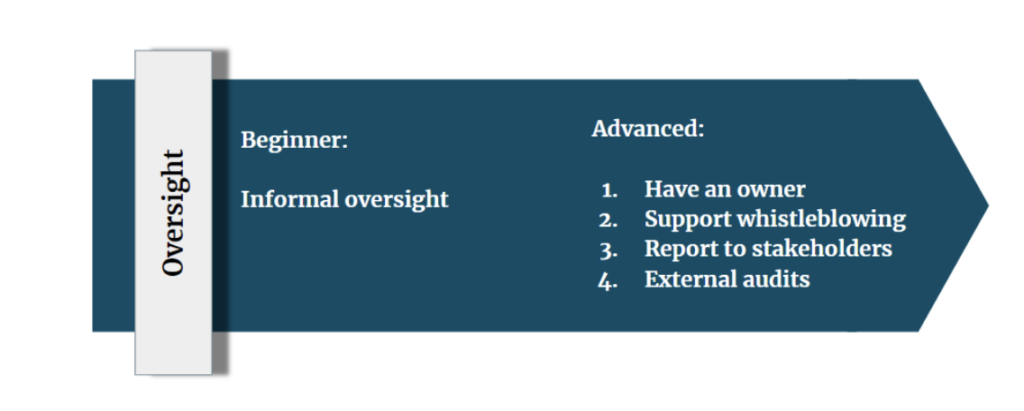

- Oversight: What should you do to keep yourself accountable?

This entry presents a case study: I describe how I use my framework to increase AI ethics maturity in a startup, Bria.ai. Bria is a synthetic imaging startup (between seed and Series A) with a vision to reinvent visual communication. Bria has developed a platform to generate, change, and repurpose images and videos. For example, it can recast a new actor in an existing image, create images out of text prompts, and add 3D motion to still photographs. The company’s customers are agencies and creative platforms.

I serve as Bria’s Responsible AI Advocate, leading all aspects of responsible AI efforts in the company, including R&D, HR, sales, operations, and marketing. In this article, I describe the company’s first steps in increasing its AI ethics maturity, a journey we have been on since May 2022.

1. Knowledge

As discussed in the previous article, organizations that are beginners in AI ethics work to understand the impacts of their AI systems. Advanced organizations also develop standards and metrics informed by diverse input, and they spread this knowledge throughout the organization.

Accordingly, the first step at Bria was to increase the company’s understanding of its technology’s potential positive and negative impacts. To do so, I led an in-person workshop in which all of Bria’s employees participated (around 20 people). The structure of the workshop was as follows:

- Introduction to AI ethics – a talk and Q&A, including case studies

- Best and worst case scenarios – participants discussed two questions: What is the worst-case scenario for Bria regarding AI ethics? What would it mean for Bria to excel in AI ethics?

- What should Bria do to increase its AI responsibility? Participants discussed strategies for increasing AI ethics knowledge, workflow integration, and oversight.

The workshop helped raise awareness of responsible AI issues, form a shared understanding of which AI risks and opportunities are the most important to the employees, and brainstorm how the company can integrate this knowledge into its work.

Based on the workshop and on additional feedback from employees across levels and departments, we created a high-level plan to guide the company’s responsible AI efforts (also available on Bria’s website):

- Develop our knowledge and implementation of responsible AI on an ongoing basis. Our focus topics include bias, privacy, explainability, transparency, and copyright issues.

- Include responsible AI in the workflows of all departments, including R&D, sales, marketing, HR, and creative.

- Prioritize proactive bias prevention – in our datasets, measurement tools, and outputs.

- Build guardrails for responsible AI, privacy, and copyright:

  - We do not generate synthetic videos of people speaking (“talking heads”).

  - We mark the images we generate as synthetic in their file properties.

  - We target our platform at businesses that want to tell visual stories for marketing purposes, not at individuals.

- Give free access to nonprofit and academic users who can use the platform to promote goals aligned with our values, including:

  - Democratizing creativity

  - Mitigating the risks that deepfakes pose to people and society

  - Encouraging diversity and inclusion in visuals

- Make a meaningful contribution to the conversation about responsible AI in the sector of synthetic imaging.

As you will see in the discussion of the following pillars, Bria’s learning continues through onboarding, ongoing training, and ethics feedback collected from prospective and existing clients. In addition, Bria is in the process of creating concrete standards and metrics, starting with bias mitigation.

2. Workflow

As discussed in the previous entry, beginners in AI ethics incorporate AI ethics components into their workflows sporadically. Advanced organizations do so systematically and holistically.

To incorporate AI ethics into Bria’s workflows systematically and holistically, the head of each department created plans and OKRs for Q3 and beyond in consultation with me and the CEO. The new workflows are as follows:

R&D

- New features, tools, and capabilities will undergo internal ethics review before development begins and before they are released.

- Existing features, tools, and capabilities will be reviewed gradually, and each will undergo an ethics review when it is next updated.

- The dataset the company uses for testing is currently undergoing an internal review. Bria is checking how diverse it is and plans to improve it based on the results.

- After the dataset review is complete, the company will review the methodology it uses to evaluate the quality of its products, checking for unintended biases and making improvements where needed.

- The company is adding explanations throughout its platform to increase the explainability and transparency of the product.

- The head of R&D and I meet regularly to sync and ensure that nothing falls through the cracks.

HR

- The company added a responsible AI section to the company code of conduct, which includes internal reporting policies.

- The company is working on adding responsible AI training to the onboarding process and examining whether and how to include AI ethics in all other aspects of the business related to HR, including recruiting, contracts, and off-boarding.

- The head of HR and I meet regularly to sync and ensure that nothing falls through the cracks.

Sales

- The head of Sales and I meet regularly to discuss ethical questions from prospective customers. The goal is to understand the concerns better and find ways to address them.

- The company committed to responsible AI development in its contracts with customers. In addition, the company is looking into adding contract terms that set out its expectations for customers’ responsible use of the platform.

Operations

- The company is currently setting up its operations department, which will manage the company’s relationships with existing customers (all of which are businesses). The head of Operations and I are working to embed responsible AI practices in the workflows that will be created. The goal is to better understand and address existing customers’ ethical concerns.

Marketing

- The company added a responsible AI section to the website. The goal is to allow anyone to engage and provide feedback.

- The company will release articles on responsible AI topics to contribute to conversations in the field. For example, the CEO is writing an essay about the company’s responsible AI journey from his perspective.

- The company decided to engage only with marketing vendors whose values align with its own.

Responsible AI Department

- Besides working with the heads of all departments and helping where needed, I lead independent responsible AI projects.

- Responsible AI Challenge – I am organizing a competition for developing proofs of concept for responsible AI solutions.

- Contributing to conversations in the field – for example, Bria is currently discussing Partnership on AI’s ethical guidelines for synthetic media, and I will submit feedback on Bria’s behalf.

- Communicating with internal and external stakeholders about Bria’s responsible AI efforts, which includes presenting these efforts internally to employees and externally at conferences.

- Looking for and working with non-profit and research partners that can use the platform to create a positive impact.

3. Oversight

As discussed in the last entry, beginners in AI ethics use informal oversight structures for their AI ethics processes. Advanced organizations use multiple oversight structures that provide different kinds of accountability.

Accordingly, to keep itself accountable, Bria set up the following oversight structures:

- There is a person in charge of the company’s responsible AI activities (that person is me). The Responsible AI Advocate reports directly to the CEO, works closely with the heads of all departments, supports all the employees in responsible AI efforts, and leads independent projects. We chose the title “Responsible AI Advocate” after careful consideration. This title is meant to signal that while one person advocates for responsible AI, everyone is expected to take part in the efforts.

- Channels for discussing responsible AI concerns. Employees are encouraged to discuss responsible AI questions, comments, ideas, and concerns with the head of the relevant department or with the Responsible AI Advocate. In addition, employees may ask the Responsible AI Advocate to keep their identity concealed.

- Reports to internal and external stakeholders. Bria presents its responsible AI activities in articles, at conferences, and on the company’s website. The goal is to show what the company is doing so that anyone can engage and provide feedback. In addition, the strategy and progress are discussed in company-wide meetings to keep internal stakeholders informed.

4. Summary and the next installment

In the previous installment, I described my approach to implementing responsible AI, which builds on best practices from responsible innovation, AI ethics, and philosophy. I recommended that companies that develop AI increase their AI responsibility by focusing on three pillars: knowledge, workflow, and oversight. In this installment, I explained how I implemented my framework in an AI startup, Bria. In the next installment, I will explain how investors can use my framework to conduct due diligence and guide portfolio companies. Feedback is very welcome through this form.